Expo

Overview

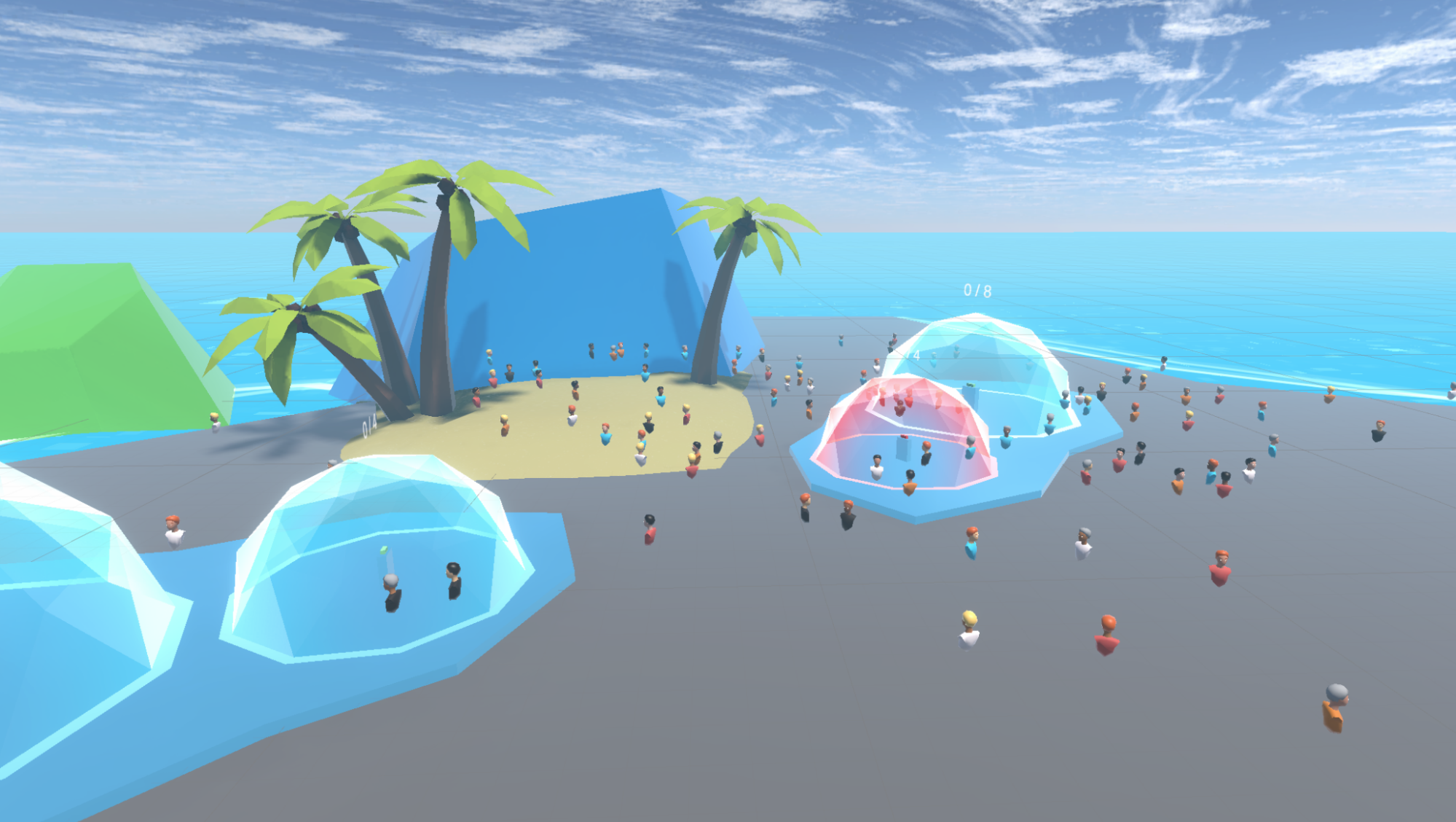

The Fusion Expo sample demonstrates one approach to developing a social application for up to 100 players with Fusion.

Each player is represented by an avatar and can talk to other players located in the same chat bubble, thanks to the Photon Voice SDK.

Some of the highlights of this sample are:

- First, the player customizes their avatar on the avatar selection screen.

- Then, they can join the Expo scene. If the player launches the sample on a PC or Mac, they can choose between the Desktop mode (using keyboard & mouse) and the VR mode (Meta Quest headset).

- Players can talk to each other if they are located in the same static chat bubble. In each static chat bubble, a lock button is available to prevent new players from entering.

- Also, if two players are close to each other, a dynamic chat bubble is created around them.

- Some 3D pens are available to create 3D drawings. Each 3D drawing can be moved using its anchor.

- Also, a classic whiteboard is available. Each drawing can be moved using the anchor.

- Players can move to a new location (new scene loading).

More technical details are provided directly in the code comments.

Technical Info

- This sample uses the Shared Mode topology.

- Builds are available for PC, Mac & Meta Quest.

- The project has been developed with Unity 2021.3, Fusion 2 and Photon Voice 2.53.

- Two avatar solutions are supported (simple homemade avatars & Ready Player Me avatars).

Before you start

To run the sample:

- Create a Fusion AppId in the PhotonEngine Dashboard and paste it into the App Id Fusion field in Real Time Settings (reachable from the Fusion menu).

- Create a Voice AppId in the PhotonEngine Dashboard and paste it into the App Id Voice field in Real Time Settings.

- Then load the AvatarSelection scene and press Play.

Download

| Version | Release Date | Download |
|---|---|---|
| 2.0.0 | Apr 18, 2024 | Fusion Expo 2.0.0 Build 503 |

Download Binaries

A demo version of Expo is available below:

Handling Input

Desktop

Keyboard

- Move: WASD or ZQSD to walk

- Rotate: QE or AE to rotate

- Pen color: C to change the pen color

- Menu: Esc to open or close the application menu

- Bot spawn: press B to spawn one bot. Hold it for longer than 1 second to create 50 bots on release.
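The short press versus long press rule for bot spawning can be sketched as follows. This is an illustration of the logic only, written in Python rather than in the sample's Unity C#. The function name and constants are hypothetical, and the sketch assumes the decision is taken when the key is released.

```python
# Hypothetical helper: names and structure are illustrative, not the sample's code.
LONG_PRESS_SECONDS = 1.0    # threshold stated in the controls list
BOTS_ON_SHORT_PRESS = 1
BOTS_ON_LONG_PRESS = 50


def bots_to_spawn(pressed_at: float, released_at: float) -> int:
    """Return how many bots to create when the spawn key is released."""
    held_for = released_at - pressed_at
    if held_for < 0:
        raise ValueError("release time precedes press time")
    # Only a press held strictly longer than the threshold counts as a long press.
    if held_for > LONG_PRESS_SECONDS:
        return BOTS_ON_LONG_PRESS
    return BOTS_ON_SHORT_PRESS
```

The same rule would apply to the menu button on the left Meta Quest controller.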

Mouse

- Move: left click with your mouse to display a pointer. You will teleport to any accepted target on release

- Rotate: keep the right mouse button pressed and move the mouse to rotate the point of view

- Move & rotate: keep both the left and right buttons pressed to move forward. You can still move the mouse to rotate

- Grab & use (3D pens): put the mouse over the object and grab it using the left mouse button. You can then use it by pressing the space key

Meta Quest

- Teleport: press A, B, X, Y, or any stick to display a pointer. You will teleport to any accepted target on release

- Touch (e.g. for chat bubble lock buttons): simply put your hand over a button to toggle it

- Grab: first put your hand over the object, then grab it using the controller grab button

- Bot spawn: press the menu button on the left controller to spawn one bot. Hold it for longer than 1 second to create 50 bots on release

- Pen color: move the joystick up or down to change the pen color

Folder Structure

The main folder /Expo contains all elements specific to this sample.

The folder /IndustriesComponents contains components shared with other Industries samples, such as the Fusion Meeting sample.

The /Photon folder contains the Fusion and Photon Voice SDKs.

The /Photon/FusionXRShared folder contains the rig and grabbing logic coming from the VR shared sample, creating a FusionXRShared light SDK that can be shared with other projects.

The /Photon/FusionAddons folder contains the Industries Addons used in this sample.

The /XR folder contains configuration files for virtual reality.

Architecture overview

The Expo sample relies on the same code base as the one described in the VR Shared page, notably for the rig synchronization.

The grabbing system used here is the alternative "local rig grabbing" implementation described in the VR Shared - Local rig grabbing page.

Aside from this base, the sample, like the other Industries samples, contains some extensions to the FusionXRShared or Industries Addons, to handle some reusable features like synchronized rays, locomotion validation, touching, teleportation smoothing or a gazing system.

Audio

The VoiceConnection and FusionVoiceBridge components start the Photon Voice audio connection alongside the Fusion session, while the Recorder component captures the microphone input.

For Oculus Quest builds, additional user authorisations are required, and this request is managed by the MicrophoneAuthorization script.

The user prefab contains a Speaker and VoiceNetworkObject placed on its head, to project spatialized sound upon receiving voice.

For more details on Photon Voice integration with Fusion, see this page: https://doc.photonengine.com/en-us/voice/current/getting-started/voice-for-fusion

Bots

To demonstrate how the sample would support an expo full of people, bots can be created in addition to regular users.

Bots are regular networked prefabs, whose voice has been disabled, and which are driven by a navigation mesh instead of user inputs.

The BotNetworkRig class also uses the locomotion validation system to only move into valid positions.

Used Industries Addons

We provide our Industries Circle members with a list of reusable addons to speed up 3D/XR application prototyping. See Industries Addons for more details. Here are the addons used in this sample.

Spaces

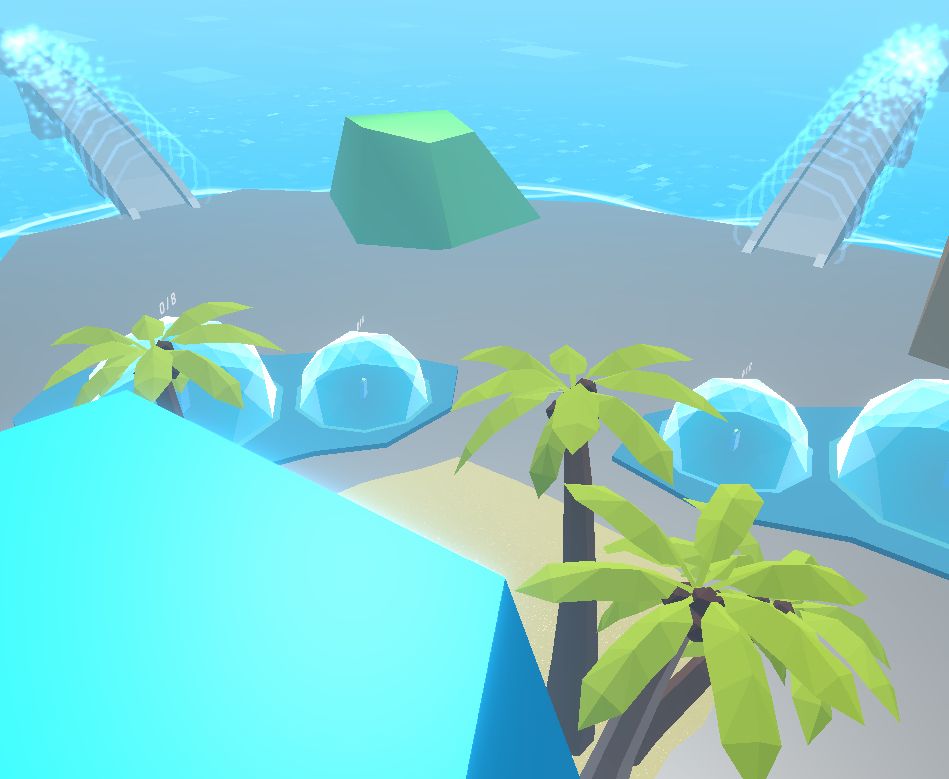

The player can move to a new world by using the bridges located in the scene. Each bridge triggers the loading of a specific scene.

To do so, each bridge has a SpaceLoader class in charge of loading a new scene when the player collides with its box collider.

For example, the main scene, called ExpoRoomMainWithBridge, has two bridges. The first bridge loads the ExpoRoomNorth scene, while the other bridge loads the ExpoRoomSecondScene scene.

As a result, there are three possibilities for the player spawn position:

- a random position close to the palm trees when the player first joins the game,

- a position near the first bridge when the player comes back from the ExpoRoomNorth scene,

- a position near the second bridge when the player comes back from the ExpoRoomSecondScene scene.

To determine the correct spawn position, a SpawnManager is required in each scene.

It changes the default behavior of the RandomizeStartPosition component, to define where the player should appear when they come from another scene (or in case of reconnection).
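The spawn selection described above can be sketched as a lookup keyed on the scene the player arrives from. This is not the SpawnManager code: it is a Python illustration of the decision only. The coordinates, the `pick_spawn_position` name and the square random area are all assumptions.

```python
import random
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical coordinates: in the sample, spawn points are defined in each scene.
BRIDGE_SPAWNS = {
    "ExpoRoomNorth": (10.0, 0.0, 25.0),         # near the first bridge
    "ExpoRoomSecondScene": (-12.0, 0.0, 25.0),  # near the second bridge
}
DEFAULT_CENTER: Vec3 = (0.0, 0.0, 0.0)  # stand-in for the palm tree area
DEFAULT_RADIUS = 3.0                    # half-size of the random spawn square


def pick_spawn_position(previous_scene: Optional[str], rng: random.Random) -> Vec3:
    """Choose where a player appears in the main scene."""
    if previous_scene in BRIDGE_SPAWNS:
        # Coming back through a bridge: appear next to that bridge.
        return BRIDGE_SPAWNS[previous_scene]
    # First join (or unknown origin): random position around the default area.
    dx = rng.uniform(-DEFAULT_RADIUS, DEFAULT_RADIUS)
    dz = rng.uniform(-DEFAULT_RADIUS, DEFAULT_RADIUS)
    return (DEFAULT_CENTER[0] + dx, DEFAULT_CENTER[1], DEFAULT_CENTER[2] + dz)
```

Passing the random generator as a parameter keeps the function deterministic under test.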

Users can also join the public group or a private group by specifying a group number. This choice can be made in the avatar selection screen or later from the application menu.

See Space Industries addons for more details.

Connection Manager

We use the ConnectionManager addon to manage the connection launch and spawn the user representation.

See ConnectionManager Addons for more details.

Extended Rig Selection

We use this addon to switch between the two rigs required in this sample:

- VR rig for the Meta Quest build,

- Desktop rig for the Windows and Mac clients.

See Extended Rig Selection Industries Addons for more details.

Avatar

This addon supports avatar functionality, including a set of simple avatars.

See Avatar Industries Addons for more details.

Ready Player Me Avatar

This addon handles the Ready Player Me avatars integration.

See Ready Player Me Avatar Industries Addons for more details.

Social distancing

To ensure comfort and proxemic distance, we use the social distancing addon.

See Social distancing Industries Addons for more details.

Locomotion validation

We use the locomotion validation addon to limit the player's movements (stay in defined scenes limits).
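The idea of rejecting moves that leave the allowed area can be sketched as below. This is an illustration only, not the addon's API: `SceneLimits` and `validated_move` are hypothetical names, and the axis-aligned rectangle is a simplifying assumption about how scene limits are described.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class SceneLimits:
    """Hypothetical axis-aligned walkable area on the ground plane (x, z)."""
    min_x: float
    max_x: float
    min_z: float
    max_z: float

    def contains(self, position: Vec3) -> bool:
        x, _, z = position
        return self.min_x <= x <= self.max_x and self.min_z <= z <= self.max_z


def validated_move(current: Vec3, requested: Vec3, limits: SceneLimits) -> Vec3:
    """Accept the requested position only if it stays inside the limits."""
    return requested if limits.contains(requested) else current
```

A bot or a player requesting a position outside the limits simply stays where it is in this sketch.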

See Locomotion validation Industries Addons for more details.

Dynamic Audio group

We use the dynamic audio group addon to enable users to chat together, while taking into account the distance between users to optimize comfort and bandwidth consumption.
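A distance-based filter of this kind can be sketched as follows. The threshold value and the `audible_players` function are hypothetical. The addon's actual grouping logic is not described here and may differ.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]

HEARING_DISTANCE = 8.0  # hypothetical threshold, in meters


def audible_players(listener: str, positions: Dict[str, Vec3]) -> List[str]:
    """Return the players close enough to the listener to be heard."""
    origin = positions[listener]
    return sorted(
        name
        for name, position in positions.items()
        if name != listener and math.dist(origin, position) <= HEARING_DISTANCE
    )
```

Limiting the audible set to nearby players is what would save bandwidth: voice streams from distant users do not need to be received.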

See Dynamic Audio group Industries Addons for more details.

Audio Room

The AudioRoom addon is used to soundproof chat bubbles.

See Audio Room Industries addons for more details.

Chat Bubble

This addon provides the static chat bubbles included in the Expo scenes. Additionally, it handles the dynamic chat bubble that appears when two players are close to each other.
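The lock behavior of a static chat bubble (a locked bubble refuses new players while keeping the ones already inside) can be sketched as a small state holder. The class and method names are hypothetical and do not reflect the addon's API.

```python
from typing import Set


class StaticChatBubble:
    """Hypothetical model of a static chat bubble with a lock button."""

    def __init__(self) -> None:
        self.locked = False
        self.members: Set[str] = set()

    def toggle_lock(self) -> bool:
        """Flip the lock state, as a touch on the lock button would."""
        self.locked = not self.locked
        return self.locked

    def try_enter(self, player_id: str) -> bool:
        """Admit the player unless the bubble is locked."""
        if player_id in self.members:
            return True   # already inside: the lock does not eject anyone
        if self.locked:
            return False  # locked: new players are refused
        self.members.add(player_id)
        return True

    def leave(self, player_id: str) -> None:
        self.members.discard(player_id)
```

In this sketch, unlocking the bubble immediately lets waiting players enter on their next attempt.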

See Chat Bubble Industries Addons for more details.

Drawing

The main Expo scenes contain whiteboards with 2D pens and several 3D pens. When a drawing is complete (i.e. when the user releases the "trigger" button), a handle is displayed. This allows the user to move 2D or 3D drawings.

See 3D & 2D drawing Industries Addons for more details.

Data Sync Helpers

This addon is used here to synchronize the 3D/2D drawing points.

See Data Sync Helpers Industries Addons for more details.

Blocking contact

We use this addon to block 2D pens and drawing pins on whiteboard surfaces.

See Blocking contact Industries Addons for more details.

Virtual Keyboard

A virtual keyboard is required to customize the player name or room ID.

See Virtual Keyboard Industries Addons for more details.

Touch Hover

This addon is used to increase player interactions with 3D objects or UI elements. Interactions can be performed with virtual hands or a ray beamer.

See Touch Hover Industries Addons for more details.

Feedback

We use the Feedback addon to centralize the sounds used in the application and to manage haptic & audio feedback.

See Feedback Addons for more details.

Third party components

- Oculus Integration

- Oculus Lipsync

- Oculus Sample Framework hands

- Ready Player Me

- Sounds
