Overview

Introduction

The FPS Template bots use the standard HFSM implementation from Bot SDK and complement it with additional systems that support more complex AI behavior. These systems are:

- Sensors for gathering information about the world surrounding the AI Agents (e.g. eyes sensor for detecting an enemy);

- Memory for remembering certain events (e.g. memory of lost enemy);

- Animation States that tell how movement- and rotation-related decisions made in the HFSM should be executed; and,

- Rotation for advanced first person look behavior.

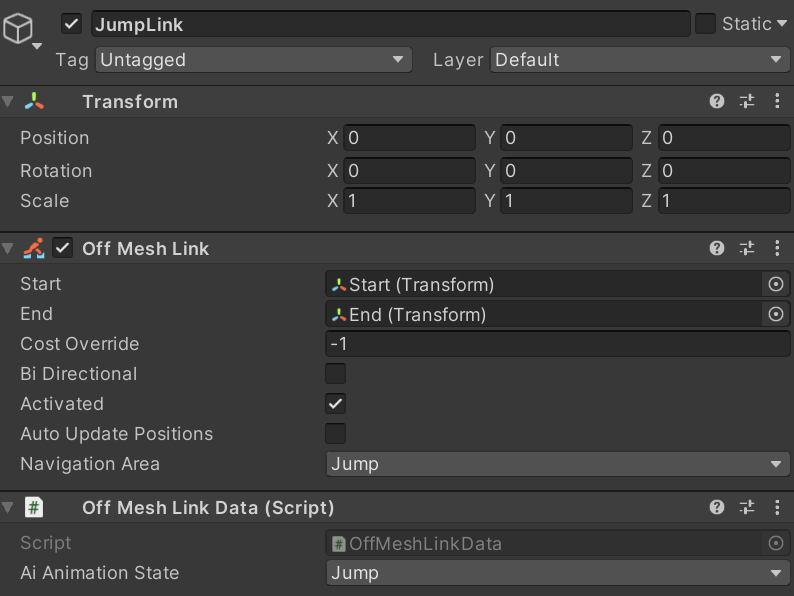

The FPS Template also expands the capabilities of NavMesh links to provide finer control over agent behavior while traversing a link.

What to expect

The FPS Template bots' behavior includes:

- Actively searching for an enemy based on specified waypoints in the scene, or based on spawn points if waypoints are not available.

- Attacking an enemy and searching for a good attack position. The attack position is changed periodically. Strafing is also supported and depends on the selected bot difficulty.

- Pursuing a lost enemy.

- Investigating incoming damage.

- Investigating fire sounds within hearing range.

- Going for a nearby pickup.

AI Execution and Data Flow

The AI loop starts with sensors. Sensors define and execute the behavior for gathering and processing information about the world, such as audible or visible targets and nearby pickups. The information captured by the sensors is stored in either the AI agent's AIBlackboard component or its AIMemory component. The HFSM, which acts as the AI agent's metaphorical brain, then draws on the AIBlackboard and AIMemory to make decisions. The result of each decision is stored as either AI data or InputDesires. If the HFSM is the AI agent's brain, the animation states are its muscles: the Animation State asset takes the AI agent's data and converts it into specific component desires for actions such as movement (MovementDesires) and look (LookDesires).
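The flow described above can be illustrated with a minimal, language-agnostic sketch. It is written in Python for brevity and is not the Bot SDK or FPS Template API: the component names follow the text, but every field and function (`visible_enemy`, `last_known_enemy`, `AIData`, `eyes_sensor`, `decide`, `animation_state`, `tick`) is a hypothetical stand-in, and the three-branch `decide` function only approximates what an HFSM would do.

```python
from dataclasses import dataclass
from math import hypot
from typing import Optional, Tuple

Vec2 = Tuple[float, float]


@dataclass
class AIBlackboard:
    # Hypothetical field: written by an "eyes" sensor every tick.
    visible_enemy: Optional[Vec2] = None


@dataclass
class AIMemory:
    # Hypothetical field: position where an enemy was last seen.
    last_known_enemy: Optional[Vec2] = None


@dataclass
class AIData:
    # Hypothetical decision output consumed by the animation state.
    state: str = "search"
    move_target: Optional[Vec2] = None
    look_target: Optional[Vec2] = None


@dataclass
class MovementDesires:
    direction: Vec2 = (0.0, 0.0)


@dataclass
class LookDesires:
    target: Optional[Vec2] = None


def eyes_sensor(agent: Vec2, enemy: Optional[Vec2], sight_range: float,
                blackboard: AIBlackboard, memory: AIMemory) -> None:
    """Sensor step: record what is visible and remember the last sighting."""
    if enemy is not None and hypot(enemy[0] - agent[0], enemy[1] - agent[1]) <= sight_range:
        blackboard.visible_enemy = enemy
        memory.last_known_enemy = enemy
    else:
        blackboard.visible_enemy = None


def decide(blackboard: AIBlackboard, memory: AIMemory) -> AIData:
    """Decision step (stand-in for the HFSM): reads only blackboard and memory."""
    if blackboard.visible_enemy is not None:
        return AIData("attack", None, blackboard.visible_enemy)
    if memory.last_known_enemy is not None:
        return AIData("pursue", memory.last_known_enemy, memory.last_known_enemy)
    return AIData("search")


def animation_state(agent: Vec2, data: AIData) -> Tuple[MovementDesires, LookDesires]:
    """Execution step: convert the decision into component desires."""
    direction = (0.0, 0.0)
    if data.move_target is not None:
        dx, dy = data.move_target[0] - agent[0], data.move_target[1] - agent[1]
        length = hypot(dx, dy)
        if length > 0.0:
            direction = (dx / length, dy / length)
    return MovementDesires(direction), LookDesires(data.look_target)


def tick(agent: Vec2, enemy: Optional[Vec2], sight_range: float,
         blackboard: AIBlackboard, memory: AIMemory):
    """One AI update: sensors, then decision, then animation state."""
    eyes_sensor(agent, enemy, sight_range, blackboard, memory)
    data = decide(blackboard, memory)
    movement, look = animation_state(agent, data)
    return data, movement, look
```

In this sketch the decision step never reads the world directly; it only sees what the sensor wrote. That separation is what lets an agent keep pursuing a remembered position after the enemy leaves its sight range.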

Unified Architecture for AI and Players

When agent processing starts, the input is taken either from player inputs (and commands) or from AI-generated input stored in the agent's InputDesires.

AI systems never directly modify the agent's state (e.g. position or rotation). All output is written into the InputDesires struct, so the result of any decision is always just InputDesires. This allows systems to treat players and AI agents identically. In other words, from this point onwards all processes are identical for both AI agents and players: InputDesires are first preprocessed by some systems to create the relevant component desires and then postprocessed by other systems that are in charge of executing actions such as movement, weapons and abilities.
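As a rough illustration of this design, the sketch below shows one way a single code path can serve both kinds of agent. It is written in Python and is not the FPS Template's code: `Agent`, its fields, the raw input dictionary keys and all function names are hypothetical, and the preprocessing and postprocessing stages are collapsed into one `apply_desires` function for brevity.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class InputDesires:
    # Hypothetical fields; the real struct is defined by the template.
    move: Tuple[float, float] = (0.0, 0.0)
    fire: bool = False


@dataclass
class Agent:
    position: Tuple[float, float] = (0.0, 0.0)
    shots_fired: int = 0
    is_bot: bool = False
    # For bots, the AI writes its decisions here and nowhere else.
    ai_desires: InputDesires = field(default_factory=InputDesires)


def desires_from_player(raw: dict) -> InputDesires:
    """Translate raw player input (hypothetical keys) into InputDesires."""
    return InputDesires(move=(raw.get("x", 0.0), raw.get("y", 0.0)),
                        fire=raw.get("fire", False))


def gather_desires(agent: Agent, player_input: Optional[dict]) -> InputDesires:
    """The only place where bots and players take different paths."""
    if agent.is_bot:
        return agent.ai_desires
    return desires_from_player(player_input or {})


def apply_desires(agent: Agent, desires: InputDesires, speed: float, dt: float) -> None:
    """Shared execution: identical for bots and players."""
    agent.position = (agent.position[0] + desires.move[0] * speed * dt,
                      agent.position[1] + desires.move[1] * speed * dt)
    if desires.fire:
        agent.shots_fired += 1


def step(agent: Agent, player_input: Optional[dict], speed: float, dt: float) -> None:
    """One agent update: pick the input source, then run the shared path."""
    apply_desires(agent, gather_desires(agent, player_input), speed, dt)
```

Because `apply_desires` is the only function that mutates the agent, a bot and a player that produce the same InputDesires end up in the same state, and a bot cannot perform an action that a player could not.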

In addition to unifying the system architecture, this ensures AI agents are on the same footing as players, because they can only perform the same actions and interactions.
