Sensors

Introduction

The purpose of sensors is twofold:

- optimizing performance by centralizing data processing, so results are computed once and reused; and,

- emulating Players' ability to perceive information in their immediate surroundings.

This approach handles complexity gracefully and remains maintainable even when the behavior is extended.

Sensors save the processed information into the agent's blackboard or memory. This makes it available to the HFSM, which can read the saved data when making decisions and performing actions; reusing the stored results saves performance by avoiding the overhead of recalculating them.
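The sensor-to-blackboard flow described above can be sketched as follows. This is a minimal illustration in Python, not the FPS Template's API: `Blackboard`, `EyesSensorStub`, `hfsm_should_attack` and the `"target"` key are hypothetical names. It only shows that the sensor computes its result once while the HFSM reads the stored value as often as it needs.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


@dataclass
class Blackboard:
    """Hypothetical per-agent memory: sensors write, the HFSM reads."""
    entries: Dict[str, Any] = field(default_factory=dict)

    def set(self, key: str, value: Any) -> None:
        self.entries[key] = value

    def get(self, key: str, default: Any = None) -> Any:
        return self.entries.get(key, default)


class EyesSensorStub:
    """Hypothetical sensor that performs the expensive work once per update."""

    def __init__(self) -> None:
        self.evaluations = 0  # counts how often the heavy computation ran

    def update(self, blackboard: Blackboard, visible_enemies: list) -> None:
        self.evaluations += 1
        # Stand-in for raycasts etc.: pick the first visible enemy, if any.
        blackboard.set("target", visible_enemies[0] if visible_enemies else None)


def hfsm_should_attack(blackboard: Blackboard) -> bool:
    # The HFSM only reads the cached result; it never recomputes it.
    return blackboard.get("target") is not None


bb = Blackboard()
eyes = EyesSensorStub()
eyes.update(bb, ["enemy_1"])
# Several HFSM decisions reuse the same sensor result.
decisions = [hfsm_should_attack(bb) for _ in range(3)]
print(decisions, eyes.evaluations)  # [True, True, True] 1
```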

Note: Sensors usually encapsulate performance-heavy calculations such as raycasts or NavMesh checks. However, these calculations do not need to be performed every tick, which enables simple optimizations such as updating only a fraction of the sensors on each tick; for example, SensorEyes can be updated every 5th AI tick.
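Staggered sensor updates could look like the sketch below, assuming each sensor is given an update interval and an offset measured in AI ticks. `ScheduledSensor` and `sensors_to_update` are hypothetical helpers; the interval of 5 for SensorEyes follows the example above, while the SensorPickup interval and offset are made up for illustration.

```python
from typing import List


class ScheduledSensor:
    """Hypothetical wrapper: a sensor that only runs every `interval` AI ticks."""

    def __init__(self, name: str, interval: int, offset: int = 0) -> None:
        self.name = name
        self.interval = interval  # 5 -> update on every 5th AI tick
        self.offset = offset      # shifts which ticks the sensor runs on

    def is_due(self, tick: int) -> bool:
        return (tick + self.offset) % self.interval == 0


def sensors_to_update(sensors: List[ScheduledSensor], tick: int) -> List[str]:
    """Names of the sensors that should run their expensive checks this tick."""
    return [s.name for s in sensors if s.is_due(tick)]


sensors = [
    ScheduledSensor("SensorEyes", interval=5),
    ScheduledSensor("SensorPickup", interval=5, offset=2),
]
for tick in range(6):
    print(tick, sensors_to_update(sensors, tick))
# 0 ['SensorEyes']
# 1 []
# 2 []
# 3 ['SensorPickup']
# 4 []
# 5 ['SensorEyes']
```

Giving sensors different offsets spreads the expensive work across ticks instead of running every sensor on the same frame.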

Sensors are assets; this enables Designers to specify which sensors an AI has directly from the editor, without requiring multiple or different versions of an HFSM.

It is possible to have multiple versions of the same sensor for different agents or difficulty levels. For instance, an EasySensorEyes asset could set its maximum view distance for spotting an enemy to 20 meters, while a DifficultSensorEyes asset could have a much larger view distance and a wider field of view.
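Because only the data differs between EasySensorEyes and DifficultSensorEyes, both can share the same detection logic. The sketch below assumes a simple 2D distance and field-of-view check; `EyesConfig`, `in_view_cone`, the 50 m distance and both field-of-view angles are hypothetical values, and only the 20 m easy view distance comes from the example above.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec2 = Tuple[float, float]


@dataclass(frozen=True)
class EyesConfig:
    """Hypothetical data asset: same sensor logic, tuned per difficulty."""
    max_view_distance: float  # meters
    fov_degrees: float        # full width of the view cone


EASY_SENSOR_EYES = EyesConfig(max_view_distance=20.0, fov_degrees=90.0)
DIFFICULT_SENSOR_EYES = EyesConfig(max_view_distance=50.0, fov_degrees=140.0)


def in_view_cone(config: EyesConfig, agent_pos: Vec2, agent_forward: Vec2,
                 target_pos: Vec2) -> bool:
    """Distance + field-of-view test; agent_forward must be normalized."""
    dx, dy = target_pos[0] - agent_pos[0], target_pos[1] - agent_pos[1]
    dist = math.hypot(dx, dy)
    if dist > config.max_view_distance:
        return False
    if dist == 0.0:
        return True
    cos_angle = (dx * agent_forward[0] + dy * agent_forward[1]) / dist
    return cos_angle >= math.cos(math.radians(config.fov_degrees / 2))


agent, facing = (0.0, 0.0), (1.0, 0.0)
enemy = (30.0, 10.0)  # about 31.6 m away, about 18.4 degrees off-center
print(in_view_cone(EASY_SENSOR_EYES, agent, facing, enemy))       # False: beyond 20 m
print(in_view_cone(DIFFICULT_SENSOR_EYES, agent, facing, enemy))  # True
```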

Sensor Types

Each type of information has a sensor dedicated to "sensing" it. The sensors described in this entry are the ones included in the FPS Template by default. You are free to create as many additional sensor types as your game requires; examples include a cover sensor, a dropped weapon sensor, a dangerous object sensor (e.g. for a thrown grenade) or an interactable object sensor.

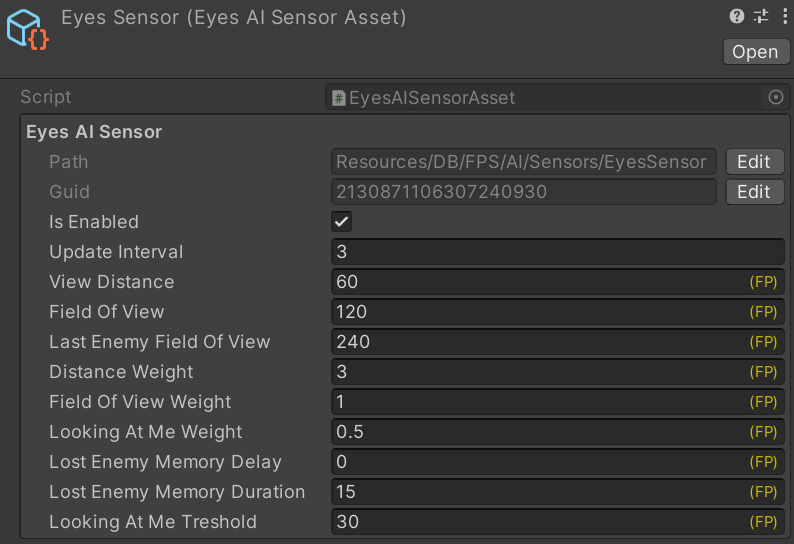
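A new sensor type can follow the same pattern as the built-in ones: read the world, then write the processed result into the agent's memory. The sketch below assumes a hypothetical `Sensor` base class with an `update` method and implements the thrown-grenade example as a hypothetical `DangerSensor`; none of these names come from the FPS Template.

```python
import math
from abc import ABC, abstractmethod
from typing import Any, Dict, List, Tuple

Vec2 = Tuple[float, float]


class Sensor(ABC):
    """Hypothetical base class: each sensor senses one kind of information."""

    @abstractmethod
    def update(self, agent_pos: Vec2, world: Dict[str, Any], memory: Dict[str, Any]) -> None:
        """Read the world and write the processed result into the agent's memory."""


class DangerSensor(Sensor):
    """Hypothetical custom sensor: remembers thrown grenades within a radius."""

    def __init__(self, danger_radius: float) -> None:
        self.danger_radius = danger_radius

    def update(self, agent_pos: Vec2, world: Dict[str, Any], memory: Dict[str, Any]) -> None:
        grenades: List[Vec2] = world.get("grenades", [])
        memory["danger_positions"] = [
            g for g in grenades if math.dist(agent_pos, g) <= self.danger_radius
        ]


def update_sensors(sensors: List[Sensor], agent_pos: Vec2,
                   world: Dict[str, Any], memory: Dict[str, Any]) -> None:
    # Sensors are iterated generically, so this loop does not change
    # when a new sensor type is added.
    for sensor in sensors:
        sensor.update(agent_pos, world, memory)


memory: Dict[str, Any] = {}
update_sensors([DangerSensor(danger_radius=5.0)], (0.0, 0.0),
               {"grenades": [(3.0, 4.0), (10.0, 0.0)]}, memory)
print(memory["danger_positions"])  # [(3.0, 4.0)]
```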

SensorEyes

SensorEyes is the most basic sensor. It is used to detect the best attack target (enemy) and to remember the last known location of an enemy that has been lost from sight. Targets are evaluated based on distance, position in the field of view, and rotation (whether or not they are looking at the AI agent). Designers can assign a weight to each of these metrics to control how strongly it influences the evaluation at runtime. The most expensive check in the procedure is the raycast; to optimize performance, targets are filtered beforehand so that only potentially visible ones are raycast.

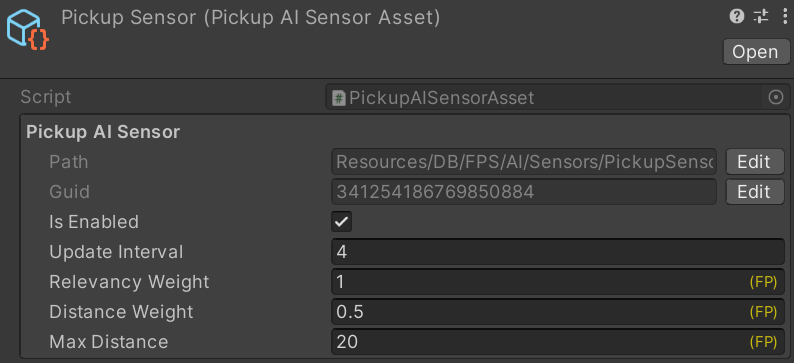
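The SensorEyes procedure (cheap filtering first, weighted scoring of distance, field-of-view position and facing, then raycasts only for the remaining candidates) can be sketched as below. The scoring formula, the `EyesWeights` fields and the `has_line_of_sight` callback standing in for the engine raycast are assumptions for illustration, not the template's actual implementation. Raycasting candidates in descending score order and stopping at the first visible one is one possible design choice.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Vec2 = Tuple[float, float]


@dataclass
class Target:
    name: str
    pos: Vec2
    forward: Vec2  # facing direction of the target


@dataclass
class EyesWeights:
    """Hypothetical designer-tunable weight per metric."""
    distance: float = 1.0
    fov: float = 1.0
    facing: float = 1.0


def cos_between(a: Vec2, b: Vec2) -> float:
    """Cosine of the angle between two vectors (1.0 if either has zero length)."""
    la, lb = math.hypot(*a), math.hypot(*b)
    if la == 0.0 or lb == 0.0:
        return 1.0
    return (a[0] * b[0] + a[1] * b[1]) / (la * lb)


def best_target(agent_pos: Vec2, agent_forward: Vec2, targets: List[Target],
                weights: EyesWeights, max_dist: float, fov_deg: float,
                has_line_of_sight: Callable[[Vec2, Vec2], bool]) -> Optional[Target]:
    half_fov_cos = math.cos(math.radians(fov_deg / 2))
    scored = []
    for t in targets:
        to_t = (t.pos[0] - agent_pos[0], t.pos[1] - agent_pos[1])
        d = math.hypot(*to_t)
        fov_score = cos_between(agent_forward, to_t)  # 1.0 = dead center
        # Cheap pre-filter: skip targets out of range or outside the view cone.
        if d > max_dist or fov_score < half_fov_cos:
            continue
        distance_score = 1.0 - d / max_dist  # closer = higher
        to_agent = (-to_t[0], -to_t[1])
        # 1.0 when the target looks straight at the agent, 0.0 when facing away.
        facing_score = (cos_between(t.forward, to_agent) + 1.0) / 2.0
        score = (weights.distance * distance_score + weights.fov * fov_score
                 + weights.facing * facing_score)
        scored.append((score, t))
    # Expensive raycast only for pre-filtered candidates, best score first.
    for _, t in sorted(scored, key=lambda st: st[0], reverse=True):
        if has_line_of_sight(agent_pos, t.pos):
            return t
    return None
```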

SensorPickup

SensorPickup chooses the "best" nearby pickup. It takes the relevancy of a pickup into consideration; for example, bots will not try to pick up a health pack if they are already at max health. Here too, Designers can specify weights to prioritize distance over relevancy or vice versa.
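A weighted pickup choice could look like this. The relevancy rule (missing health or ammo as a fraction of the maximum), the `AgentState` fields and the weight parameters are hypothetical; the sketch only illustrates how zero relevancy rules a pickup out and how the weights trade distance against relevancy.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Pickup:
    kind: str                 # "health" or "ammo" in this sketch
    pos: Tuple[float, float]


@dataclass
class AgentState:
    pos: Tuple[float, float]
    health: float
    max_health: float
    ammo: int
    max_ammo: int


def relevancy(agent: AgentState, pickup: Pickup) -> float:
    """Hypothetical rule: how much of the resource is missing, from 0.0 to 1.0."""
    if pickup.kind == "health":
        return 1.0 - agent.health / agent.max_health
    if pickup.kind == "ammo":
        return 1.0 - agent.ammo / agent.max_ammo
    return 0.0


def best_pickup(agent: AgentState, pickups: List[Pickup], w_distance: float,
                w_relevancy: float, max_dist: float) -> Optional[Pickup]:
    best, best_score = None, 0.0
    for p in pickups:
        r = relevancy(agent, p)
        d = math.dist(agent.pos, p.pos)
        if r <= 0.0 or d > max_dist:
            continue  # e.g. a health pack is ignored at max health
        score = w_distance * (1.0 - d / max_dist) + w_relevancy * r
        if score > best_score:
            best, best_score = p, score
    return best


pickups = [Pickup("health", (2.0, 0.0)), Pickup("ammo", (15.0, 0.0))]
full_health = AgentState((0.0, 0.0), 100.0, 100.0, 10, 50)
hurt = AgentState((0.0, 0.0), 40.0, 100.0, 10, 50)
print(best_pickup(full_health, pickups, 1.0, 1.0, 20.0).kind)  # ammo
print(best_pickup(hurt, pickups, 1.0, 1.0, 20.0).kind)         # health
print(best_pickup(hurt, pickups, 0.0, 1.0, 20.0).kind)         # ammo (relevancy only)
```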

SensorDamage

SensorDamage listens to the OnDamage() signal and writes a damage record into the agent's memory. Damage dealt by the same instigator is aggregated over time, which allows the agent to react to its damage memory only after a certain amount of damage has been inflicted.

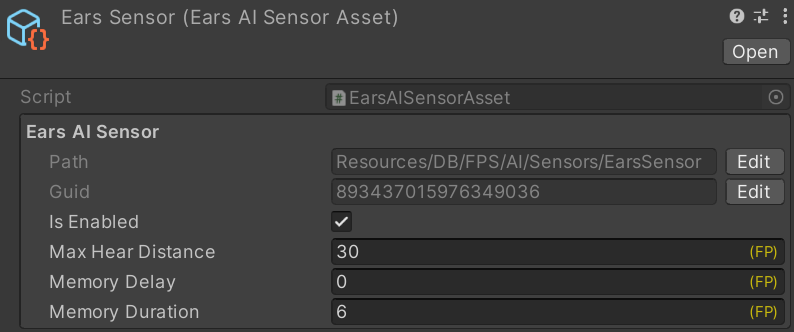
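Per-instigator aggregation with a reaction threshold can be sketched as follows. The time window, the threshold and the `DamageMemory` class are hypothetical; `on_damage` stands in for whatever handler is subscribed to the OnDamage() signal.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple


class DamageMemory:
    """Hypothetical per-agent damage record, aggregated per instigator."""

    def __init__(self, window: float) -> None:
        self.window = window  # seconds a hit counts toward the total
        self.hits: DefaultDict[str, List[Tuple[float, float]]] = defaultdict(list)

    def on_damage(self, instigator: str, amount: float, time: float) -> None:
        # Stand-in for the handler subscribed to the OnDamage() signal.
        self.hits[instigator].append((time, amount))

    def total_from(self, instigator: str, now: float) -> float:
        # Forget hits older than the window, then sum the rest.
        recent = [(t, a) for t, a in self.hits[instigator] if now - t <= self.window]
        self.hits[instigator] = recent
        return sum(a for _, a in recent)

    def should_react(self, instigator: str, now: float, threshold: float) -> bool:
        return self.total_from(instigator, now) >= threshold


memory = DamageMemory(window=3.0)
for t_now in (0.0, 1.0, 2.0):
    memory.on_damage("enemy_1", 10.0, t_now)
    print(t_now, memory.should_react("enemy_1", now=t_now, threshold=25.0))
# 0.0 False
# 1.0 False
# 2.0 True
print(memory.should_react("enemy_1", now=4.5, threshold=25.0))  # False: only the hit at 2.0 remains
```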

SensorEars

SensorEars listens to the OnWeaponFired() signal and records in memory whether the "heard" shot was fired by a friendly or a hostile.
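The friendly/hostile classification performed by SensorEars can be sketched like this, assuming agents are identified by a team number. `EarsSensor`, `HeardShot`, `on_weapon_fired` and `last_hostile_shot` are hypothetical names; `on_weapon_fired` stands in for the handler of the OnWeaponFired() signal.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class HeardShot:
    position: Tuple[float, float]
    time: float
    hostile: bool


@dataclass
class EarsSensor:
    """Hypothetical SensorEars: remembers gunfire and whether it was hostile."""
    team: int
    memory: List[HeardShot] = field(default_factory=list)

    def on_weapon_fired(self, shooter_team: int, position: Tuple[float, float],
                        time: float) -> None:
        # Stand-in for the handler subscribed to the OnWeaponFired() signal.
        hostile = shooter_team != self.team
        self.memory.append(HeardShot(position, time, hostile))

    def last_hostile_shot(self) -> Optional[HeardShot]:
        hostile_shots = [s for s in self.memory if s.hostile]
        return hostile_shots[-1] if hostile_shots else None


ears = EarsSensor(team=1)
ears.on_weapon_fired(shooter_team=2, position=(12.0, 3.0), time=1.0)
ears.on_weapon_fired(shooter_team=1, position=(4.0, 0.0), time=1.5)
print([shot.hostile for shot in ears.memory])  # [True, False]
print(ears.last_hostile_shot().position)      # (12.0, 3.0)
```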