VR Host

Overview

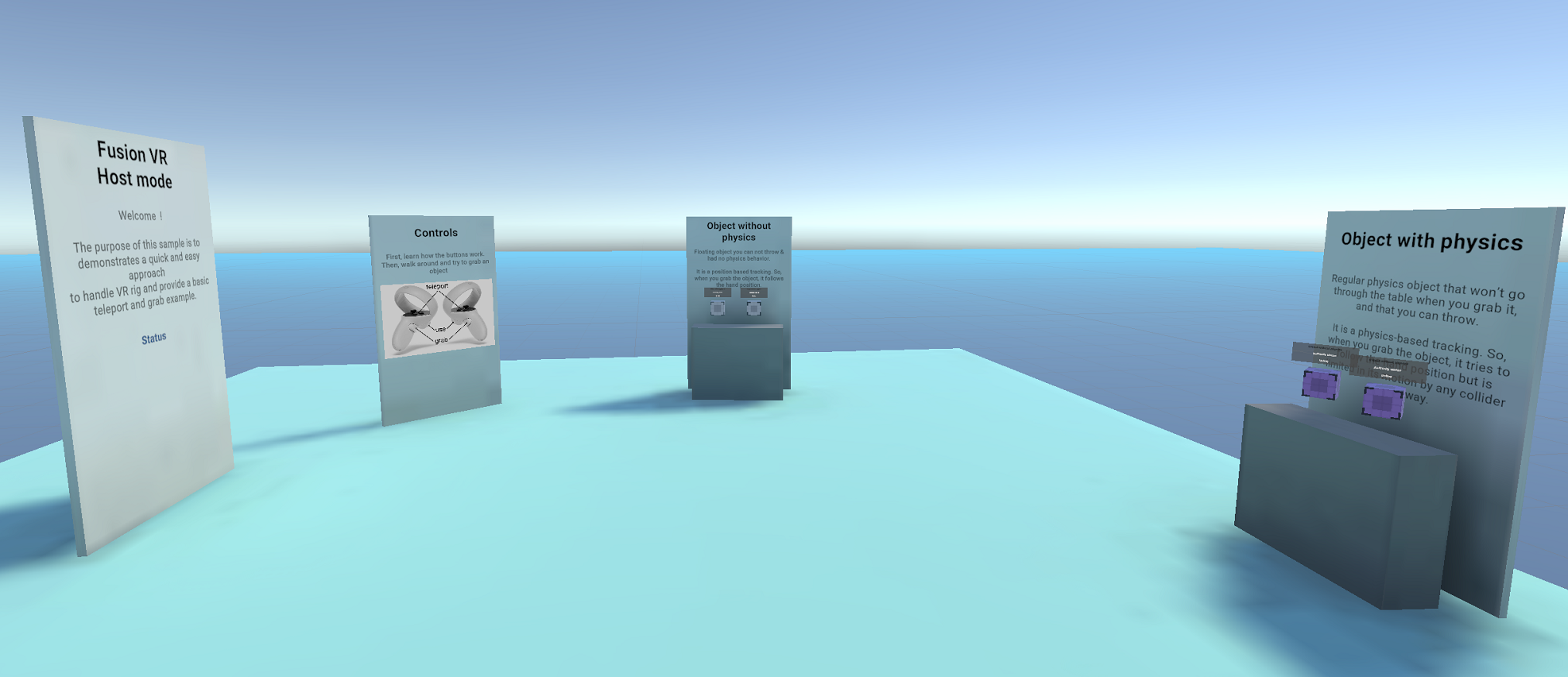

Fusion VR Host demonstrates a quick and easy approach to start multiplayer games or applications with VR.

The choice between Shared and Host/Server topologies should be driven by the specific needs of your game. In this sample, the Host mode is used.

The purpose of this sample is to clarify how to handle a VR rig and to provide a basic teleport and grab example.

Before you start

- The project has been developed with Unity 2021.3.29f1 and Fusion 1.1.8

- To run the sample, first create a Fusion AppId in the PhotonEngine Dashboard and paste it into the App Id Fusion field in Real Time Settings (reachable from the Fusion menu). Then load the Launch scene and press Play.

Download

| Version | Release Date | Download |
|---|---|---|
| 1.1.8 | Sep 21, 2023 | Fusion VR Host 1.1.8 Build 278 |

Handling Input

Meta Quest

- Teleport: press A, B, X, Y, or any stick to display a pointer. On release, you teleport to the pointed target if it is an accepted one.

- Grab: first put your hand over the object, then grab it using the controller grab button.

Mouse

A basic desktop rig is included in the project, so basic interactions are also available using the mouse.

- Move: left click with your mouse to display a pointer. On release, you teleport to the pointed target if it is an accepted one.

- Rotate: keep the right mouse button pressed and move the mouse to rotate the point of view.

- Grab: left click with your mouse on an object to grab it.

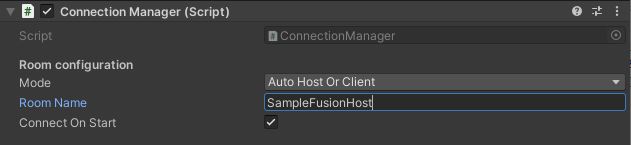

Connection Manager

The NetworkRunner is installed on the Connection Manager game object.

The Connection Manager is in charge of configuring the game settings and starting the connection.

C#

private async void Start()

{

// Launch the connection at start

if (connectOnStart) await Connect();

}

public async Task Connect()

{

// Create the scene manager if it does not exist

if (sceneManager == null) sceneManager = gameObject.AddComponent<NetworkSceneManagerDefault>();

if (onWillConnect != null) onWillConnect.Invoke();

// Start or join (depends on gamemode) a session with a specific name

var args = new StartGameArgs()

{

GameMode = mode,

SessionName = roomName,

Scene = SceneManager.GetActiveScene().buildIndex,

SceneManager = sceneManager

};

await runner.StartGame(args);

}

Implementing INetworkRunnerCallbacks will allow Fusion NetworkRunner to interact with the Connection Manager class.

In this sample, the OnPlayerJoined callback is used to spawn the user prefab on the host when a player joins the session, and OnPlayerLeft to despawn it when that player leaves the session.

C#

public void OnPlayerJoined(NetworkRunner runner, PlayerRef player)

{

// The user's prefab has to be spawned by the host

if (runner.IsServer)

{

Debug.Log($"OnPlayerJoined {player.PlayerId}/Local id: ({runner.LocalPlayer.PlayerId})");

// We make sure to give the input authority to the connecting player for their user's object

NetworkObject networkPlayerObject = runner.Spawn(userPrefab, position: transform.position, rotation: transform.rotation, inputAuthority: player, (runner, obj) => {

});

// Keep track of the player avatars so we can remove it when they disconnect

_spawnedUsers.Add(player, networkPlayerObject);

}

}

// Despawn the user object upon disconnection

public void OnPlayerLeft(NetworkRunner runner, PlayerRef player)

{

// Find and remove the players avatar (only the host would have stored the spawned game object)

if (_spawnedUsers.TryGetValue(player, out NetworkObject networkObject))

{

runner.Despawn(networkObject);

_spawnedUsers.Remove(player);

}

}

Please check that "Auto Host or Client" is selected on the Connection Manager game object in Unity.

Rigs

Overview

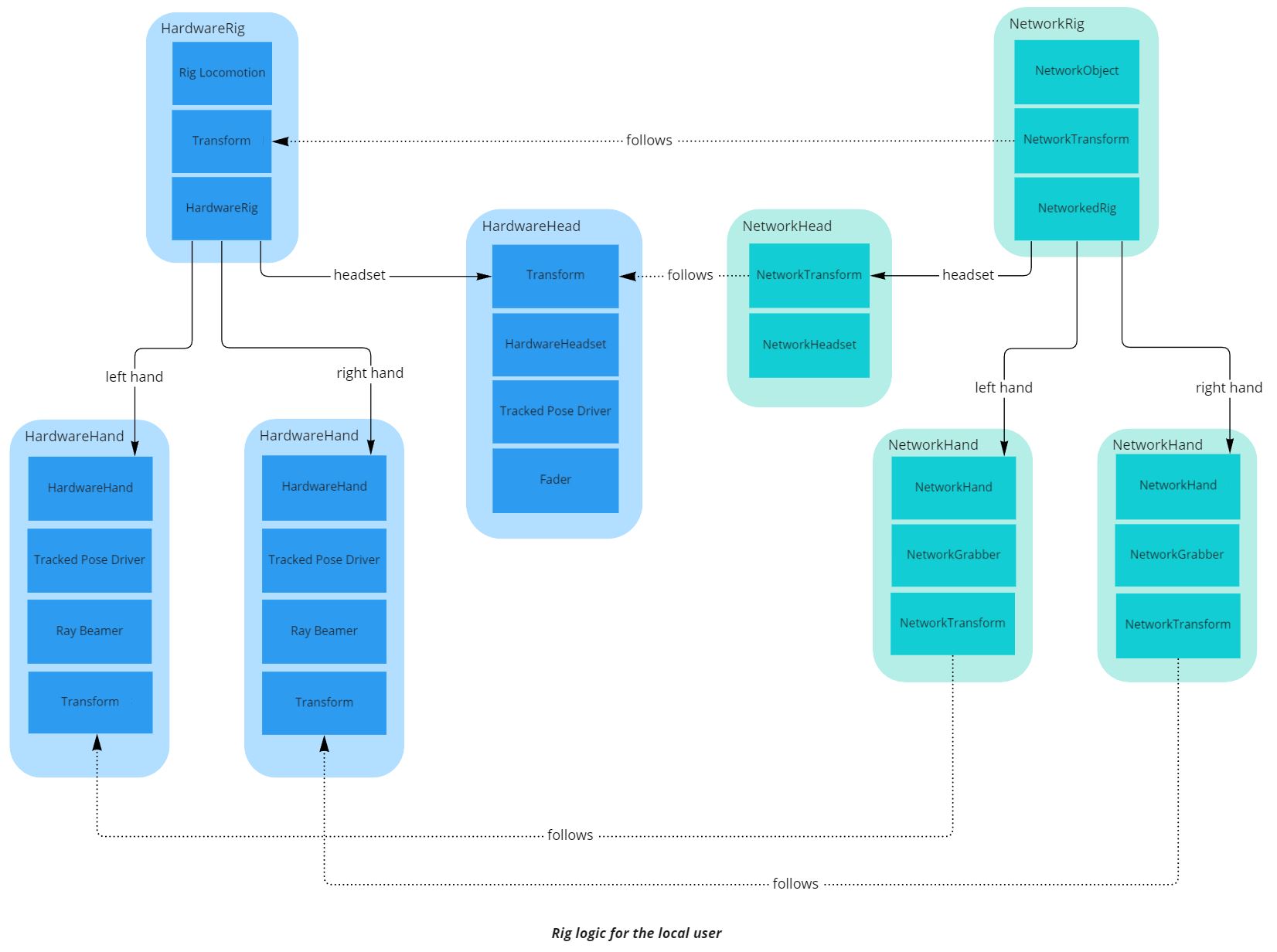

In an immersive application, the rig describes all the mobile parts that are required to represent a user, usually both hands, a head, and the play area (the personal space that can be moved, when a user teleports for instance).

While in a networked session, every user is represented by a networked rig, whose parts' positions are synchronized over the network.

Several architectures are possible, and valid, regarding how the rig parts are organized and synchronized. Here, a user is represented by a single NetworkObject, with several nested NetworkTransforms, one for each rig part.

Regarding the specific case of the network rig representing the local user, this rig has to be driven by the hardware inputs. To simplify this process, a separate, non-networked rig has been created, called the “Hardware rig”. It uses classic Unity components to collect the hardware inputs (like TrackedPoseDriver).

Details

Rig

All the parameters driving the rig (its position in space and the pose of the hands) are included in the RigInput structure.

Information related to grabbed objects is also included in this structure.

C#

public struct RigInput : INetworkInput

{

public Vector3 playAreaPosition;

public Quaternion playAreaRotation;

public Vector3 leftHandPosition;

public Quaternion leftHandRotation;

public Vector3 rightHandPosition;

public Quaternion rightHandRotation;

public Vector3 headsetPosition;

public Quaternion headsetRotation;

public HandCommand leftHandCommand;

public HandCommand rightHandCommand;

public GrabInfo leftGrabInfo;

public GrabInfo rightGrabInfo;

}

The HardwareRig class updates the structure when the Fusion NetworkRunner polls for user inputs. To do so, it collects input parameters from the various hardware rig parts.

C#

public void OnInput(NetworkRunner runner, NetworkInput input)

{

// Prepare the input, that will be read by NetworkRig in the FixedUpdateNetwork

RigInput rigInput = new RigInput();

rigInput.playAreaPosition = transform.position;

rigInput.playAreaRotation = transform.rotation;

rigInput.leftHandPosition = leftHand.transform.position;

rigInput.leftHandRotation = leftHand.transform.rotation;

rigInput.rightHandPosition = rightHand.transform.position;

rigInput.rightHandRotation = rightHand.transform.rotation;

rigInput.headsetPosition = headset.transform.position;

rigInput.headsetRotation = headset.transform.rotation;

rigInput.leftHandCommand = leftHand.handCommand;

rigInput.rightHandCommand = rightHand.handCommand;

rigInput.leftGrabInfo = leftHand.grabber.GrabInfo;

rigInput.rightGrabInfo = rightHand.grabber.GrabInfo;

input.Set(rigInput);

}

Then, the networked rig associated with the user who sent those inputs receives them: both the host (as the state authority) and the user who sent them (as the input authority) receive them. Other users do not (they are proxies here).

This happens in the NetworkRig component, located on the user prefab, during FixedUpdateNetwork() (FUN), through GetInput (which only returns the input for the state and input authorities).

During the FUN, each networked rig part is configured to simply follow the input parameters coming from the matching hardware rig part.

In Host mode, once the host has handled the inputs, they can be forwarded to the proxies so that they can replicate the users' movements. This is either:

- handled through [Networked] variables (for the hand pose and grabbing info): when the state authority (the host) changes the value of a networked variable, this value is replicated to each user

- or, regarding the positions and rotations, handled by the NetworkTransform components on the state authority (the host), which handle the replication to other users.

C#

// As we are in host topology, we use the input authority to track which player is the local user

public bool IsLocalNetworkRig => Object.HasInputAuthority;

public override void Spawned()

{

base.Spawned();

if (IsLocalNetworkRig)

{

hardwareRig = FindObjectOfType<HardwareRig>();

if (hardwareRig == null) Debug.LogError("Missing HardwareRig in the scene");

}

}

public override void FixedUpdateNetwork()

{

base.FixedUpdateNetwork();

// update the rig at each network tick

if (GetInput<RigInput>(out var input))

{

transform.position = input.playAreaPosition;

transform.rotation = input.playAreaRotation;

leftHand.transform.position = input.leftHandPosition;

leftHand.transform.rotation = input.leftHandRotation;

rightHand.transform.position = input.rightHandPosition;

rightHand.transform.rotation = input.rightHandRotation;

headset.transform.position = input.headsetPosition;

headset.transform.rotation = input.headsetRotation;

// we update the hand pose info. It will trigger on network hands OnHandCommandChange on all clients, and update the hand representation accordingly

leftHand.HandCommand = input.leftHandCommand;

rightHand.HandCommand = input.rightHandCommand;

leftGrabber.GrabInfo = input.leftGrabInfo;

rightGrabber.GrabInfo = input.rightGrabInfo;

}

}

Aside from moving the network rig parts during FixedUpdateNetwork(), the NetworkRig component also handles the local extrapolation: during Render(), for the local user having input authority on this object, the interpolation targets, which handle the graphical representation of the various rig parts’ NetworkTransforms, are moved using the most recent local hardware rig part data.

This ensures that the local user always sees the most up-to-date positions for their own hands (to avoid potential unease), even if the screen refresh rate is higher than the network tick rate.

The [OrderAfter] attribute before the class ensures that the NetworkRig Render() is called after the NetworkTransform methods, so that NetworkRig can override the original handling of the interpolation target.

C#

public override void Render()

{

base.Render();

if (IsLocalNetworkRig)

{

// Extrapolate for local user :

// we want to have the visual at the good position as soon as possible, so we force the visuals to follow the most fresh hardware positions

// To update the visual object, and not the actual networked position, we move the interpolation targets

networkTransform.InterpolationTarget.position = hardwareRig.transform.position;

networkTransform.InterpolationTarget.rotation = hardwareRig.transform.rotation;

leftHand.networkTransform.InterpolationTarget.position = hardwareRig.leftHand.transform.position;

leftHand.networkTransform.InterpolationTarget.rotation = hardwareRig.leftHand.transform.rotation;

rightHand.networkTransform.InterpolationTarget.position = hardwareRig.rightHand.transform.position;

rightHand.networkTransform.InterpolationTarget.rotation = hardwareRig.rightHand.transform.rotation;

headset.networkTransform.InterpolationTarget.position = hardwareRig.headset.transform.position;

headset.networkTransform.InterpolationTarget.rotation = hardwareRig.headset.transform.rotation;

}

}

Headset

The NetworkHeadset class is very simple: it provides access to the headset NetworkTransform for the NetworkRig class.

C#

public class NetworkHeadset : NetworkBehaviour

{

[HideInInspector]

public NetworkTransform networkTransform;

private void Awake()

{

if (networkTransform == null) networkTransform = GetComponent<NetworkTransform>();

}

}

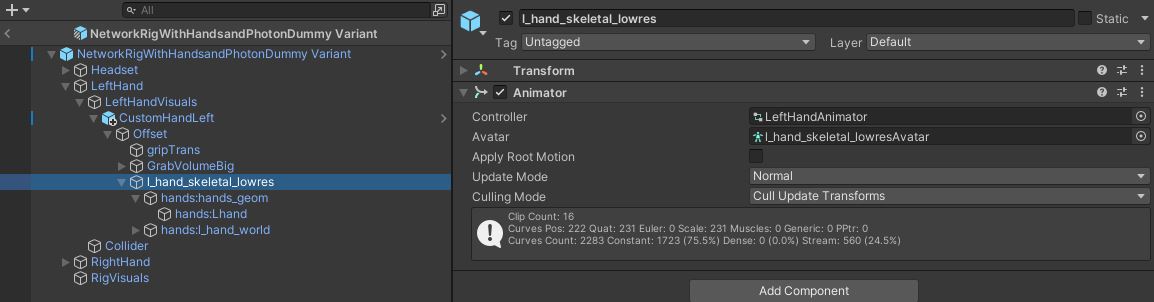

Hands

Like the NetworkHeadset class, the NetworkHand class provides access to the hand NetworkTransform for the NetworkRig class.

To synchronize the hand pose, a network structure called HandCommand has been created in the HardwareHand class.

C#

// Structure representing the inputs driving a hand pose

[System.Serializable]

public struct HandCommand : INetworkStruct

{

public float thumbTouchedCommand;

public float indexTouchedCommand;

public float gripCommand;

public float triggerCommand;

// Optional commands

public int poseCommand;

public float pinchCommand; // Can be computed from triggerCommand by default

}

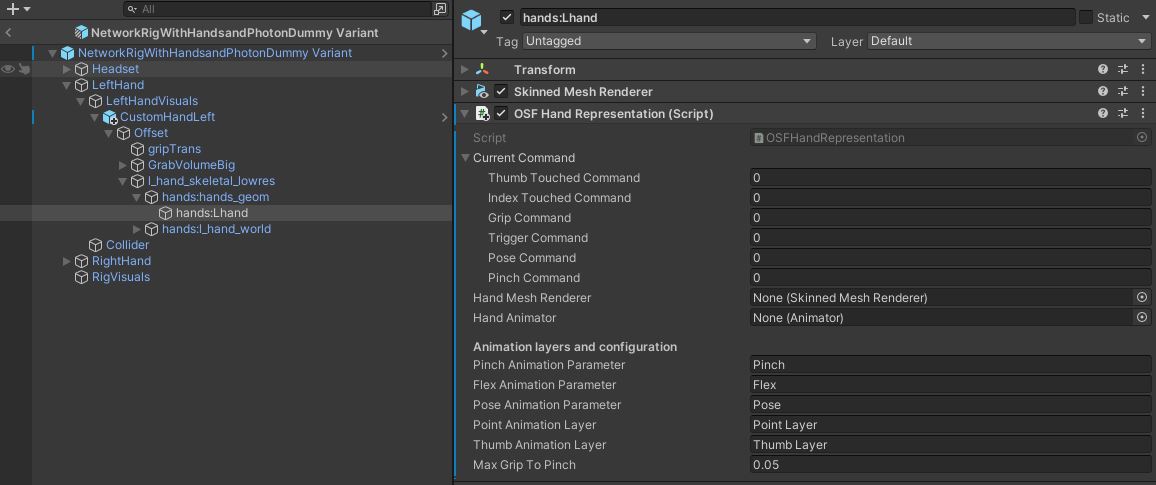

This HandCommand structure is used in the IHandRepresentation interface, which sets various hand properties including the hand pose. The NetworkHand can have a child IHandRepresentation, to which it forwards the hand pose data.

C#

public interface IHandRepresentation

{

public void SetHandCommand(HandCommand command);

public GameObject gameObject { get; }

public void SetHandColor(Color color);

public void SetHandMaterial(Material material);

public void DisplayMesh(bool shouldDisplay);

public bool IsMeshDisplayed { get; }

}

The OSFHandRepresentation class, located on each hand, implements this interface in order to modify the fingers' positions using the provided hand animator (ApplyCommand(HandCommand command) function).

Now, let's see how it is synchronized.

The HandCommand structure is updated with the fingers’ positions in the Update() method of the HardwareHand.

C#

protected virtual void Update()

{

// update hand pose

handCommand.thumbTouchedCommand = thumbAction.action.ReadValue<float>();

handCommand.indexTouchedCommand = indexAction.action.ReadValue<float>();

handCommand.gripCommand = gripAction.action.ReadValue<float>();

handCommand.triggerCommand = triggerAction.action.ReadValue<float>();

handCommand.poseCommand = handPose;

handCommand.pinchCommand = 0;

// update hand interaction

isGrabbing = grabAction.action.ReadValue<float>() > grabThreshold;

}

At each NetworkRig FixedUpdateNetwork(), the hand pose data is updated on the local user, along with the other rig inputs.

C#

public override void FixedUpdateNetwork()

{

base.FixedUpdateNetwork();

// update the rig at each network tick

if (GetInput<RigInput>(out var input))

{

transform.position = input.playAreaPosition;

transform.rotation = input.playAreaRotation;

leftHand.transform.position = input.leftHandPosition;

leftHand.transform.rotation = input.leftHandRotation;

rightHand.transform.position = input.rightHandPosition;

rightHand.transform.rotation = input.rightHandRotation;

headset.transform.position = input.headsetPosition;

headset.transform.rotation = input.headsetRotation;

// we update the hand pose info. It will trigger on network hands OnHandCommandChange on each client, and update the hand representation accordingly

leftHand.HandCommand = input.leftHandCommand;

rightHand.HandCommand = input.rightHandCommand;

leftGrabber.GrabInfo = input.leftGrabInfo;

rightGrabber.GrabInfo = input.rightGrabInfo;

}

}

The NetworkHand component, located on each hand of the user prefab, manages the hand representation update.

To do so, the class contains a HandCommand networked structure.

C#

[Networked(OnChanged = nameof(OnHandCommandChange))]

public HandCommand HandCommand { get; set; }

As the HandCommand is a networked var, the OnHandCommandChange() callback is called for each player every time the networked structure is changed by the state authority (the host), and updates the hand representation accordingly.

C#

public static void OnHandCommandChange(Changed<NetworkHand> changed)

{

// Will be called on all clients when the local user changes the hand pose structure

// We trigger here the actual animation update

changed.Behaviour.UpdateHandRepresentationWithNetworkState();

}

C#

void UpdateHandRepresentationWithNetworkState()

{

if (handRepresentation != null) handRepresentation.SetHandCommand(HandCommand);

}

Similarly to what NetworkRig does for the rig part positions, during Render(), NetworkHand also handles the extrapolation and update of the hand pose, using the local hardware hands.

C#

public override void Render()

{

base.Render();

if (IsLocalNetworkRig)

{

// Extrapolate for local user : we want to have the visual at the good position as soon as possible, so we force the visuals to follow the most fresh hand pose

UpdateRepresentationWithLocalHardwareState();

}

}

C#

void UpdateRepresentationWithLocalHardwareState()

{

if (handRepresentation != null) handRepresentation.SetHandCommand(LocalHardwareHand.handCommand);

}

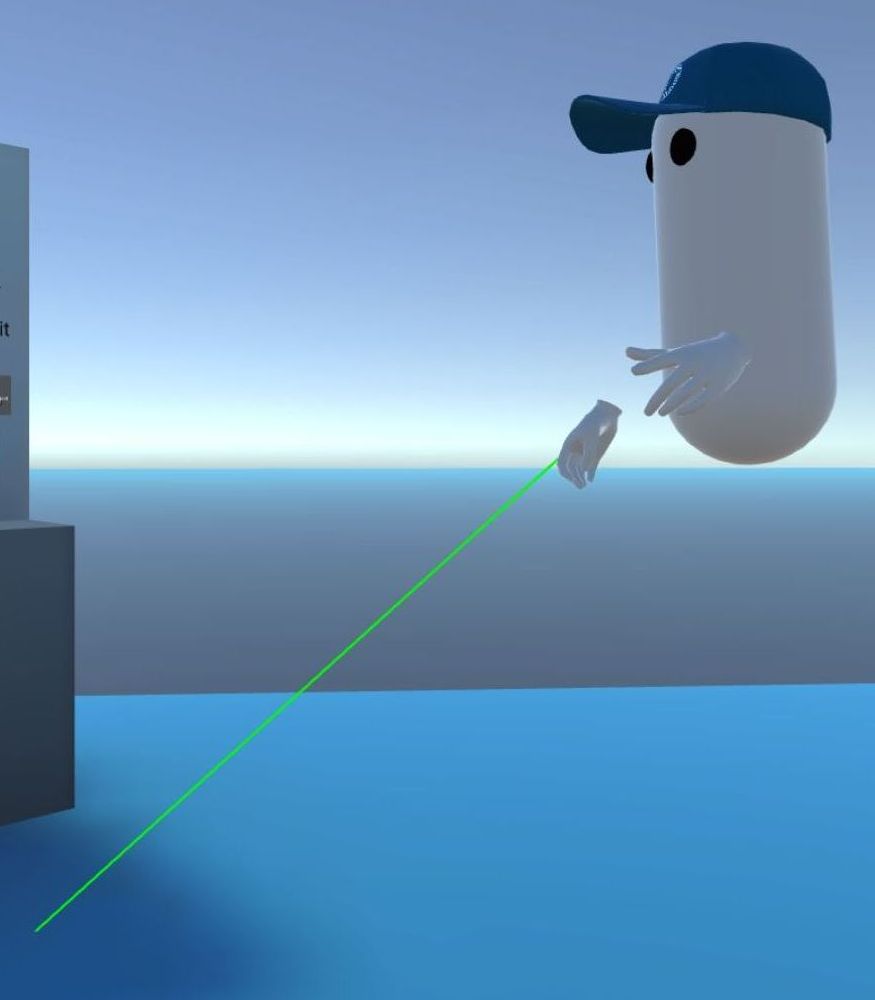

Teleport & locomotion

The RayBeamer class located on each hardware rig hand is in charge of displaying a ray when the user pushes a button. When the user releases the button, if the ray target is valid, then an event is triggered.

C#

if (onRelease != null) onRelease.Invoke(lastHitCollider, lastHit);

This event is listened to by the RigLocomotion class located on the hardware rig.

C#

beamer.onRelease.AddListener(OnBeamRelease);

Then, it calls the rig teleport coroutine…

C#

protected virtual void OnBeamRelease(Collider lastHitCollider, Vector3 position)

{

if (ValidLocomotionSurface(lastHitCollider))

{

StartCoroutine(rig.FadedTeleport(position));

}

}

This coroutine updates the hardware rig position, and asks a Fader component available on the hardware headset to fade the view in and out during the teleport (to avoid cybersickness).

C#

public virtual IEnumerator FadedTeleport(Vector3 position)

{

if (headset.fader) yield return headset.fader.FadeIn();

Teleport(position);

if (headset.fader) yield return headset.fader.WaitBlinkDuration();

if (headset.fader) yield return headset.fader.FadeOut();

}

public virtual void Teleport(Vector3 position)

{

Vector3 headsetOffet = headset.transform.position - transform.position;

headsetOffet.y = 0;

transform.position = position - headsetOffet;

}
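The offset arithmetic in Teleport() can be checked outside Unity. The following is a minimal sketch, assuming System.Numerics.Vector3 as a stand-in for UnityEngine.Vector3 (its components are named X, Y, Z instead of x, y, z); the TeleportMath class and its method name are hypothetical and are not part of the sample.

```csharp
using System.Numerics;

// Engine-independent sketch of the Teleport() arithmetic (hypothetical helper, not in the sample).
public static class TeleportMath
{
    // Returns the new rig (play area) position such that the headset ends up
    // directly above `target`, whatever its current offset inside the play area.
    public static Vector3 RigPositionAfterTeleport(Vector3 rigPosition, Vector3 headsetPosition, Vector3 target)
    {
        Vector3 headsetOffset = headsetPosition - rigPosition;
        headsetOffset.Y = 0; // only the horizontal offset is compensated, the rig stays on the target surface
        return target - headsetOffset;
    }
}
```

With a rig at the origin, a headset at (0.5, 1.7, -0.2) and a target at (3, 0, 4), the rig moves so that the headset ends up horizontally over the target while keeping its height above the floor.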

As seen previously, this modification of the hardware rig position will be synchronized over the network thanks to the OnInput callback.

The same strategy applies for the rig rotation where CheckSnapTurn() triggers a rig modification.

C#

IEnumerator Rotate(float angle)

{

timeStarted = Time.time;

rotating = true;

yield return rig.FadedRotate(angle);

rotating = false;

}

public virtual IEnumerator FadedRotate(float angle)

{

if (headset.fader) yield return headset.fader.FadeIn();

Rotate(angle);

if (headset.fader) yield return headset.fader.WaitBlinkDuration();

if (headset.fader) yield return headset.fader.FadeOut();

}

public virtual void Rotate(float angle)

{

transform.RotateAround(headset.transform.position, transform.up, angle);

}
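Rotating the rig around the headset position, rather than around the rig origin, keeps the user's head at the same place in the world during a snap turn. The following is a minimal sketch of that property, assuming System.Numerics types in place of Unity's, and assuming the rotation axis is the world up axis (the sample uses transform.up); the SnapTurnMath class is hypothetical.

```csharp
using System;
using System.Numerics;

// Engine-independent sketch of a rotation around the headset (hypothetical helper, not in the sample).
public static class SnapTurnMath
{
    // Rotates the rig pose by `angleDegrees` around a vertical axis passing through the headset.
    public static (Vector3 position, Quaternion rotation) RotateAroundHeadset(
        Vector3 rigPosition, Quaternion rigRotation, Vector3 headsetPosition, float angleDegrees)
    {
        Quaternion turn = Quaternion.CreateFromAxisAngle(Vector3.UnitY, angleDegrees * MathF.PI / 180f);
        // Rotate the rig origin around the pivot, then apply the same turn to the rig orientation
        Vector3 newPosition = headsetPosition + Vector3.Transform(rigPosition - headsetPosition, turn);
        Quaternion newRotation = turn * rigRotation;
        return (newPosition, newRotation);
    }
}
```

Recomputing the headset world position from its unchanged local offset and the new rig pose gives back the original headset position, which is why the view only turns and does not shift.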

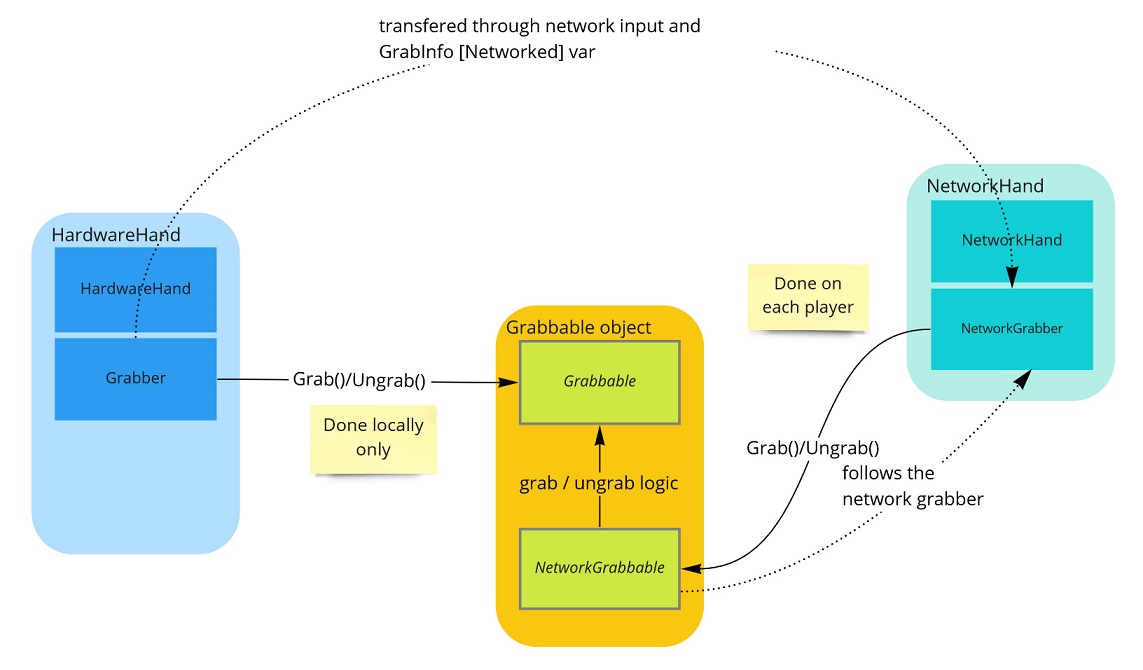

Grabbing

Overview

The grabbing logic here is split into two parts:

- the local, non-networked part, which detects the actual grabbing and ungrabbing when the hardware hand has triggered a grab action over a grabbable object (Grabber and Grabbable classes)

- the networked part, which ensures that all players are aware of the grabbing status, and which manages the actual position change to follow the grabbing hand (NetworkGrabber and NetworkGrabbable classes).

Note: the code contains a few lines to allow the local part to manage the following itself when used offline, for instance in use cases where the same components are used for an offline lobby. But this document will focus on the actual networked usages.

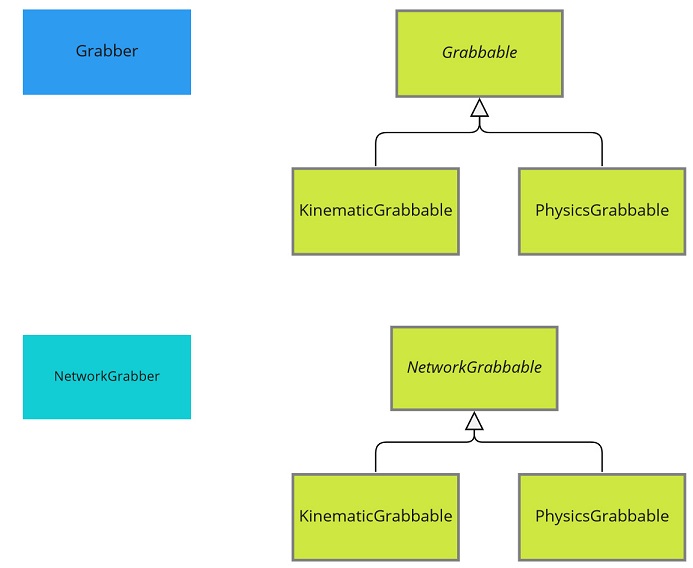

Two different kinds of grabbing are available in this sample (Grabbable and NetworkGrabbable are abstract classes, with subclasses implementing each specific logic):

- grabbing for kinematic objects: their position simply follows the position of the grabbing hand. They cannot have physics interactions with other objects. Implemented in the KinematicGrabbable and NetworkKinematicGrabbable classes.

- grabbing for physics objects: their velocity is changed so that they follow the grabbing hand. They can have physics interactions with other objects, and can be launched. This implementation requires the server physics mode to be set to client-side prediction. Implemented in the PhysicsGrabbable and NetworkPhysicsGrabbable classes.

Note: Even though it is possible to give kinematic objects a release velocity (to launch them), this was not added in this sample, as it would require additional code (while this separate kind of grab is here to demonstrate a very simple code base for simple grab use cases), and as the physics grabbing, also provided here, gives a more logical implementation and more accurate results for this kind of use case.

Details

The HardwareHand class, located on each hand, updates the isGrabbing bool at each update: the bool is true when the user presses the grip button.

Note that the updateGrabWithAction bool is used to support the desktop rig, a version of the rig that can be driven with the mouse and keyboard (this bool must be set to False for desktop mode, and True for VR mode).

C#

protected virtual void Update()

{

// update hand pose

handCommand.thumbTouchedCommand = thumbAction.action.ReadValue<float>();

handCommand.indexTouchedCommand = indexAction.action.ReadValue<float>();

handCommand.gripCommand = gripAction.action.ReadValue<float>();

handCommand.triggerCommand = triggerAction.action.ReadValue<float>();

handCommand.poseCommand = handPose;

handCommand.pinchCommand = 0;

// update hand interaction

if(updateGrabWithAction) isGrabbing = grabAction.action.ReadValue<float>() > grabThreshold;

}

To detect collisions with grabbable objects, a simple box collider is located on each hardware hand, used by a Grabber component placed on this hand: when a collision occurs, the method OnTriggerStay() is called.

Note that in host topology, some ticks are forward ticks (actual new ticks), while others are resimulations (replaying past instants). Grabbing and ungrabbing should only be detected during forward ticks, which correspond to the current positions. So OnTriggerStay() returns early during resimulation ticks.

C#

private void OnTriggerStay(Collider other)

{

if (rig && rig.runner && rig.runner.IsResimulation)

{

// We only manage grabbing during forward ticks, to avoid detecting past positions of the grabbable object

return;

}

First, OnTriggerStay checks if an object is already grabbed. For simplification, multiple grabbing is not allowed in this sample.

C#

// Exit if an object is already grabbed

if (GrabbedObject != null)

{

// It is already the grabbed object or another, but we don't allow shared grabbing here

return;

}

Then it checks that:

- the collided object can be grabbed (it has a Grabbable component)

- the user presses the grip button

If these conditions are met, the grabbed object is asked to follow the hand thanks to the Grabbable Grab method.

C#

Grabbable grabbable;

if (lastCheckedCollider == other)

{

grabbable = lastCheckColliderGrabbable;

}

else

{

grabbable = other.GetComponentInParent<Grabbable>();

}

// To limit the number of GetComponent calls, we cache the latest checked collider grabbable result

lastCheckedCollider = other;

lastCheckColliderGrabbable = grabbable;

if (grabbable != null)

{

if (hand.isGrabbing) Grab(grabbable);

}

The Grabbable Grab() method stores the grabbing position offset

C#

public virtual void Grab(Grabber newGrabber)

{

// Find grabbable position/rotation in grabber referential

localPositionOffset = newGrabber.transform.InverseTransformPoint(transform.position);

localRotationOffset = Quaternion.Inverse(newGrabber.transform.rotation) * transform.rotation;

currentGrabber = newGrabber;

}

Similarly, when the object is no longer grabbed, the Grabbable Ungrab() call stores some details about the object.

C#

public virtual void Ungrab()

{

currentGrabber = null;

if (networkGrabbable)

{

ungrabPosition = networkGrabbable.networkTransform.InterpolationTarget.transform.position;

ungrabRotation = networkGrabbable.networkTransform.InterpolationTarget.transform.rotation;

ungrabVelocity = Velocity;

ungrabAngularVelocity = AngularVelocity;

}

}

Note that depending on the grabbing type subclass actually used, some fields are not relevant (the ungrab positions are not used for physics grabbing for instance).

All this data about the grabbing (the network id of the grabbed object, the offset, the release velocity and position, if any) is then shared in the input transfer through the GrabInfo structure.

C#

// Store the info describing a grabbing state

public struct GrabInfo : INetworkStruct

{

public NetworkBehaviourId grabbedObjectId;

public Vector3 localPositionOffset;

public Quaternion localRotationOffset;

// We want the local user accurate ungrab position to be enforced on the network, and so shared in the input (to avoid the grabbable following "too long" the grabber)

public Vector3 ungrabPosition;

public Quaternion ungrabRotation;

public Vector3 ungrabVelocity;

public Vector3 ungrabAngularVelocity;

}

When building the input, the grabber is asked to provide the up-to-date grabbing info:

C#

public GrabInfo GrabInfo

{

get

{

if (grabbedObject)

{

_grabInfo.grabbedObjectId = grabbedObject.networkGrabbable.Id;

_grabInfo.localPositionOffset = grabbedObject.localPositionOffset;

_grabInfo.localRotationOffset = grabbedObject.localRotationOffset;

}

else

{

_grabInfo.grabbedObjectId = NetworkBehaviourId.None;

_grabInfo.ungrabPosition = ungrabPosition;

_grabInfo.ungrabRotation = ungrabRotation;

_grabInfo.ungrabVelocity = ungrabVelocity;

_grabInfo.ungrabAngularVelocity = ungrabAngularVelocity;

}

return _grabInfo;

}

}

Then, when the host receives this input in NetworkRig, it stores the grabbing info in the NetworkGrabber GrabInfo [Networked] var.

There, on each client, during FixedUpdateNetwork(), the class checks whether the grabbing info has changed. This is done only in forward ticks, to avoid replaying the grab/ungrab during resimulations. It is done by calling HandleGrabInfoChange, which compares the previous and current grab status of the hand. When needed, it then triggers the actual Grab and Ungrab methods on the NetworkGrabbable.

To grab a new object, the method first finds the grabbed NetworkGrabbable by looking it up by its network id, with Object.Runner.TryFindBehaviour.

C#

void HandleGrabInfoChange(GrabInfo previousGrabInfo, GrabInfo newGrabInfo)

{

if (previousGrabInfo.grabbedObjectId != newGrabInfo.grabbedObjectId)

{

if (grabbedObject != null)

{

grabbedObject.Ungrab(newGrabInfo);

grabbedObject = null;

}

// We have to look for the grabbed object as it has changed

NetworkGrabbable newGrabbedObject;

// If an object is grabbed, we look for it through the runner with its Id

if (newGrabInfo.grabbedObjectId != NetworkBehaviourId.None && Object.Runner.TryFindBehaviour(newGrabInfo.grabbedObjectId, out newGrabbedObject))

{

grabbedObject = newGrabbedObject;

if (grabbedObject != null)

{

grabbedObject.Grab(this, newGrabInfo);

}

}

}

}

The actual network grabbing, ungrabbing, and following of the network grabber differ depending on which grabbing type was chosen.

Kinematic grabbing type

Follow

For the kinematic grabbing type, following the current grabber is simply teleporting to its actual position

C#

public void Follow(Transform followingtransform, Transform followedTransform)

{

followingtransform.position = followedTransform.TransformPoint(localPositionOffset);

followingtransform.rotation = followedTransform.rotation * localRotationOffset;

}
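The offsets stored in Grab() and the Follow() method above are inverse operations: the offsets express the object pose in the hand's local frame, and Follow() rebuilds the world pose from the current hand pose. The following is a minimal sketch of that round trip, assuming System.Numerics types instead of Unity's and a hand with unit scale (Unity's InverseTransformPoint and TransformPoint also account for scale); the GrabOffsetMath class is hypothetical.

```csharp
using System.Numerics;

// Engine-independent sketch of the grab offset round trip (hypothetical helper, not in the sample).
public static class GrabOffsetMath
{
    // Counterpart of Grab(): express the object pose in the hand's local frame.
    public static (Vector3 localPositionOffset, Quaternion localRotationOffset) ComputeOffsets(
        Vector3 handPosition, Quaternion handRotation, Vector3 objectPosition, Quaternion objectRotation)
    {
        Quaternion inverseHand = Quaternion.Inverse(handRotation);
        Vector3 localPositionOffset = Vector3.Transform(objectPosition - handPosition, inverseHand);
        Quaternion localRotationOffset = inverseHand * objectRotation;
        return (localPositionOffset, localRotationOffset);
    }

    // Counterpart of Follow(): rebuild the object world pose from the current hand pose.
    public static (Vector3 position, Quaternion rotation) Follow(
        Vector3 handPosition, Quaternion handRotation, Vector3 localPositionOffset, Quaternion localRotationOffset)
    {
        Vector3 position = handPosition + Vector3.Transform(localPositionOffset, handRotation);
        Quaternion rotation = handRotation * localRotationOffset;
        return (position, rotation);
    }
}
```

Calling Follow() with the hand pose used at grab time returns the original object pose, and translating the hand translates the object by the same amount, which is the behavior expected from a kinematic grab.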

FixedUpdateNetwork

When online, the following code is called during FixedUpdateNetwork calls

C#

public override void FixedUpdateNetwork()

{

// We only update the object position if we have the state authority

if (!Object.HasStateAuthority) return;

if (!IsGrabbed) return;

// Follow grabber, adding position/rotation offsets

grabbable.Follow(followingtransform: transform, followedTransform: currentGrabber.transform);

}

The position change is only done on the host (the state authority), and then the NetworkTransform ensures that all players receive the position updates.

Render

Regarding the extrapolation, done during Render() (the NetworkKinematicGrabbable class has an OrderAfter directive to override the NetworkTransform interpolation if needed), two cases have to be handled here:

- extrapolation while the object is grabbed: the expected position of the object is known, as it should be at the hand position. So the grabbable visual (i.e. the NetworkTransform's interpolation target) has to be at the position of the hand visual.

- extrapolation when the object has just been ungrabbed: the network transform interpolation still does not match the extrapolation done while the object was grabbed. So for a short moment, the extrapolation has to continue (i.e. the object has to stay still, at its ungrab position), otherwise the object would jump slightly back in time.

C#

public override void Render()

{

if (IsGrabbed)

{

// Extrapolation: Make visual representation follow grabber visual representation, adding position/rotation offsets

// We extrapolate for all users: we know that the grabbed object should follow the grabber accurately, even if the network position might be a bit out of sync

grabbable.Follow(followingtransform: networkTransform.InterpolationTarget.transform, followedTransform: currentGrabber.networkTransform.InterpolationTarget.transform);

}

else if (grabbable.ungrabTime != -1)

{

if ((Time.time - grabbable.ungrabTime) < ungrabResyncDuration)

{

// When the local user just ungrabbed the object, the network transform interpolation is still not the same as the extrapolation

// we were doing while the object was grabbed. So for a few frames, we need to ensure that the extrapolation continues

// (ie. the object stay still)

// until the network transform offers the same visual conclusion that the one we used to do

// Other ways to determine this extended extrapolation duration do exist (based on interpolation distance, number of ticks, ...)

networkTransform.InterpolationTarget.transform.position = grabbable.ungrabPosition;

networkTransform.InterpolationTarget.transform.rotation = grabbable.ungrabRotation;

}

else

{

// We'll let the NetworkTransform do its normal interpolation again

grabbable.ungrabTime = -1;

}

}

}
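The branching in this Render() method amounts to choosing, for each frame, which source drives the visual. The following is a minimal sketch of that decision as a pure function, assuming -1 means "no pending ungrab" as in the code above; the RenderSource enum and the GrabRenderLogic class are hypothetical names introduced for illustration.

```csharp
// Engine-independent sketch of the Render() decision (hypothetical names, not in the sample).
public enum RenderSource
{
    FollowGrabberVisual,   // grabbed: the visual sticks to the hand visual
    HoldUngrabPose,        // just released: the visual stays at the ungrab pose
    NetworkInterpolation   // otherwise: NetworkTransform interpolation is left untouched
}

public static class GrabRenderLogic
{
    public const float NoUngrabTime = -1f;

    public static RenderSource Select(bool isGrabbed, float ungrabTime, float now, float ungrabResyncDuration)
    {
        if (isGrabbed) return RenderSource.FollowGrabberVisual;
        if (ungrabTime != NoUngrabTime && (now - ungrabTime) < ungrabResyncDuration)
        {
            return RenderSource.HoldUngrabPose;
        }
        return RenderSource.NetworkInterpolation;
    }
}
```

In the sample, the last branch also resets ungrabTime to -1 once the resync duration has elapsed; a pure function cannot do that, so the caller would handle the reset.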

Note: some additional extrapolation could be done for additional edge cases, for instance on the client grabbing the object, between the actual grabbing and the first tick where the [Networked] vars are set: the object could start following the hand visual a bit earlier (a few milliseconds) than it otherwise would.

Physics grabbing type

Follow

For the physics grabbing type, following the current grabber implies changing the velocity of the grabbed object so that it eventually rejoins the grabber.

It can be done either by changing the velocity directly, or by using forces, depending on the kind of overall physics desired.

The sample illustrates both approaches, with an option to select one on the PhysicsGrabbable. The direct velocity change is the simpler method.

C#

void Follow(Transform followedTransform, float elapsedTime)

{

// Compute the velocity required to reach the target position within one Runner.DeltaTime

rb.VelocityFollow(target: followedTransform, localPositionOffset, localRotationOffset, elapsedTime);

// To avoid a too aggressive move, we attenuate and limit a bit the expected velocity

rb.velocity *= followVelocityAttenuation; // followVelocityAttenuation = 0.5F by default

rb.velocity = Vector3.ClampMagnitude(rb.velocity, maxVelocity); // maxVelocity = 10f by default

}
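The effect of the attenuation and clamping steps can be illustrated on the position part alone. The following is a minimal sketch, assuming System.Numerics.Vector3 and a simple "reach the target in one step" velocity; it is not the implementation of the sample's VelocityFollow extension, which is not shown here, and the VelocityFollowMath class is hypothetical. The default values mirror the ones mentioned in the comments above.

```csharp
using System.Numerics;

// Engine-independent sketch of a direct velocity follow (hypothetical helper, not the sample's VelocityFollow).
public static class VelocityFollowMath
{
    // Velocity that would bring `currentPosition` to `targetPosition` in one step of `deltaTime`,
    // then attenuated and limited to avoid an overly aggressive move.
    public static Vector3 FollowVelocity(Vector3 currentPosition, Vector3 targetPosition, float deltaTime,
        float attenuation = 0.5f, float maxVelocity = 10f)
    {
        Vector3 velocity = (targetPosition - currentPosition) / deltaTime;
        velocity *= attenuation;
        return ClampMagnitude(velocity, maxVelocity);
    }

    // System.Numerics has no ClampMagnitude, so a small equivalent is provided here.
    public static Vector3 ClampMagnitude(Vector3 v, float maxLength)
    {
        float length = v.Length();
        return length > maxLength ? v * (maxLength / length) : v;
    }
}
```

For a target 0.1 units away and a 0.02 s step, the raw velocity of about 5 units/s is halved by the attenuation; for a target 10 units away, the result is capped at the maximum velocity, so a distant object catches up over several ticks instead of jumping.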

FixedUpdateNetwork

To be able to compute physics properly, the network inputs data are required for the grabbing client, as explained later during the FixedUpdateNetwork description. So the input authority has to be properly assigned during the Grab():

C#

public override void Grab(NetworkGrabber newGrabber, GrabInfo newGrabInfo)

{

grabbable.localPositionOffset = newGrabInfo.localPositionOffset;

grabbable.localRotationOffset = newGrabInfo.localRotationOffset;

currentGrabber = newGrabber;

if (currentGrabber != null)

{

lastGrabbingUser = currentGrabber.Object.InputAuthority;

}

lastGrabber = currentGrabber;

DidGrab();

// We store the precise grabbing tick, to be able to determine whether we were grabbing during a resimulation tick,

// where the actual currentGrabber may have changed in the latest forward ticks

startGrabbingTick = Runner.Tick;

endGrabbingTick = -1;

}

During FixedUpdateNetwork, each client runs the code below so that the velocity of the grabbed object makes it move towards the grabbing hand.

The important aspect to keep in mind is that FixedUpdateNetwork is called on the client:

- during forward ticks (ticks computed for the first time, which try to predict what happens after the latest reliable host data),

- but also during resim ticks (predicted ticks that are recomputed when new data arrives from the server, potentially contradicting the predictions made before)

While the user is grabbing the object, this is of little importance, as the Follow code is relevant for each tick independently.

But when the user releases the grabbable object, during the resim ticks, some ticks occurred while the object was still grabbed, while others occurred when it was no longer grabbed. But the currentGrabber variable has been set to null in the Ungrab() call, so it is no longer suitable for the resim ticks before the ungrab.

So, to be sure that the actual grabbing status is known for any given tick, the ticks associated with the grabbing and ungrabbing are stored in the startGrabbingTick and endGrabbingTick variables. Then, in FixedUpdateNetwork(), during the resimulations, those variables are used to determine whether the object was actually grabbed during this tick.

C#

public override void FixedUpdateNetwork()

{

if (Runner.IsForward)

{

// During forward ticks, IsGrabbed is reliable, as it is changed during forward ticks

// (more precisely, it is one tick late, due to OnChanged being called AFTER FixedUpdateNetwork, but this way every client, including proxies, can apply the same physics)

if (IsGrabbed)

{

grabbable.Follow(followedTransform: currentGrabber.transform, elapsedTime: Runner.DeltaTime);

}

}

if (Runner.IsResimulation)

{

bool isGrabbedDuringTick = false;

if (startGrabbingTick != -1 && Runner.Tick >= startGrabbingTick)

{

if (Runner.Tick < endGrabbingTick || endGrabbingTick == -1)

{

isGrabbedDuringTick = true;

}

}

if (isGrabbedDuringTick)

{

grabbable.Follow(followedTransform: lastGrabber.transform, elapsedTime: Runner.DeltaTime);

}

// For resim, we reapply the release velocity on the Ungrab tick, like it was done in the Forward tick where it occurred first.

if (endGrabbingTick == Runner.Tick)

{

grabbable.rb.velocity = lastUngrabVelocity;

grabbable.rb.angularVelocity = lastUngrabAngularVelocity;

}

}

}
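
The resimulation check above reduces to a half-open tick window. The sketch below isolates that condition so it can be reasoned about on its own. It assumes plain int ticks instead of Fusion's tick type, and the GrabWindow class and its members are hypothetical names introduced for illustration.

```csharp
// Hypothetical helper, for illustration only (not part of the sample).
public static class GrabWindow
{
    // Same convention as in the code above: -1 means "no tick stored".
    public const int NoTick = -1;

    // True when 'tick' falls in [startGrabbingTick, endGrabbingTick),
    // or in [startGrabbingTick, +infinity) while the object is still grabbed.
    public static bool WasGrabbedDuringTick(int tick, int startGrabbingTick, int endGrabbingTick)
    {
        if (startGrabbingTick == NoTick || tick < startGrabbingTick) return false;
        return endGrabbingTick == NoTick || tick < endGrabbingTick;
    }
}
```

With a grab stored at tick 100 and a release at tick 105, ticks 100 to 104 count as grabbed, while tick 105 (the tick on which the release velocity is reapplied) and later ticks do not. While endGrabbingTick is still -1, every tick from 100 onward counts as grabbed.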

Render

To avoid disturbing the interpolated position produced by the physics computation, the Render() logic here does not force the grabbed object's visual position onto the hand's visual position.

Several options are available, including doing nothing, which can be a reasonable choice (the hand would then pass through the grabbed object during collisions, for instance).

The current implementation in the sample uses the following Render logic:

- instead of the grabbed object visual staying on the hand visual, the hand visual position is forced to follow the grabbed object visual position

- in case of collision, this can lead to a difference between the real-life hand position and the displayed hand position. To keep this comfortable, a "ghost" hand is displayed at the position of the real-life hand

- to make the user feel this dissonance (especially during collisions), the controller sends a vibration proportional to the dissonance distance between the displayed hand and the actual hand. It provides a slight feeling of resistance

- no effort is made when releasing the object to restore the hand position smoothly (but it could be added if needed)

C#

public override void Render()

{

base.Render();

if (IsGrabbed)

{

var handVisual = currentGrabber.hand.networkTransform.InterpolationTarget.transform;

var grabbableVisual = networkTransform.InterpolationTarget.transform;

// For remote users, we want the hand to stay glued to the object, even though the hand and the grabbed object may be interpolated differently

handVisual.rotation = grabbableVisual.rotation * Quaternion.Inverse(grabbable.localRotationOffset);

handVisual.position = grabbableVisual.position - (handVisual.TransformPoint(grabbable.localPositionOffset) - handVisual.position);

// Add pseudo haptic feedback if needed

ApplyPseudoHapticFeedback();

}

}

// Display a "ghost" hand at the position of the real-life hand when the distance between the representation (glued to the grabbed object, and driven by forces) and the IRL hand becomes too great

// Also apply a vibration proportional to this distance, so that the user can feel the dissonance between what they ask and what they can do

void ApplyPseudoHapticFeedback()

{

if (pseudoHapticFeedbackConfiguration.enablePseudoHapticFeedback && IsGrabbed && IsLocalPlayerMostRecentGrabber)

{

if (currentGrabber.hand.LocalHardwareHand.localHandRepresentation != null)

{

var handVisual = currentGrabber.hand.networkTransform.InterpolationTarget.transform;

Vector3 dissonanceVector = handVisual.position - currentGrabber.hand.LocalHardwareHand.transform.position;

float dissonance = dissonanceVector.magnitude;

bool isPseudoHapticNeeded = (isColliding && dissonance > pseudoHapticFeedbackConfiguration.minContactingDissonance);

currentGrabber.hand.LocalHardwareHand.localHandRepresentation.DisplayMesh(isPseudoHapticNeeded);

if (isPseudoHapticNeeded)

{

currentGrabber.hand.LocalHardwareHand.SendHapticImpulse(amplitude: Mathf.Clamp01(dissonance / pseudoHapticFeedbackConfiguration.maxDissonanceDistance), duration: pseudoHapticFeedbackConfiguration.vibrationDuration);

}

}

}

}
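
The decision and amplitude computation used for the pseudo-haptic feedback can be isolated as below. This is a minimal sketch: the PseudoHaptics class is a hypothetical name, Math.Clamp stands in for Unity's Mathf.Clamp01, and the guard against a non-positive maximum distance is an addition of this sketch that the sample code above does not have.

```csharp
using System;

// Hypothetical helper, for illustration only (not part of the sample).
public static class PseudoHaptics
{
    // Feedback is needed only while colliding and once the displayed hand
    // has drifted far enough from the real hand.
    public static bool IsFeedbackNeeded(bool isColliding, float dissonance, float minContactingDissonance)
    {
        return isColliding && dissonance > minContactingDissonance;
    }

    // Vibration amplitude in [0, 1], proportional to the dissonance distance.
    public static float Amplitude(float dissonance, float maxDissonanceDistance)
    {
        // Assumption of this sketch: treat a non-positive maximum as "no vibration".
        if (maxDissonanceDistance <= 0f) return 0f;
        return Math.Clamp(dissonance / maxDissonanceDistance, 0f, 1f);
    }
}
```

With illustrative values (not the sample's defaults), a dissonance of 0.05 and a maximum distance of 0.2 give an amplitude of about 0.25, and any dissonance at or beyond the maximum distance saturates at 1.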

Third party

Next

Here are some suggestions for modifications or improvements that you can practice on this project:

- Display the local teleport ray to other players (by adding it to the RigInput structure shared during the OnInput call)

- Add voice capability. For more details on Photon Voice integration with Fusion, see this page: https://doc.photonengine.com/en-us/voice/current/getting-started/voice-for-fusion

- Create a button to spawn additional networked objects at runtime

Changelog

Fusion VR Host 1.1.8 Build 278

- update to Fusion SDK 1.1.8

- update project to Unity 2021.3.29f1 + OpenXR 1.8.1

- TrackedPoseDriver replaced by XRControllerInputDevice for head & hands tracking

- various improvements

Fusion VR Host 1.1.3 Build 6

- update to Fusion SDK 1.1.3

Fusion VR Host 1.1.2 Build 5

- fix to grab/ungrab detection to limit them to Forward ticks

- fix to a bug when the user tries to swap the grabbing hands

- added the optional PseudoHapticGrabbableRender to display a ghost grabbed object during pseudo-haptic feedback (i.e. when ghost IRL hands appear) for physics grabbable objects

- in NetworkGrabber, use FixedUpdateNetwork instead of OnChanged to have the grabbing info 1 tick earlier

- remove secondary following type, and set Follow() as virtual in PhysicsGrabbable to allow developers to implement their own following physics

- allow proxy user grabbing physics: the input authority is no longer changed, nor is the InterpolationDataSource; instead, the grabbing/ungrabbing ticks are memorized to determine the grabbing status on each client during resimulations