Mixed reality (with local multiplayer)

Overview

This sample demonstrates how to set up a project to allow:

- colocalized multiplayer, with Meta shared spatial anchors integration

- synchronizing hand tracking, and switching from controller-based hand positioning to finger-tracking

Technical Info

- This sample uses the Shared Mode topology.

- The project has been developed with Unity 2022.3, Fusion 2, and Photon Voice 2.53.

Before you start

To run the sample:

- Create a Fusion AppId in the PhotonEngine Dashboard and paste it into the App Id Fusion field in Real Time Settings (reachable from the Fusion menu).

- Create a Voice AppId in the PhotonEngine Dashboard and paste it into the App Id Voice field in Real Time Settings.

- Then load the SampleSceneWithNetworkRig scene and press Play.

Download

| Version | Release Date | Download |
|---|---|---|
| 2.0.0 | Apr 18, 2024 | Fusion mixedreality 2.0.0 Build 510 |

Testing

To be able to test the colocalization, the application needs to be distributed through a Meta release channel.

If you have trouble testing the application, don't hesitate to join us on our Discord.

Handling Input

Meta Quest (controller tracking)

- Grab: first put your hand over the object, then grab it using the controller grab button.

- Draw: if your hand is not over a grabbable object, use the trigger button of the controller to start drawing. A few seconds after your last line was drawn, the drawing will be finished, and an anchor will make it grabbable.

Meta Quest (hand tracking)

- Grab: pinch your index finger and thumb over the object to grab it. The object stays grabbed while your fingers stay pinched.

- Draw: if your hand is not over a grabbable object, pinch your fingers to start drawing. A few seconds after your last line was drawn, the drawing will be finished, and an anchor will make it grabbable.

Folder Structure

The main folder /MixedReality contains all elements specific to this sample.

The folder /IndustriesComponents contains components shared with other industries samples, such as the Fusion Meeting sample or the Fusion Metaverse sample.

The /Photon folder contains the Fusion and Photon Voice SDKs.

The /Photon/FusionXRShared folder contains the rig and grabbing logic coming from the VR shared sample, creating a FusionXRShared light SDK that can be shared with other projects.

The /Photon/FusionAddons folder contains the Industries Addons used in this sample.

The /XR folder contains configuration files for virtual reality.

Architecture overview

The Mixed Reality sample relies on the same code base as the one described in the VR Shared page, notably for the rig synchronization.

The grabbing system used here is the alternative "local rig grabbing" implementation described in the VR Shared - Local rig grabbing page.

Colocalization through shared spatial anchors

In mixed reality, if several users are in the same room, it is more comfortable to see their avatars at their real-life positions. To achieve this, if another user is already present in the room upon connection, the new user is teleported and reoriented in the Unity room, so that what they see in real life matches the positions in the network room.

To do so, shared spatial anchors are used. They are based on point clouds of the real-life room, synchronized and shared on the Meta backends, and exposed to the developer as an Id. The overall process is the following:

- when connecting to the room, we create an anchor, which is stored on Meta's backend, and whose Id is synchronized through Fusion

- when another user connects, they receive the anchor Id through Fusion

- then they ask the Meta SDK whether this Id is present in their room (behind the scenes, the Id is requested on the Oculus backend, and a local lookup is performed)

- if the anchor is found, the teleportation / rotation of the new user is performed

This is done in this sample through the SSAManager, OculusUserInfo and ReferenceAnchor components.

Networked data

The OculusUserInfo is a network behavior, placed on a user rig, that synchronizes the user's Oculus Id, the colocalization state of this user, and the other user with whom they have been colocalized.

The ReferenceAnchor is a network behavior, created for each shared spatial anchor, that synchronizes the anchor Id ("UUID") and the list of Oculus user Ids with whom it has been shared. The game object on which it is located also includes a NetworkTransform, to sync its position.

The SSAManager implements all the operations handling shared spatial anchors: creation, lookup, and synchronization with the Meta backends. It also keeps track of all the anchors and users, and applies the teleportation/rotation to the local user when a colocalization is found.
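As an illustration of the state described above, here is a minimal, engine-independent sketch in Python. It is not the sample's code: the class names `UserInfoState` and `ReferenceAnchorState`, their fields, and the `can_look_up` helper are hypothetical stand-ins for what OculusUserInfo and ReferenceAnchor synchronize. It only shows one plausible reading of the rule that a remote user waits until the anchor UUID is known and their own Oculus Id appears in the shared list.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Assumption: 0 is treated as "no valid Oculus user Id", since the page notes
# that 0 may be received when the app is not distributed via a release channel.
INVALID_USER_ID = 0


@dataclass
class UserInfoState:
    """Hypothetical stand-in for the state synchronized by OculusUserInfo."""
    oculus_user_id: int = INVALID_USER_ID
    is_colocalized: bool = False
    colocalized_with: Optional[int] = None  # Oculus Id of the other user, if any


@dataclass
class ReferenceAnchorState:
    """Hypothetical stand-in for the state synchronized by ReferenceAnchor."""
    uuid: str = ""  # empty until the anchor UUID has been published
    shared_with: List[int] = field(default_factory=list)


def can_look_up(anchor: ReferenceAnchorState, local: UserInfoState) -> bool:
    """Return True once the local user may start looking for the anchor."""
    return (
        local.oculus_user_id != INVALID_USER_ID
        and anchor.uuid != ""
        and local.oculus_user_id in anchor.shared_with
    )
```

In this sketch, the lookup stays blocked for a user whose Id is still 0, which mirrors the release channel requirement mentioned in the Oculus dashboard requirements.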

Detailed shared anchor synchronisation process

The basic process of handling shared spatial anchors is described in the "big picture" chapter about SSA in Meta's documentation.

To better understand the logic and the division of tasks between the 3 classes implementing SSA in the sample, here is the full process used to handle the shared spatial anchors, and which class handles each step:

For all users:

- [OculusUserInfo] Wait for the Oculus Platform Core SDK initialization

- [OculusUserInfo] Check if user is entitled to use the application

- [OculusUserInfo] Get the Oculus user Id of the logged in user

- [OculusUserInfo] Fusion networking: store the Oculus Id in a networked var so that other users can access it

For the user creating an anchor:

- [ReferenceAnchor] Wait for the local Oculus user Id to be available

- [ReferenceAnchor] Create a shared spatial anchor (using SSAManager)

- [ReferenceAnchor] Wait for the spatial anchor to have been assigned a UUID and to be ready

- [ReferenceAnchor] Save the anchor to Meta's backend (using SSAManager)

- [ReferenceAnchor] Fusion networking: store the anchor UUID in a networked variable

- [ReferenceAnchor] Authorize all Oculus user Ids in the room to have access to the anchor position on Meta's backend (using SSAManager)

- [ReferenceAnchor] Fusion networking: store the list of Oculus user Ids who have access to the anchor in a networked array

For the user looking for remote anchors:

- [ReferenceAnchor] Fusion networking: receive the anchor UUID

- [ReferenceAnchor] Fusion networking: check the user list, to know when the anchor access on the Meta backend is authorized for the local Oculus user Id

- [ReferenceAnchor] Ask the Meta SDK to look for the anchor in the real-life room (using SSAManager)

- [ReferenceAnchor] If the anchor is indeed detected in the room, create a shared spatial anchor, and bind it to the detected data (using SSAManager)

- [ReferenceAnchor] Wait for the shared spatial anchor to be localized (using SSAManager)

- [SSAManager] Reposition the user hardware rig to make the anchor network position (received from the sibling NetworkTransform component) match the real-life anchor position
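The last step above, repositioning the rig, comes down to a rigid transform. The following Python sketch is not taken from the sample: it is a simplified, engine-independent illustration restricted to the ground plane (2D position plus yaw), using the standard counterclockwise math convention rather than Unity's axes. The names `Pose2D`, `rotate` and `realign_rig` are hypothetical. The idea is to rotate the rig around the detected anchor by the yaw difference, then translate it so that the detected anchor lands on the anchor's network pose.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float
    y: float
    yaw: float  # radians, counterclockwise (math convention, not Unity's)


def rotate(x: float, y: float, angle: float) -> tuple:
    """Rotate the vector (x, y) counterclockwise by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return (x * c - y * s, x * s + y * c)


def realign_rig(rig: Pose2D, detected_anchor: Pose2D, network_anchor: Pose2D) -> Pose2D:
    """Return the rig pose that makes the detected anchor match the network anchor.

    The detected anchor is assumed to be rigidly attached to the rig, so the
    same rotation and translation are applied to both.
    """
    delta = network_anchor.yaw - detected_anchor.yaw
    # Offset from the detected anchor to the rig, rotated by the yaw correction.
    ox, oy = rotate(rig.x - detected_anchor.x, rig.y - detected_anchor.y, delta)
    return Pose2D(network_anchor.x + ox, network_anchor.y + oy, rig.yaw + delta)
```

For example, with the rig at the origin, an anchor detected one unit along the rig's x axis, and a network anchor at (5, 5) with a yaw of 90 degrees, the rig ends up at (5, 4) with a yaw of 90 degrees, which keeps the anchor one unit "in front" of the rig. A real implementation would work with full 3D poses and the engine's own transform types.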

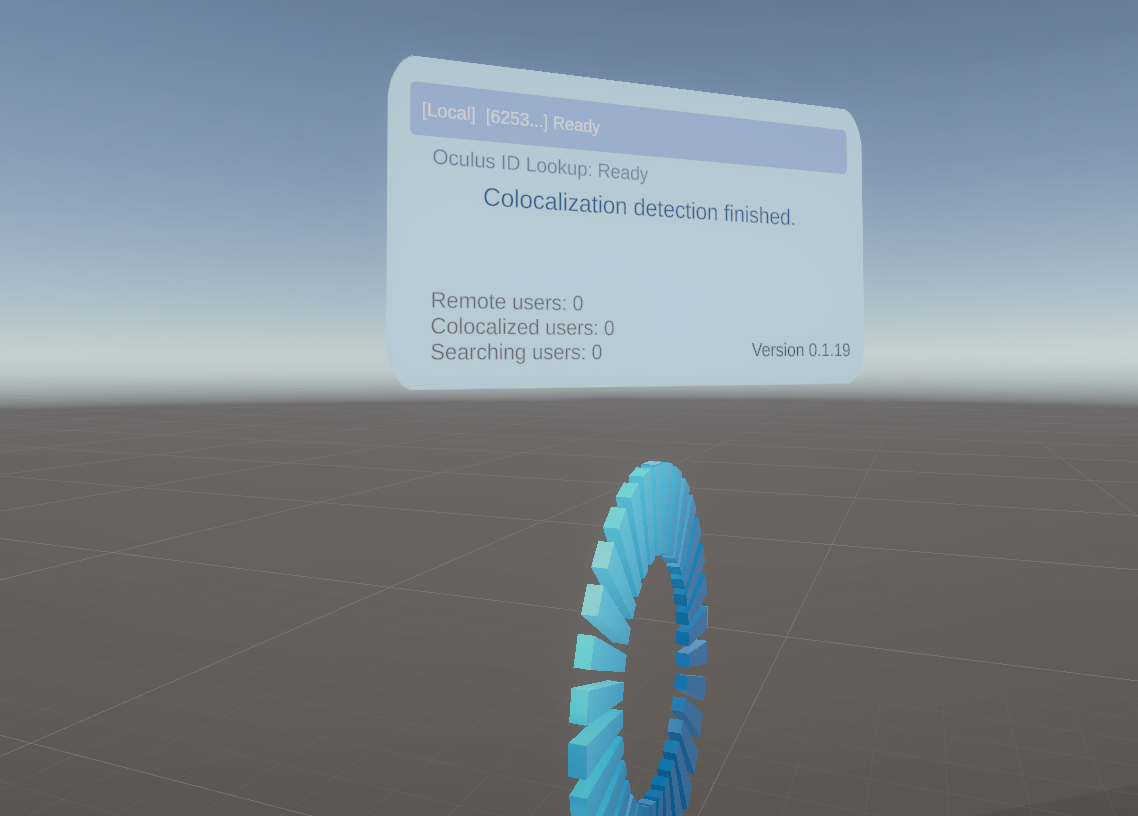

To help understand the colocalization process described here, an information panel is displayed at the center of the room.

Oculus dashboard requirements

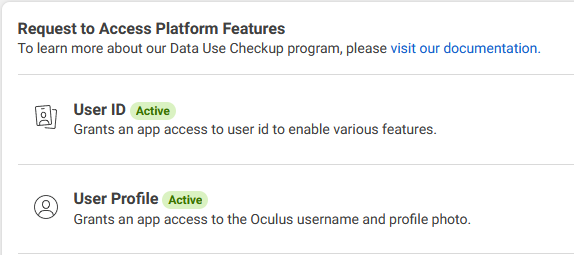

To be able to use the shared spatial anchor, the Oculus user Id is needed.

To have access to it, creating an application on the Oculus dashboard is required.

Then, a data use checkup must be completed, requesting access to the user Id and the user profile.

Finally, note that to actually have access to the Oculus user Id on a Quest, it is required to distribute the application through a release channel (otherwise, you might always receive 0 for the user Id).

Colocalization tips

The SSA-based colocalization process can be quite sensitive. The most important thing is the quality of the room scan on each device involved in a colocalized experience.

Besides, colocalization might systematically fail whenever one particular user is the first one in the room. This can occur when the point cloud data sharing setting on that user's headset is in an inconsistent state (it appears enabled, but is not actually taken into account).

To fix it, on the Quest, go to Parameters > Confidentiality > Device authorizations, and uncheck and recheck "Cloud points". Doing the same for the Parameters > Confidentiality > Authorizations >

Used XR Addons & Industries Addons

To make it easy for everyone to get started with their 3D/XR project prototyping, we provide a few free addons. See XR Addons for more details. Also, we provide to our Industries Circle members a comprehensive list of reusable addons. See Industries Addons for more details. Here are the addons we've used in this sample.

XRShared

The XRShared addon provides the base components to create an XR experience compatible with Fusion. It is in charge of the players' rig parts synchronization, and provides simple features such as grabbing and teleport.

See XRShared for more details.

Fingers synchronization for Meta's hands

The finger tracking is provided by the Meta OVR hands synchronization add-on, which provides a high level of compression for the finger-tracking data.

Drawing

To draw with the finger, a subclass of the Drawer class from the drawing add-on has been created, FingerDrawer.

This version uses the HandIndexTipDetector component to receive the index position (no matter if it comes from the finger tracking skeleton or the controller tracking hand skeleton), and triggers the drawing based on the current mode.
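The mode-based trigger can be pictured with the short Python sketch below. It is not the FingerDrawer code: `HandMode`, `wants_to_draw` and `is_drawing_finished` are hypothetical names, and the idle delay is a parameter because the sample only states that a drawing is finished "a few seconds" after the last line. The rules follow the Handling Input section: drawing starts with the trigger in controller mode, with a pinch in finger-tracking mode, and never while the hand is over a grabbable object.

```python
from enum import Enum


class HandMode(Enum):
    CONTROLLER = "controller"
    FINGER_TRACKING = "finger_tracking"


def wants_to_draw(mode: HandMode, trigger_pressed: bool,
                  is_pinching: bool, over_grabbable: bool) -> bool:
    """Decide whether the current input should produce a drawing stroke."""
    if over_grabbable:
        # Over a grabbable object, the same input is interpreted as a grab.
        return False
    if mode is HandMode.CONTROLLER:
        return trigger_pressed
    return is_pinching


def is_drawing_finished(last_line_time: float, now: float, idle_delay: float) -> bool:
    """A drawing is considered finished once no line was drawn for `idle_delay` seconds."""
    return (now - last_line_time) >= idle_delay
```

Keeping the decision in a pure function like this makes it independent of where the index tip position comes from, which matches the role the HandIndexTipDetector plays in the description above.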

ConnectionManager

We use the ConnectionManager addon to manage the connection launch and spawn the user representation.

See ConnectionManager Addons for more details.

Dynamic audio group

We use the dynamic audio group addon to enable users to chat together, while taking into account the distance between users to optimize comfort and bandwidth consumption.

See Dynamic Audio group Industries Addons for more details.

Avatar and RPMAvatar

The current state of the sample does not provide an avatar selection screen, but the avatar add-on is integrated, so a selection screen can be easily added to either use Ready Player Me or simple avatars.

Currently, the local user settings simply choose a random avatar from the RPMAvatarLibrary present in the scene.

Third party components

- Oculus Integration

- Oculus Lipsync

- Oculus Sample Framework hands

- Ready Player Me

- Sounds
