Expo

If you require help integrating the XR Interaction Toolkit in a Fusion app, please contact the VR team on the Circle Discord.

Overview

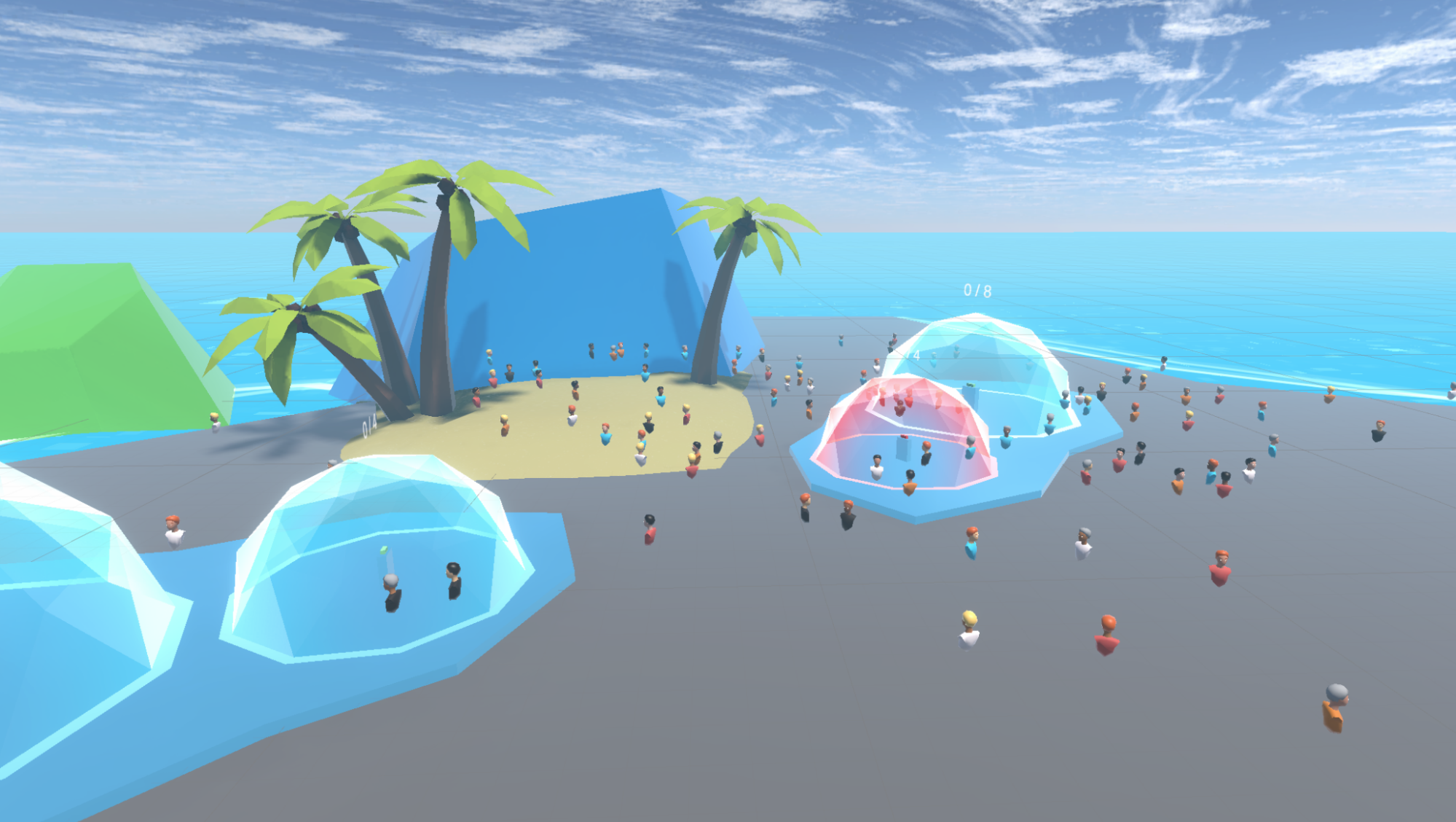

The Fusion Expo sample demonstrates one approach to developing a social application for up to 100 players with Fusion.

Each player is represented by an avatar and, thanks to the Photon Voice SDK, can talk to other players located in the same chat bubble.

Some of the highlights of this sample are:

- First, the player customizes their avatar on the avatar selection screen.

- Then, they can join the Expo scene. If the player launches the sample on a PC or Mac, they can choose between Desktop mode (using keyboard & mouse) and VR mode (Meta Quest headset).

- Players can talk to each other if they are located in the same static chat bubble. In each static chat bubble, a lock button is available to prevent new players from entering.

- Also, if two players are close to each other, a dynamic chat bubble is created around the player with the lower velocity.

- 3D pens are available to create 3D drawings. Each 3D drawing can be moved using its anchor.

- A classic whiteboard is also available. Each drawing on it can be moved using its anchor.

- Players can move to a new location (new scene loading).

More technical details are provided directly in the code comments.

Technical Info

- This sample uses the Shared Mode topology, but the core is compatible with both Shared and Host Mode topologies.

- Builds are available for PC, Mac and Meta Quest.

- The project has been developed with Unity 2021.3.13f1, Fusion 1.1.3f 599 and Photon Voice 2.31.

- Two avatar solutions are supported (homemade simple avatars and Ready Player Me 1.9.0 avatars).

Before you start

To run the sample:

- Create a Fusion AppId in the PhotonEngine Dashboard and paste it into the App Id Fusion field in Real Time Settings (reachable from the Fusion menu).

- Create a Voice AppId in the PhotonEngine Dashboard and paste it into the App Id Voice field in Real Time Settings.

- Then load the AvatarSelection scene and press Play.

Download

| Version | Release Date | Download |
|---|---|---|
| 0.0.19 | May 12, 2023 | Fusion Expo XRShared 0.0.19 Build 192 |

Download Binaries

A demo version of Expo is available below:

Handling Input

Desktop

Keyboard

- Move: WASD or ZQSD to walk

- Rotate: QE or AE to rotate

- Bot spawn: press “B” to spawn one bot. Hold it for longer than 1 second to create 50 bots on release

Mouse

- Move: left-click to display a pointer. On release, you teleport to the pointed location if it is an accepted target

- Rotate: keep the right mouse button pressed and move the mouse to rotate the point of view

- Move & rotate: keep both the left and right buttons pressed to move forward. You can still move the mouse to rotate

- Grab & use (3D pens): put the mouse over the object and grab it with the left mouse button. Then use it with the space key.

Meta Quest

- Teleport: press A, B, X, Y, or any stick to display a pointer. On release, you teleport to the pointed location if it is an accepted target

- Touch (e.g. for chat bubble lock buttons): simply put your hand over a button to toggle it

- Grab: first put your hand over the object, then grab it using the controller grab button

- Bot spawn: press the menu button on the left controller to spawn one bot. Hold it for longer than 1 second to create 50 bots on release

Folder Structure

The main folder /Expo contains all elements specific to this sample.

The folder /Expo/Integrations manages compatibility with third party solutions like ReadyPlayerMe.

The /Photon folder contains the Fusion and Photon Voice SDKs.

The /Photon/FusionXRShared folder contains the rig and grabbing logic coming from the VR Shared sample, forming a light FusionXRShared SDK that can be shared with other projects.

The /Photon/FusionXRShared/Extensions folder contains extensions for FusionXRShared, for reusable features like synchronised rays, locomotion validation, ...

The /Plugins folder contains the Ready Player Me SDK.

The /StreamingAssets folder contains pre-built ReadyPlayerMe avatars. It can be removed freely if you don’t want to use those prebuilt avatars.

The /XRI and /XR folders contain configuration files for virtual reality.

Architecture overview

The Fusion Expo sample relies on the same code base as the one described in the VR Shared page, notably for rig synchronization.

The grabbing system used here is the alternative "local rig grabbing" implementation described in the VR Shared - Local rig grabbing page.

Aside from this base, the sample contains some extensions to FusionXRShared that handle reusable features such as synchronised rays, locomotion validation, touching, teleportation smoothing and a gazing system.

Network Connection and application lifecycle

The ConnexionManager launches a Fusion session in Shared Mode topology and spawns a user prefab for each connected user. For more details, see the "Connexion Manager" section of the VR Shared sample documentation.

The SessionEventsManager observes the Fusion and Photon Voice connection status to notify the components interested in it, notably the SoundManager, which handles various sound effects.

Audio

The VoiceConnection and FusionVoiceBridge components start the Photon Voice audio connection alongside the Fusion session, while the Recorder component captures the microphone input.

For Oculus Quest builds, additional user authorizations are required; this request is handled by the MicrophoneAuthorization script.

The user prefab contains a Speaker and VoiceNetworkObject placed on its head, to project spatialized sound upon receiving voice.

For more details on Photon Voice integration with Fusion, see this page: https://doc.photonengine.com/en-us/voice/current/getting-started/voice-for-fusion

Rigs

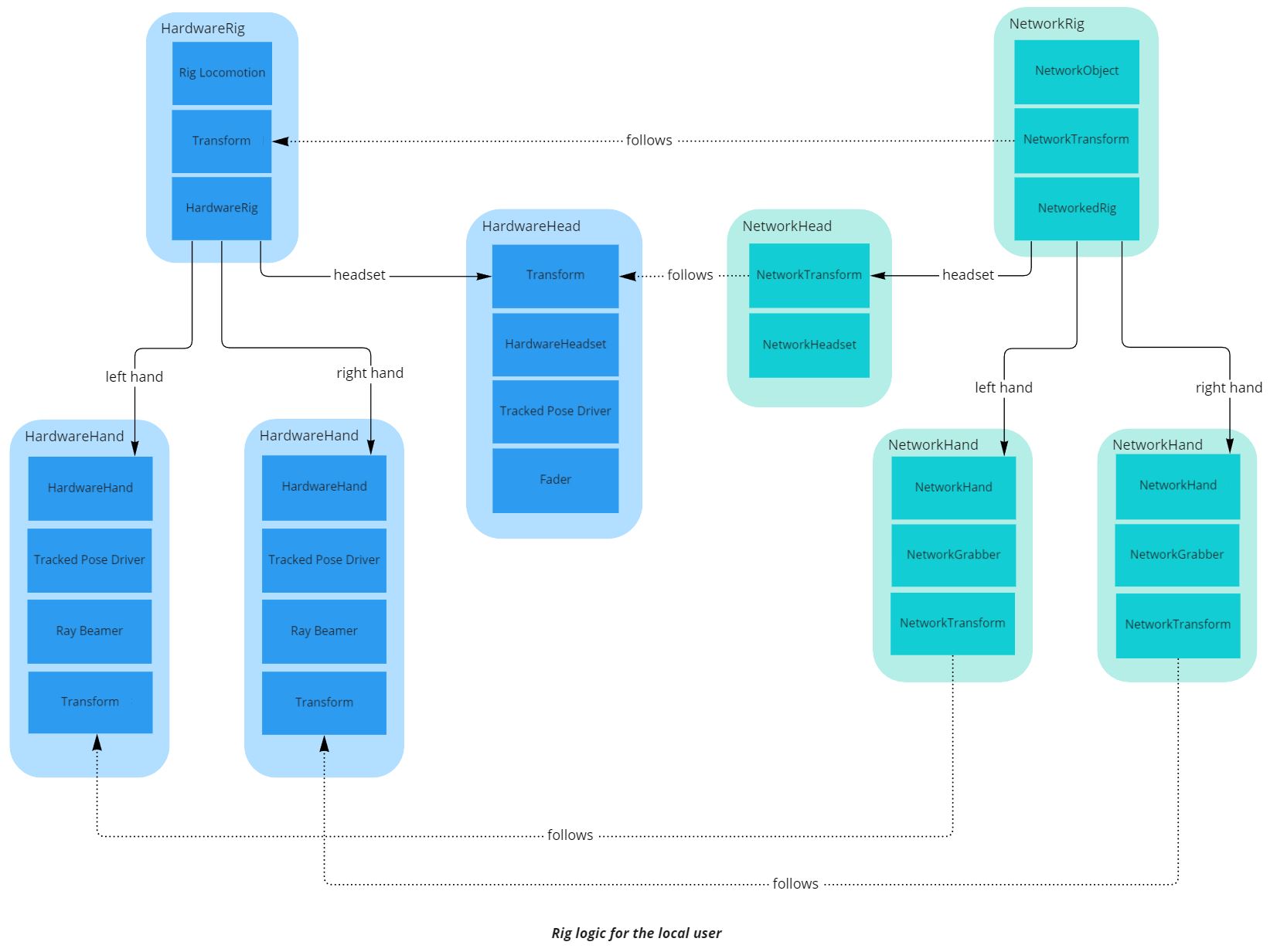

In an immersive application, the rig describes all the mobile parts that are required to represent a user, usually both hands, a head, and the play area (the personal space that can be moved, for instance when a user teleports).

In a networked session, every user is represented by a networked rig, whose various parts' positions are synchronized over the network.

Several architectures are possible, and valid, regarding how the rig parts are organized and synchronized. Here, a user is represented by a single NetworkObject, with several nested NetworkTransforms, one for each rig part.

Regarding the specific case of the network rig representing the local user, this rig has to be driven by the hardware inputs. To simplify this process, a separate, non-networked rig has been created, called the “Hardware rig”. It uses classic Unity components (like TrackedPoseDriver) to collect the hardware inputs.

The NetworkedRig component, located on the user prefab, manages this tracking for all the nested rig parts.

Aside from sharing the rig parts' positions with other players during FixedUpdateNetwork(), the NetworkRig component also handles extrapolation: during Render(), the interpolation target, which handles the graphical representation of the various rig parts' NetworkTransforms, is moved so that the local user always sees their hands at the most recent position, even between network ticks.

For more details on rig parts synchronization, see the "Rig - Details" section of the VR Shared sample documentation.

Rig Info

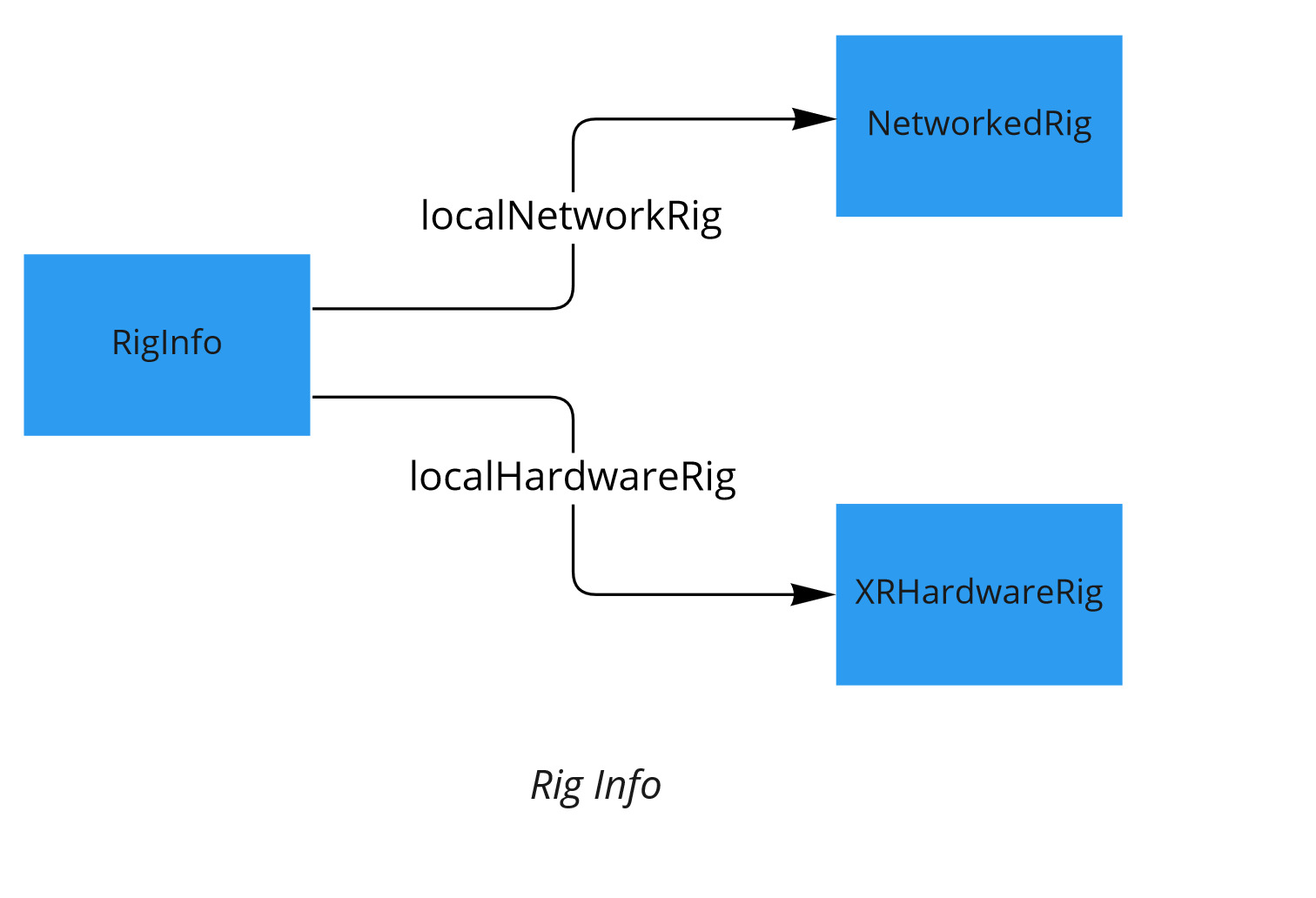

To easily find the HardwareRig and the matching NetworkRig of the local user, the RigInfo component registers the HardwareRig and the local NetworkedRig for later use.

Interaction Stack

This sample allows the player to move, grab objects, and touch surfaces.

All these manipulations are done locally (on the hardware rig), independently of the network, and then passed to the network rig through Fusion input.

Locomotion

In virtual reality, locomotion is provided through ray-based teleportation, with additional snap-turn available on the joystick. The locomotion is managed by the RayBeamer and RigLocomotion classes, as described in the "Teleport & Locomotion" section of the VR Shared sample documentation.

In addition, the rays are synchronized, so that users can use them to point at something for other users. This is done through the HardwareHandRay class, which synchronizes a RayData structure with other users through a [Networked] var.

In the desktop version, the user can either move with the keyboard, or left-click on the ground to teleport there. The DesktopController and MouseTeleport components manage the locomotion, while the MouseCamera component manages the camera movement using right-click mouse movements.

For remote users, the play area movement is smoothed, replacing teleportation with a progressive move to the target place (unless the instantPlayareaTeleport option is set to true). This smoothing is done in the TeleportationSmoother class. It uses an [OrderAfter(typeof(NetworkRig))] attribute so that its own FixedUpdateNetwork() runs after the NetworkRig FixedUpdateNetwork() and can override the position defined there (it only does so when smoothing is needed).
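The smoothing can be pictured as a per-tick step toward the destination. The following is a hedged, language-neutral Python sketch rather than the sample's C# code: the function name, the speed parameter and the tuple-based vectors are assumptions; only the instantPlayareaTeleport option comes from the text above.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def smooth_play_area_step(current: Vec3, target: Vec3, speed: float, dt: float,
                          instant_playarea_teleport: bool = False) -> Vec3:
    """Hypothetical helper: one tick of play-area smoothing for a remote user."""
    if instant_playarea_teleport:
        # Smoothing disabled: jump straight to the teleport destination.
        return target
    distance = math.dist(current, target)
    max_step = speed * dt
    if distance <= max_step:
        # Close enough to finish the move during this tick.
        return target
    # Move a fraction of the way along the straight line to the target.
    ratio = max_step / distance
    return tuple(c + (t - c) * ratio for c, t in zip(current, target))
```

Calling it once per tick until it returns the target reproduces a progressive move; in the sample, the override only happens while such a move is in progress.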

Locomotion restrictions

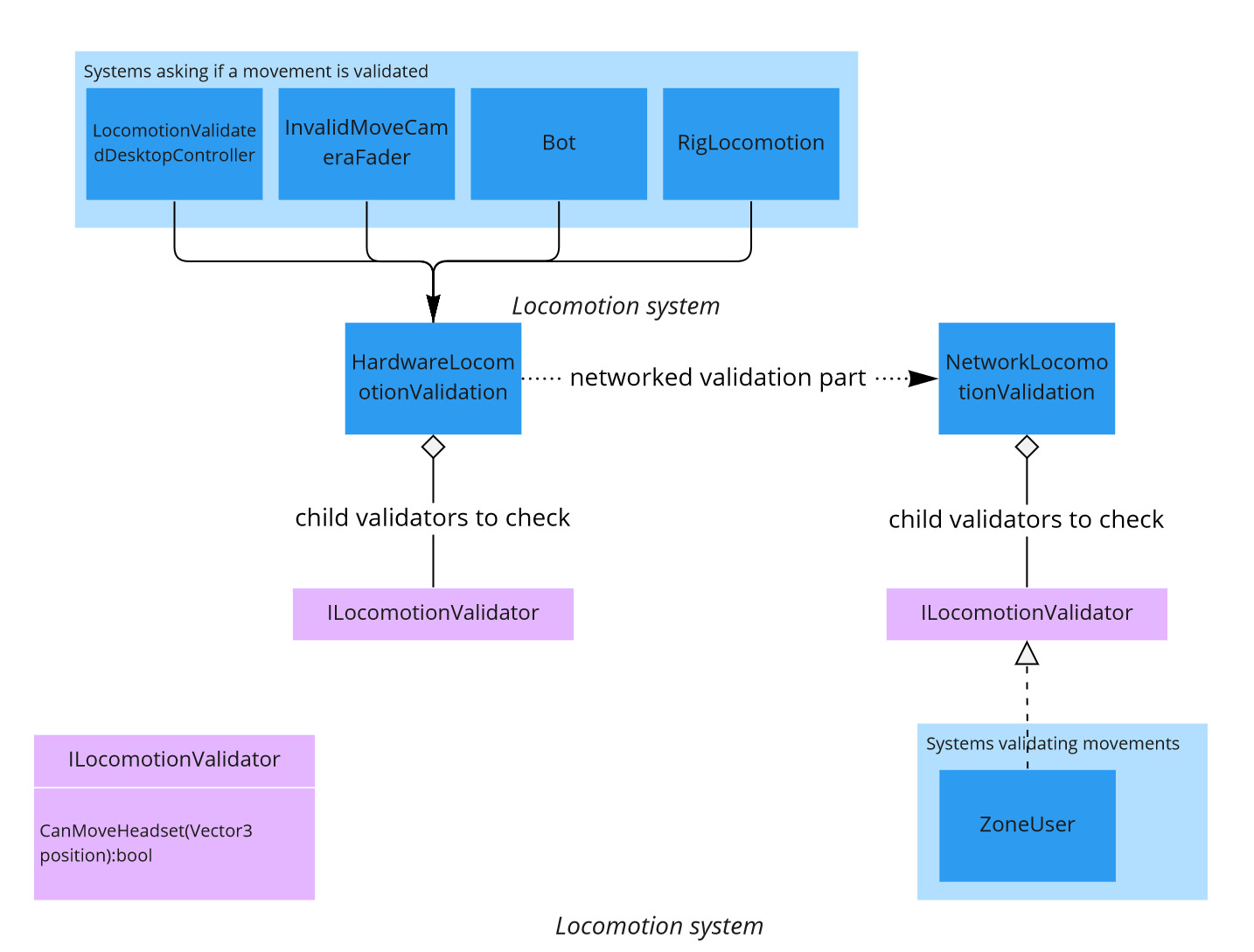

This sample application sometimes prevents the user from going to some places (for example, it is not possible to enter a chat bubble if its max capacity is reached).

To do so, every component that wants to move the user relies on both the generic locomotion validation system, and the constraints associated with a specific locomotion mode.

Locomotion validation system

To check that the user is not trying to go to a forbidden zone, every locomotion system first asks the HardwareLocomotionValidation component, placed on the HardwareRig, whether the user can move to this position, using the CanMoveHeadset() method. To answer, HardwareLocomotionValidation checks whether the move is valid for all its ILocomotionValidator children, and for all the ILocomotionValidator children of the NetworkLocomotionValidation component, placed on the NetworkedRig instance representing the local user on the network.

Additionally, if users put their head in a forbidden zone, the view fades, to prevent them from “cheating”. This is handled in the InvalidMoveCameraFader class.
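The aggregation rule, where a move is allowed only if every validator on both rigs accepts it, can be sketched as follows. This Python sketch is illustrative only: can_move_headset mirrors the documented CanMoveHeadset() method, but its parameter, the class names and the example box validator are assumptions.

```python
from dataclasses import dataclass
from typing import Protocol, Sequence, Tuple

Vec3 = Tuple[float, float, float]


class LocomotionValidator(Protocol):
    """Hypothetical counterpart of ILocomotionValidator."""
    def can_move_headset(self, new_headset_position: Vec3) -> bool: ...


@dataclass
class ForbiddenBoxValidator:
    """Example validator (hypothetical): rejects positions inside an axis-aligned box."""
    box_min: Vec3
    box_max: Vec3

    def can_move_headset(self, new_headset_position: Vec3) -> bool:
        inside = all(lo <= p <= hi for p, lo, hi in
                     zip(new_headset_position, self.box_min, self.box_max))
        return not inside


class LocomotionValidationSketch:
    """Combines the validators found on the hardware rig and on the local network rig."""
    def __init__(self, hardware_validators: Sequence[LocomotionValidator],
                 network_validators: Sequence[LocomotionValidator]):
        self.validators = list(hardware_validators) + list(network_validators)

    def can_move_headset(self, new_headset_position: Vec3) -> bool:
        # A single refusal is enough to forbid the move.
        return all(v.can_move_headset(new_headset_position) for v in self.validators)
```

Keeping each restriction in its own small validator lets features such as chat bubbles add rules without the locomotion systems knowing about them.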

Other restrictions

Additionally, the locomotion is limited by other factors, depending on the locomotion system used:

- RigLocomotion: a user can only teleport onto a collider whose layer is in the RigLocomotion's locomotion layer mask (like the TeleportTarget layer).

- LocomotionValidatedDesktopController locomotion: the controller checks that the head position after a move would be correct, by checking that it would not be inside a collider, and that a correct walkable navigation mesh point would be under it after moving.

- Bot: it ensures that bots stay on the walkable nav mesh.
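The RigLocomotion restriction relies on a layer mask, a bit field with one bit per layer. The Python sketch below shows the usual bit test; the layer index chosen for TeleportTarget is an assumption, since the actual index depends on the project's layer settings.

```python
def layer_in_mask(layer: int, layer_mask: int) -> bool:
    """Return True if bit `layer` is set in `layer_mask` (layers are numbered 0 to 31)."""
    if not 0 <= layer < 32:
        raise ValueError("layer must be in [0, 31]")
    return (layer_mask & (1 << layer)) != 0


TELEPORT_TARGET_LAYER = 8  # hypothetical layer index for TeleportTarget
locomotion_layer_mask = 1 << TELEPORT_TARGET_LAYER


def can_teleport_onto(collider_layer: int) -> bool:
    # Teleportation is only accepted on colliders whose layer is in the mask.
    return layer_in_mask(collider_layer, locomotion_layer_mask)
```

In Unity C#, the equivalent check is typically written (mask.value & (1 << layer)) != 0.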

Touchable

To trigger events on finger touch, the hands contain a Toucher component, while some objects have a Touchable one. The event is entirely handled in the hardware rig, and has no automatic link to the network: you have to handle it in the components called by Touchable.OnTouch.

Note that the desktop controls allow you to touch Touchable objects with the mouse pointer, through the BeamTouchable and BeamToucher classes. These same classes can also allow VR users to point and click on a UI (depending on the capabilities chosen on the BeamToucher class).

UI

The canvas buttons and sliders can be used with the mouse.

To be able to touch them with the user's fingers or pointer in VR, the UITouchButton component ensures that when the player touches the 3D button box collider, the OnTouch event is forwarded to the UI button. In the same way, the TouchableSlider component manages the slider value.

To automatically add those components to buttons and sliders placed in a canvas, the TouchableCanvas component manages the automatic instantiation of prefabs containing them.

Grabbing

Grabbing is only used in this sample to manipulate the pens and drawings.

The grabbing system used here is the alternative "local rig grabbing" implementation described in the VR Shared - Local rig grabbing page.

Avatar

Users are represented graphically by an avatar. This sample supports two kinds of avatars: Ready Player Me avatars and a simpler custom one.

Gazer

To offer a more natural avatar representation, a dedicated system handles automatic eye tracking: when an object of interest is presented in front of an avatar, its eyes find this target and follow it. If a closer target is presented, the eye focus changes.

If no target is available, the eyes move randomly from time to time.

Finally, all the avatar systems available in this sample handle eye blinking, also to appear more natural.

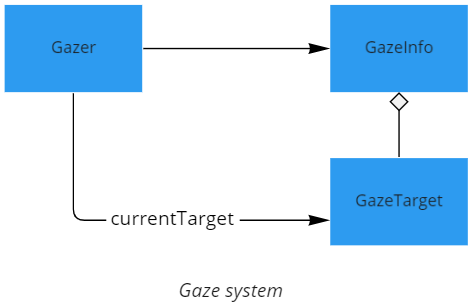

To be a potential eye target, a GameObject must have a GazeTarget component. All avatars' eyes and heads have such components by default in the prebuilt prefabs. These GazeTarget components register with the GazeInfo manager.

The Gazer component drives the eyes by asking GazeInfo for a list of potential GazeTargets (those with a valid resulting eye angle and target distance), sorted by distance.

This sorting can be quite expensive when many available targets are close, so it is done in background threads.
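The target selection done for the Gazer can be sketched as a filter followed by a sort. In this Python sketch, the function name, the angle and distance limits, and the GazeTargetSketch type are assumptions; the sample performs the sort in background threads, while this version runs synchronously for clarity.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class GazeTargetSketch:
    name: str
    position: Vec3


def potential_gaze_targets(eye_position: Vec3, eye_forward: Vec3,
                           targets: Sequence[GazeTargetSketch],
                           max_angle_deg: float, max_distance: float) -> List[GazeTargetSketch]:
    """Keep targets within the allowed eye angle and distance, nearest first."""
    forward_length = math.hypot(*eye_forward)
    candidates = []
    for target in targets:
        offset = [t - e for t, e in zip(target.position, eye_position)]
        distance = math.hypot(*offset)
        if distance == 0.0 or distance > max_distance:
            continue
        cos_angle = sum(o * f for o, f in zip(offset, eye_forward)) / (distance * forward_length)
        # Clamp to guard against rounding slightly outside [-1, 1].
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
        if angle <= max_angle_deg:
            candidates.append((distance, target))
    candidates.sort(key=lambda pair: pair[0])
    return [target for _, target in candidates]
```

The first element of the result is the natural focus; when a closer valid target appears, it moves to the front of the list and the eyes switch to it.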

Hand

The hand models used here come from the Oculus Sample Framework (released under a BSD-3 license by Facebook Technologies, LLC and its affiliates).

The input actions driving the hand movement are collected locally and shared over Fusion to display hand movements on all clients.

Remotely, the hand movement is slightly discretized to reduce how often it changes.

The hand color matches the skin color of the avatar representation used.

AvatarRepresentation

Each avatar stores an avatarURL, shared through a Fusion [Networked] var in the UserInfo component, placed on the NetworkRig.

Upon change, this URL is parsed by the AvatarRepresentation component to determine whether it represents:

- a simplified avatar description URL (e.g. simpleavatar://?hairMesh=2&skinMat=0&clothMat=3&hairMat=0)

- a Ready Player Me model URL (e.g. https://d1a370nemizbjq.cloudfront.net/0922cf14-763d-4e48-aafc-4344d7b01c2c.glb)
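Telling the two URL kinds apart can be sketched with Python's standard urllib.parse module. The classification rule here (a simpleavatar scheme versus a path ending in .glb) and the function name are assumptions drawn from the two example URLs, not the AvatarRepresentation component's actual parsing code.

```python
from typing import Dict, Tuple
from urllib.parse import parse_qs, urlparse


def classify_avatar_url(url: str) -> Tuple[str, Dict[str, int]]:
    """Hypothetical helper: return the avatar kind and, for simple avatars, their settings."""
    parsed = urlparse(url)
    if parsed.scheme == "simpleavatar":
        # Each query parameter (hairMesh, skinMat, ...) is assumed to be an option index.
        settings = {key: int(values[0]) for key, values in parse_qs(parsed.query).items()}
        return "simple", settings
    if parsed.path.lower().endswith(".glb"):
        # Remote or %StreamingAssets% Ready Player Me model.
        return "readyplayerme", {}
    return "unknown", {}
```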

Simple Avatar

The simple avatar system offers a simple and inexpensive avatar model.

Mouth animation is based on volume detection, with no accurate lip synchronization.

Ready Player Me

The Ready Player Me avatar system can display any avatar provided by https://readyplayer.me.

The mouth animation provides lip synchronization, based on the Oculus Lip Sync library, released under the Oculus Audio SDK license (https://developer.oculus.com/licenses/audio-3.3/).

To optimize avatar downloading and loading, the avatar object for a given avatar URL can be obtained in several ways:

- If this URL has already been used for an active avatar, the existing avatar is cloned.

- If this URL is associated with a prefab, the prefab is spawned instead of downloading and parsing the glb file.

- If neither option applies, the glb file is downloaded and parsed. Note that a URL can point to a glb file in the StreamingAssets folder, to skip the download, by using the syntax %StreamingAssets%/Woman1.glb for the URL. If you do so, you should place the associated Woman1.json metadata file provided by Ready Player Me next to Woman1.glb (to download it, simply replace the .glb extension with .json in the original URL provided by Ready Player Me).
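The loading order above, plus the two URL rewrites it mentions, can be sketched like this. The function names, strategy labels and the dictionaries standing in for the avatar cache and prefab table are hypothetical; only the %StreamingAssets% token and the .glb to .json replacement come from the text.

```python
from typing import Mapping

STREAMING_ASSETS_TOKEN = "%StreamingAssets%"


def choose_loading_strategy(url: str, active_avatars: Mapping[str, object],
                            prefabs: Mapping[str, object]) -> str:
    """Pick the cheapest way to obtain an avatar for `url`, in the documented order."""
    if url in active_avatars:
        return "clone_existing_avatar"
    if url in prefabs:
        return "spawn_prefab"
    if url.startswith(STREAMING_ASSETS_TOKEN):
        return "parse_local_glb"  # no download needed
    return "download_and_parse_glb"


def resolve_streaming_assets(url: str, streaming_assets_path: str) -> str:
    """Replace the %StreamingAssets% token with the actual folder path."""
    return url.replace(STREAMING_ASSETS_TOKEN, streaming_assets_path, 1)


def metadata_url(glb_url: str) -> str:
    """Ready Player Me metadata is found at the same URL with .json instead of .glb."""
    if not glb_url.endswith(".glb"):
        raise ValueError("expected a .glb URL")
    return glb_url[: -len(".glb")] + ".json"
```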

LOD

In sessions with a large number of users (or bots), avatars can take a heavy toll on performance.

To avoid this, the avatar representation manages several LODs:

- The regular avatar (simple avatar or Ready Player Me)

- A low poly version of the simple avatar, with hair, skin and cloth colors matching those of the regular avatar

- A billboard always facing the user camera.
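Choosing among these three LODs is commonly driven by the distance to the viewer. The Python sketch below assumes distance thresholds (5 and 15 units are arbitrary placeholders, not the sample's settings) and shows how a billboard's yaw can be computed so that it faces the camera, with Y as the up axis and +Z as forward.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def select_avatar_lod(distance: float, regular_max: float = 5.0,
                      low_poly_max: float = 15.0) -> str:
    """Hypothetical LOD rule: closer avatars get more detail."""
    if distance <= regular_max:
        return "regular"
    if distance <= low_poly_max:
        return "low_poly"
    return "billboard"


def billboard_yaw_degrees(billboard_position: Vec3, camera_position: Vec3) -> float:
    """Yaw around the vertical Y axis that turns the billboard's +Z axis toward the camera."""
    dx = camera_position[0] - billboard_position[0]
    dz = camera_position[2] - billboard_position[2]
    return math.degrees(math.atan2(dx, dz))
```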

Chat Bubble

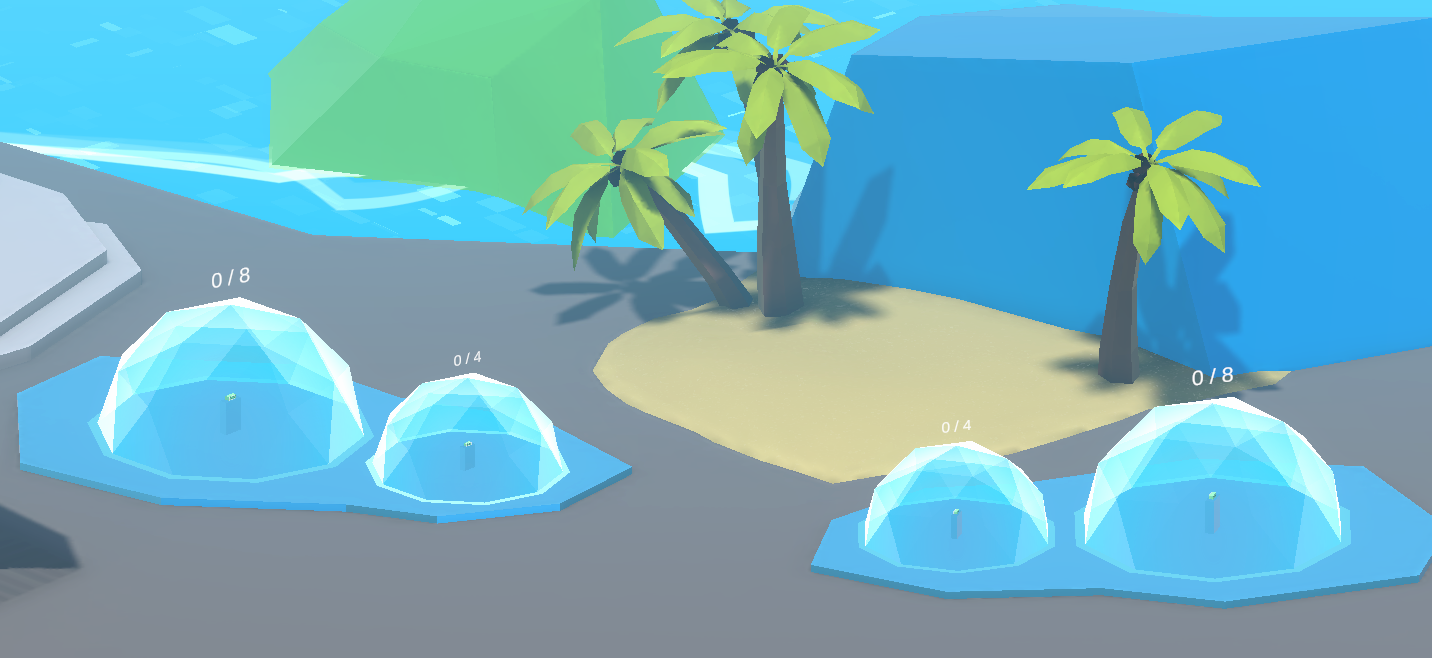

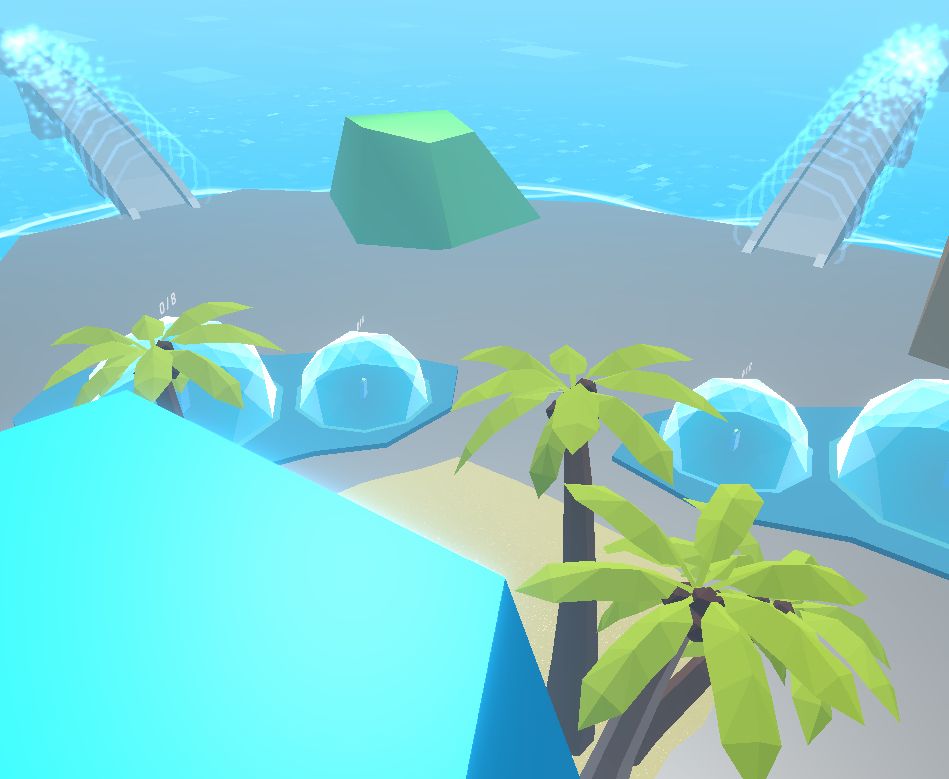

This sample demonstrates chat bubbles. The scene includes 4 static chat bubbles. The application can also create a dynamic chat bubble, when 2 users come close to each other.

Static Chat Bubble

By default, in the expo scene, users don’t hear each other.

But when they enter the same static chat bubble, they can talk to each other with spatialized sound over Photon Voice.

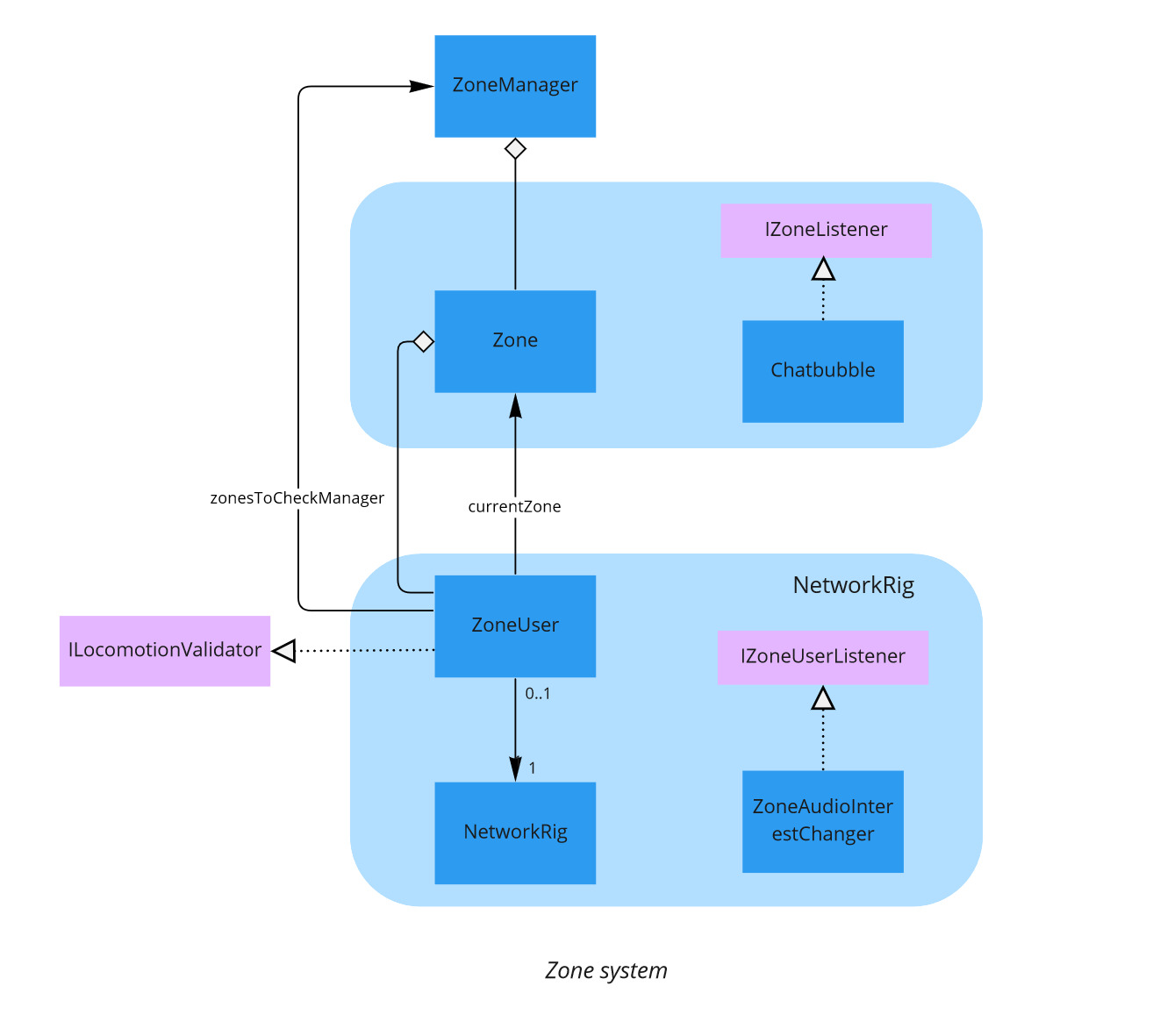

In the sample, a zone system keeps track of ZoneUser components whose NetworkRig is close to a Zone. When this occurs, the ZoneUser enters the zone and triggers the various Zone and ZoneUser listeners.

A Zone can have a Photon Voice interest group associated with it, so the ZoneAudioInterestChanger component can change the audio listening and recording group of the local user when they enter or exit a zone. It also enables and disables the audio source of a user's rig, depending on whether the local user should hear them.

The static chat bubbles are automatically locked when they reach their maximum number of users, but it is also possible to lock them earlier by touching the lock button available in every static chat bubble.
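The zone rules (entry refused once the zone is full or manually locked, interest group switching, and hearing only users in the same zone) can be sketched in Python. The ZoneSketch class, its fields and the convention that a user outside any zone uses no voice group at all are assumptions for illustration; they are not the Zone or ZoneAudioInterestChanger APIs.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class ZoneSketch:
    interest_group: int            # Photon Voice interest group associated with the zone
    max_users: int
    manually_locked: bool = False  # set by the lock button
    users: Set[str] = field(default_factory=set)

    @property
    def is_locked(self) -> bool:
        # A full zone behaves as locked, even if nobody pressed the lock button.
        return self.manually_locked or len(self.users) >= self.max_users

    def try_enter(self, user_id: str) -> bool:
        if user_id in self.users:
            return True
        if self.is_locked:
            return False
        self.users.add(user_id)
        return True

    def exit(self, user_id: str) -> None:
        self.users.discard(user_id)


def voice_group_for(zone: Optional[ZoneSketch]) -> Optional[int]:
    """Group used for both listening and recording; None means talking to nobody."""
    return zone.interest_group if zone is not None else None


def should_hear(local_zone: Optional[ZoneSketch], remote_zone: Optional[ZoneSketch]) -> bool:
    # Remote audio sources are only enabled for users sharing the local user's zone.
    return local_zone is not None and local_zone is remote_zone
```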

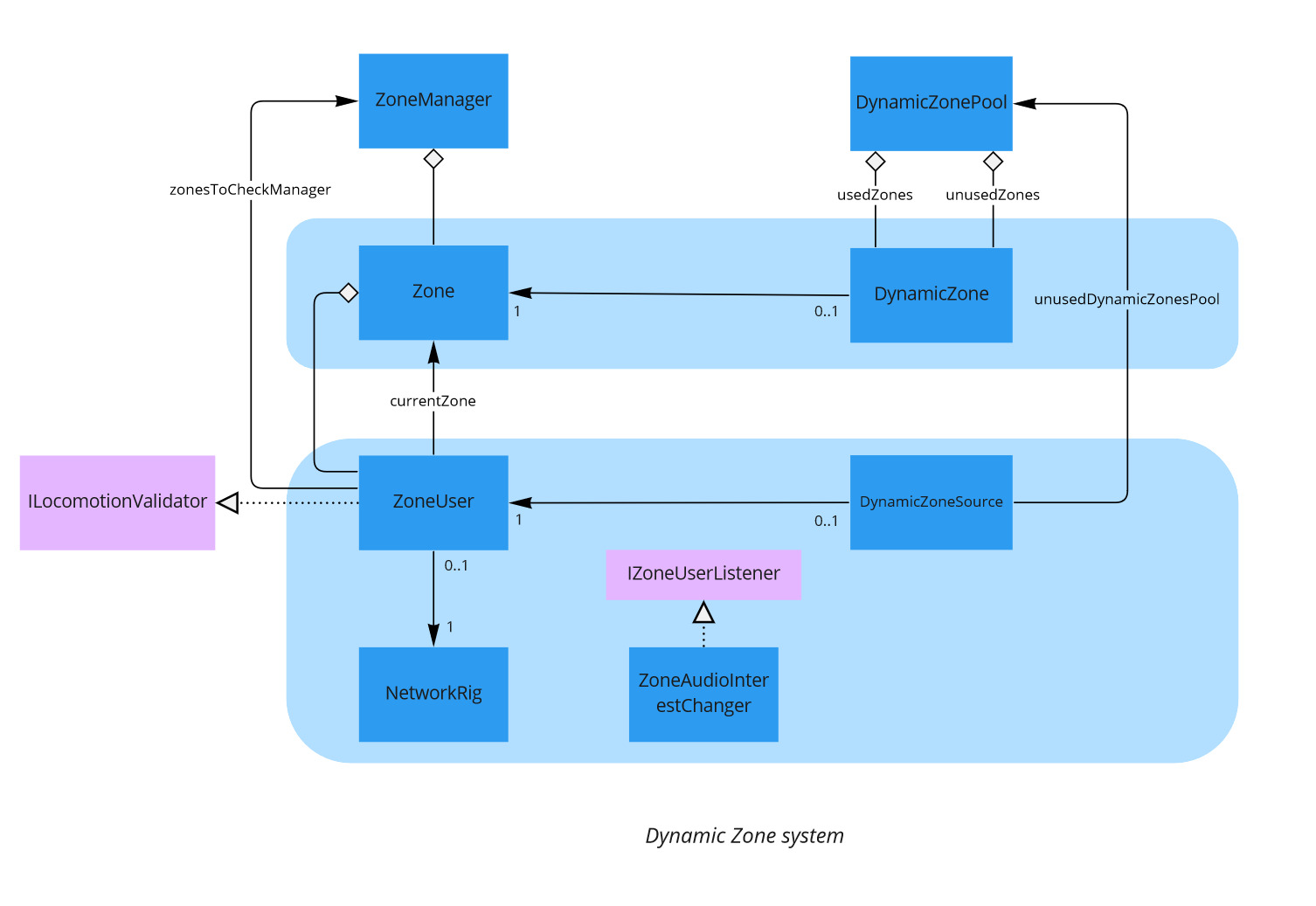

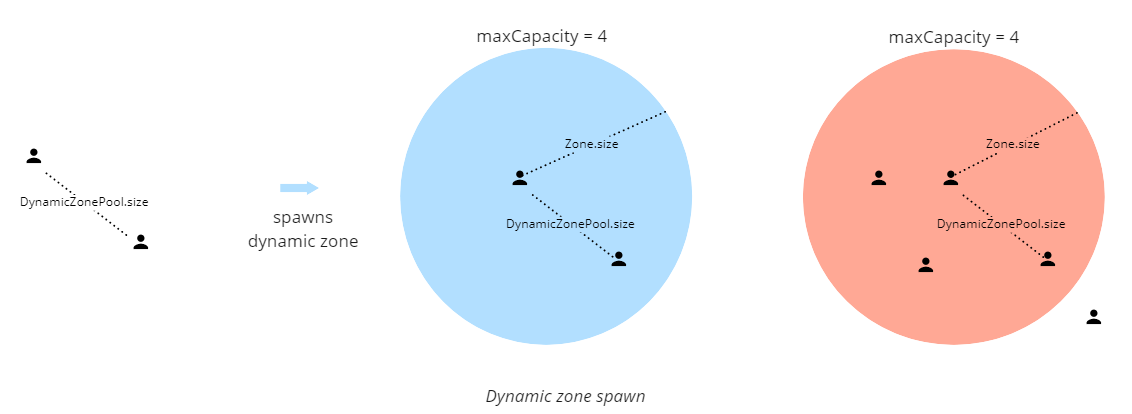

Dynamic Chat Bubble

The prefab representing users contains a DynamicZoneSource component.

This component registers with a DynamicZonePool component, so that it can then check whether another existing DynamicZoneSource is in proximity, as defined by DynamicZonePool.size. In that case, the DynamicZonePool can provide a zone, spawned (or reused), to be used for both users.

Other users can join them later, until the zone reaches its maximum capacity.
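The proximity check and zone reuse can be sketched as below. The class and method names are hypothetical; size is treated as the proximity distance, as the text suggests, and choosing the slower user as the bubble center follows the overview's description of dynamic chat bubbles.

```python
import math
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


class DynamicZonePoolSketch:
    def __init__(self, size: float):
        self.size = size                    # proximity distance triggering a bubble
        self.sources: Dict[str, Vec3] = {}  # registered DynamicZoneSource positions
        self.free_zones: List[str] = []     # released zones kept for reuse
        self.created_zones = 0

    def register(self, source_id: str, position: Vec3) -> None:
        self.sources[source_id] = position

    def find_close_source(self, source_id: str) -> Optional[str]:
        position = self.sources[source_id]
        for other_id, other_position in self.sources.items():
            if other_id != source_id and math.dist(position, other_position) <= self.size:
                return other_id
        return None

    def acquire_zone(self) -> str:
        # Reuse a released zone when possible instead of spawning a new one.
        if self.free_zones:
            return self.free_zones.pop()
        self.created_zones += 1
        return f"dynamic-zone-{self.created_zones}"

    def release_zone(self, zone_id: str) -> None:
        self.free_zones.append(zone_id)


def bubble_center(speeds: Dict[str, float], first: str, second: str) -> str:
    """The bubble is created around the user with the lower velocity."""
    return first if speeds[first] <= speeds[second] else second
```

Reusing released zones avoids repeatedly spawning and despawning network objects as users drift in and out of proximity.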

Bots

To demonstrate how an expo full of people would be supported by the sample, it is possible to create bots in addition to regular users.

Bots are regular networked prefabs, whose voice has been disabled, and which are driven by a navigation mesh instead of user inputs.

The Bot class also uses the locomotion validation system, and is thus aware of chat bubbles. Besides, as bots can’t speak, they are forbidden from entering any zone, locked or not.

Drawing

3D drawing

The expo scene contains 3D pens that can create 3D drawings: a group of line renderers with a common handle that can be grabbed and moved.

The 3D pen holds a Drawer component, which spawns a drawing prefab holding a Draw component. The Draw component ensures that all the drawn points are synchronized over Fusion, through a [Networked] variable. As this variable cannot hold an infinite number of points, the drawing is split into several parts when needed; the secondary Draws follow the first Draw when any user moves it, so that they appear as a single drawing.
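Splitting a stroke into parts that each fit a fixed-capacity networked buffer can be sketched as follows. The per-part capacity and the choice to repeat the last point of each part at the start of the next (so that the rendered lines join without a gap) are assumptions, not details confirmed for the Draw component.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def split_drawing(points: Sequence[Point], max_points_per_part: int) -> List[List[Point]]:
    """Split `points` into parts of at most `max_points_per_part` points."""
    if max_points_per_part < 2:
        raise ValueError("each part needs room for at least two points")
    if not points:
        return []
    parts: List[List[Point]] = []
    start = 0
    while True:
        parts.append(list(points[start:start + max_points_per_part]))
        if start + max_points_per_part >= len(points):
            return parts
        # The next part starts on this part's last point, so consecutive lines connect.
        start += max_points_per_part - 1
```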

2D drawing

Users can write on the board in the expo scene with the pens around it, and the resulting drawings can then be moved on the board with a handle similar to the 3D pen drawings' handles.

These are in fact special 3D drawings, on a layer invisible to the player camera but visible to a camera associated with the board. This camera then renders the drawing onto a render texture that is displayed on the board.

For performance purposes, this camera is only enabled when the pens near the board are used, or when the drawings created with them are moved.

Scene switching

The player can move to a new world by using bridges located in the scene. Each bridge triggers a specific scene loading.

To do so, each bridge has a NewSceneLoader class responsible for loading a new scene when the player collides with its box collider.

Please note that, before loading the new scene, the NewSceneLoader shuts down the Runner, as it cannot be reused in the new scene: each scene has its own Runner.

For example, the main scene called ExpoRoomMainWithBridge has two bridges. A first bridge will load the ExpoRoomNorth scene while the other bridge will trigger the ExpoRoomSecondScene loading.

There are therefore three possibilities regarding the player spawn position:

- a random position close to the palm trees when the player first joins the game,

- a position near the first bridge when the player comes back from the ExpoRoomNorth scene,

- a position near the second bridge when the player comes back from the ExpoRoomSecondScene scene.

To determine the correct spawn position, a SpawnManager is required in each scene.

It changes the default behavior of the RandomizeStartPosition component, to define where the player should appear when they come from another scene (or in case of reconnection).

Another point to keep in mind when dealing with multiple scenes is that the same room name cannot be used for scenes that are not identical.
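The spawn rule can be sketched as a lookup keyed by the scene the player comes from, with a random position as the fallback. The scene names come from the text; the coordinates, the radius and the function signature are hypothetical, and this is not the SpawnManager or RandomizeStartPosition API.

```python
import math
import random
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical spawn points near each bridge, keyed by the scene the player comes from.
BRIDGE_SPAWNS: Dict[str, Vec3] = {
    "ExpoRoomNorth": (0.0, 0.0, 20.0),
    "ExpoRoomSecondScene": (15.0, 0.0, -5.0),
}


def choose_spawn_position(previous_scene: Optional[str], palm_trees_center: Vec3,
                          radius: float, rng: random.Random) -> Vec3:
    if previous_scene in BRIDGE_SPAWNS:
        # Coming back from another scene: appear near the matching bridge.
        return BRIDGE_SPAWNS[previous_scene]
    # First join: pick a uniformly distributed point in a disc around the palm trees.
    angle = rng.uniform(0.0, 2.0 * math.pi)
    distance = radius * math.sqrt(rng.random())
    x, y, z = palm_trees_center
    return (x + distance * math.cos(angle), y, z + distance * math.sin(angle))
```

Passing the random generator explicitly keeps the fallback reproducible in tests.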

In other words, the room name must be different for each scene. Instead of setting this name statically, we use the Space component (located on the Runner) to automatically set a room name based on several parameters including the scene name.
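Deriving the room name from the scene name can be sketched as below. Which other parameters the Space component combines is not detailed here, so the base name, the optional group suffix and the separator are assumptions.

```python
from typing import Optional


def build_room_name(base_name: str, scene_name: str, group: Optional[str] = None) -> str:
    """Hypothetical room naming: different scenes never share a Fusion room."""
    parts = [base_name, scene_name]
    if group:
        parts.append(group)
    return "-".join(parts)
```

Because the scene name is part of the key, a player crossing a bridge always lands in a room dedicated to the destination scene.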

Third party components

- Oculus Integration

- Oculus Lipsync

- Oculus Sample Framework hands

- Ready Player Me

- Sounds

Known Issues

- If a user connects while the authority of a drawing is disconnecting, the new user won’t receive the drawing data.

Changelog

Fusion Expo 0.0.19

- Add new scenes & the capability to switch from one scene to another

- Avatar selection UI follows the player's head position

- The player can specify their nickname

- Various improvements and bug fixes

Fusion Expo 0.0.18

- Fix error message display when a network issue occurs

Fusion Expo 0.0.17

- Update to Fusion SDK 1.1.3 F Build 599

Fusion Expo 0.0.16

- Use the core VR implementation described in the VR Shared technical sample

- Use of the local grabbing implementation described in the VR Shared Local rig grabbing sample

- Use of the industries components, shared with the Stage sample

- Remove XR Interaction Toolkit dependency