Stage

Overview

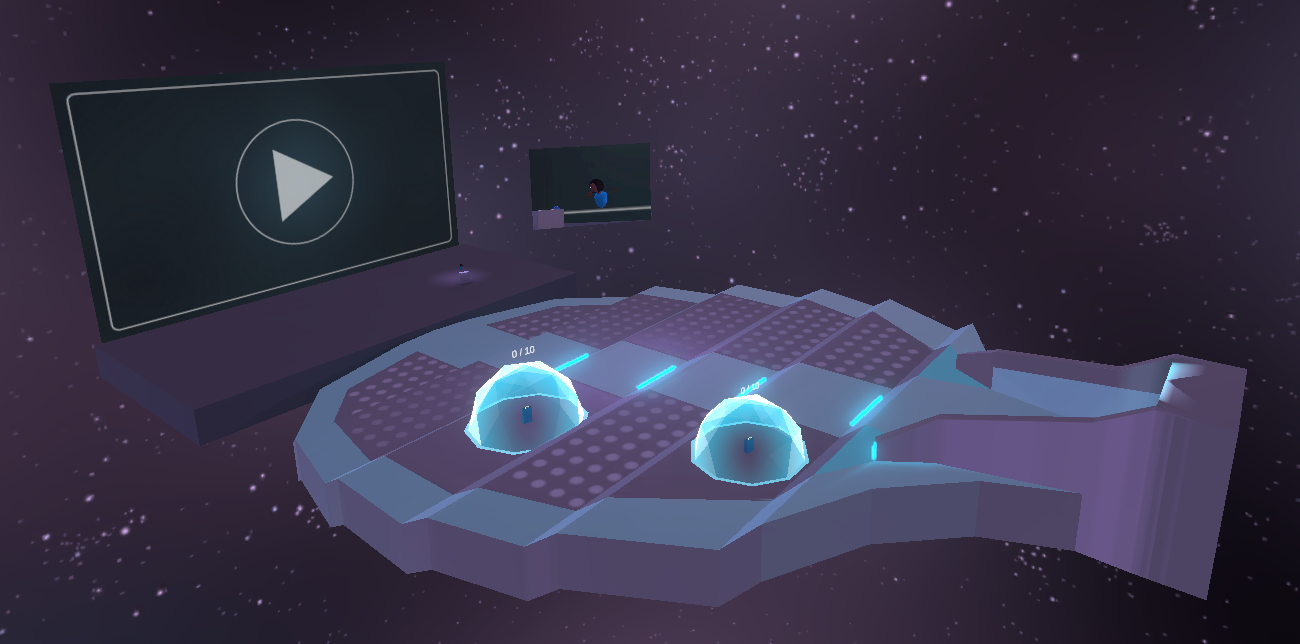

The Fusion Stage sample demonstrates one approach to developing a social application for up to 200 players with Fusion.

Each player is represented by an avatar and, thanks to the Photon Voice SDK, can talk to other players located in the same chat bubble.

One user can also take the stage and present content.

Some of the highlights of this sample are:

- First, the player customizes their avatar on the avatar selection screen.

- Then, they can join the Stage scene. If the sample is launched on a PC or Mac, the player can choose between Desktop mode (keyboard and mouse) and VR mode (Meta Quest headset).

- Players can talk to each other if they are located in the same static chat bubble. In each static chat bubble, a lock button is available to prevent new players from entering.

- One user can take the stage and thereby speak to every user in the room. This user can then increase the number of users allowed to join them on the stage.

- The presenter's voice is no longer spatialized, so everyone hears them as if through speakers.

- From the stage, the presenter can share content, such as a video whose playback is synchronized among all users.

- A stage camera system follows the presenter to display them on a dedicated screen.

- Once seated, a user can ask to speak with the presenter on stage. If the presenter accepts, everyone hears the conversation between the presenter and the user until either of them decides to stop it.

- People in the audience can also express their opinion on the presentation by sending emoticons.

More technical details are provided directly in the code comments.

Technical Info

- This sample uses the Shared Mode topology.

- Builds are available for PC, Mac and Meta Quest.

- The project has been developed with Unity 2021.3.10f1, Fusion 1.1.3f 599 and Photon Voice 2.31.

- Two avatar solutions are supported (simple home-made avatars and Ready Player Me 1.9.0 avatars).

Before you start

To run the sample:

- Create a Fusion AppId in the PhotonEngine Dashboard and paste it into the App Id Fusion field in Real Time Settings (reachable from the Fusion menu).

- Create a Voice AppId in the PhotonEngine Dashboard and paste it into the App Id Voice field in Real Time Settings.

- Then load the AvatarSelection scene and press Play.

Download

| Version | Release Date | Download |
|---|---|---|
| 0.0.19 | Feb 02, 2023 | Fusion Stage 0.0.19 Build 92 |

Handling Input

Desktop

Keyboard

- Move: WASD (or ZQSD on AZERTY keyboards) to walk

- Rotate: QE (or AE) to rotate

Mouse

- Move: press the left mouse button to display a pointer. On release, you teleport to the pointed location if it is a valid target.

- Rotate: keep the right mouse button pressed and move the mouse to rotate the point of view.

- Move & rotate: keep both the left and right buttons pressed to move forward. You can still move the mouse to rotate.

- Grab: hover the mouse over an object and grab it with the left mouse button.

Meta Quest

- Teleport: press A, B, X, Y, or any stick to display a pointer. On release, you teleport to the pointed location if it is a valid target.

- Touch (e.g. chat bubble lock buttons): simply put your hand over a button to toggle it.

- Grab: put your hand over an object and grab it using the controller grab button.

Folder Structure

The main folder /Stage contains all elements specific to this sample.

The /IndustriesComponents folder contains components shared with the Fusion Expo sample.

The /Photon folder contains the Fusion and Photon Voice SDK.

The /Photon/FusionXRShared folder contains the rig and grabbing logic from the VR Shared sample, packaged as a lightweight FusionXRShared SDK that can be reused in other projects.

The /Photon/FusionXRShared/Extensions folder contains extensions for FusionXRShared, for reusable features like synchronized rays, locomotion validation, ...

The /Plugins folder contains the Ready Player Me SDK.

The /StreamingAssets folder contains prebuilt Ready Player Me avatars. It can safely be removed if you don't want to use these prebuilt avatars.

The /XR folder contains configuration files for virtual reality.

Architecture overview

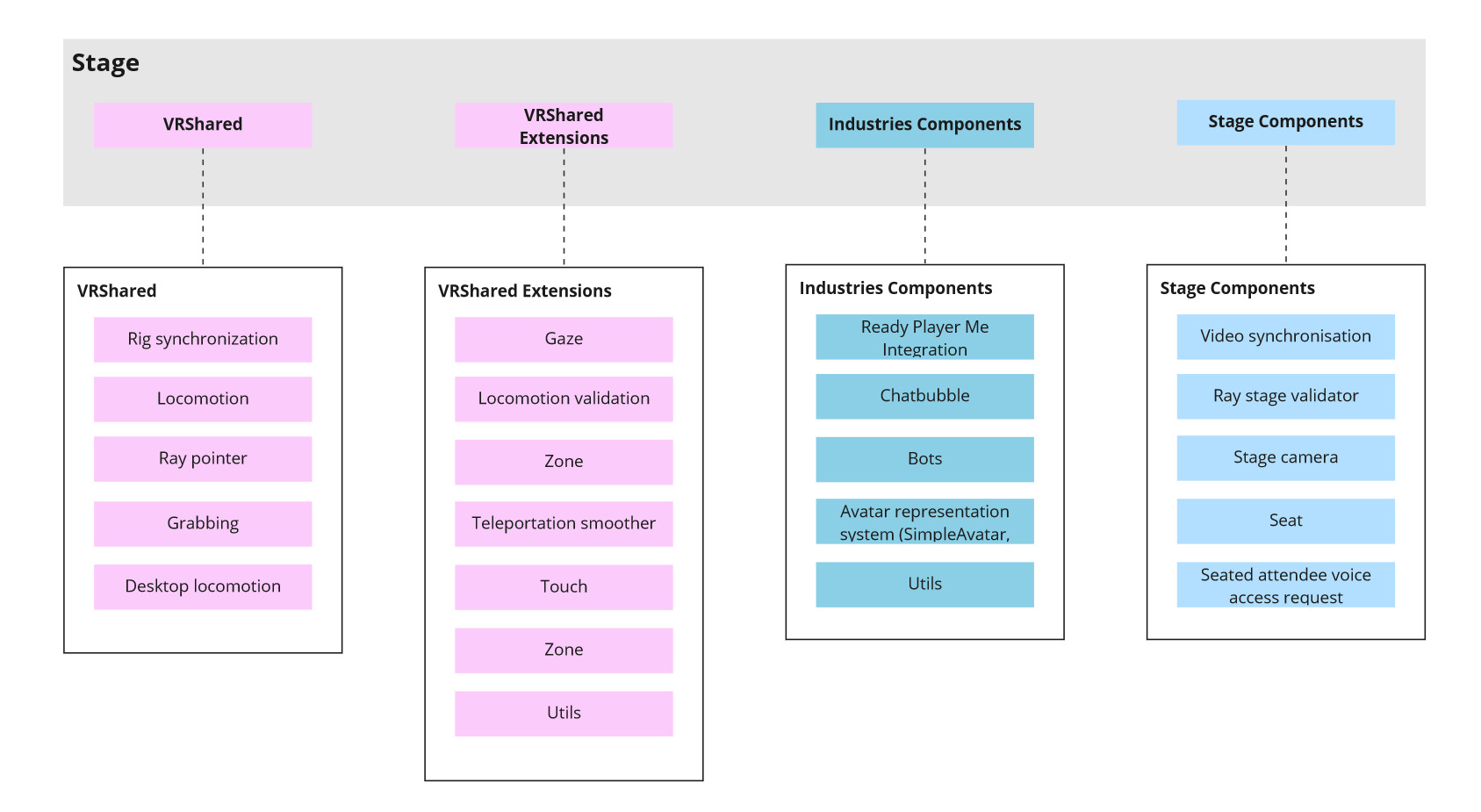

The Fusion Stage sample relies on the same code base as the one described on the VR Shared page, notably for rig synchronization.

The grabbing system used here is the alternative "local rig grabbing" implementation described in the VR Shared - Local rig grabbing page.

On top of this base, the sample, like the Expo sample, contains extensions to FusionXRShared that handle reusable features such as synchronized rays, locomotion validation, touching, teleportation smoothing or a gazing system.

Besides, the Fusion Stage sample shares a large part of the Fusion Expo sample code, including the chat bubbles.

Stage

The stage uses a Zone, like the ones used for the chat bubbles (see the Fusion Expo sample).

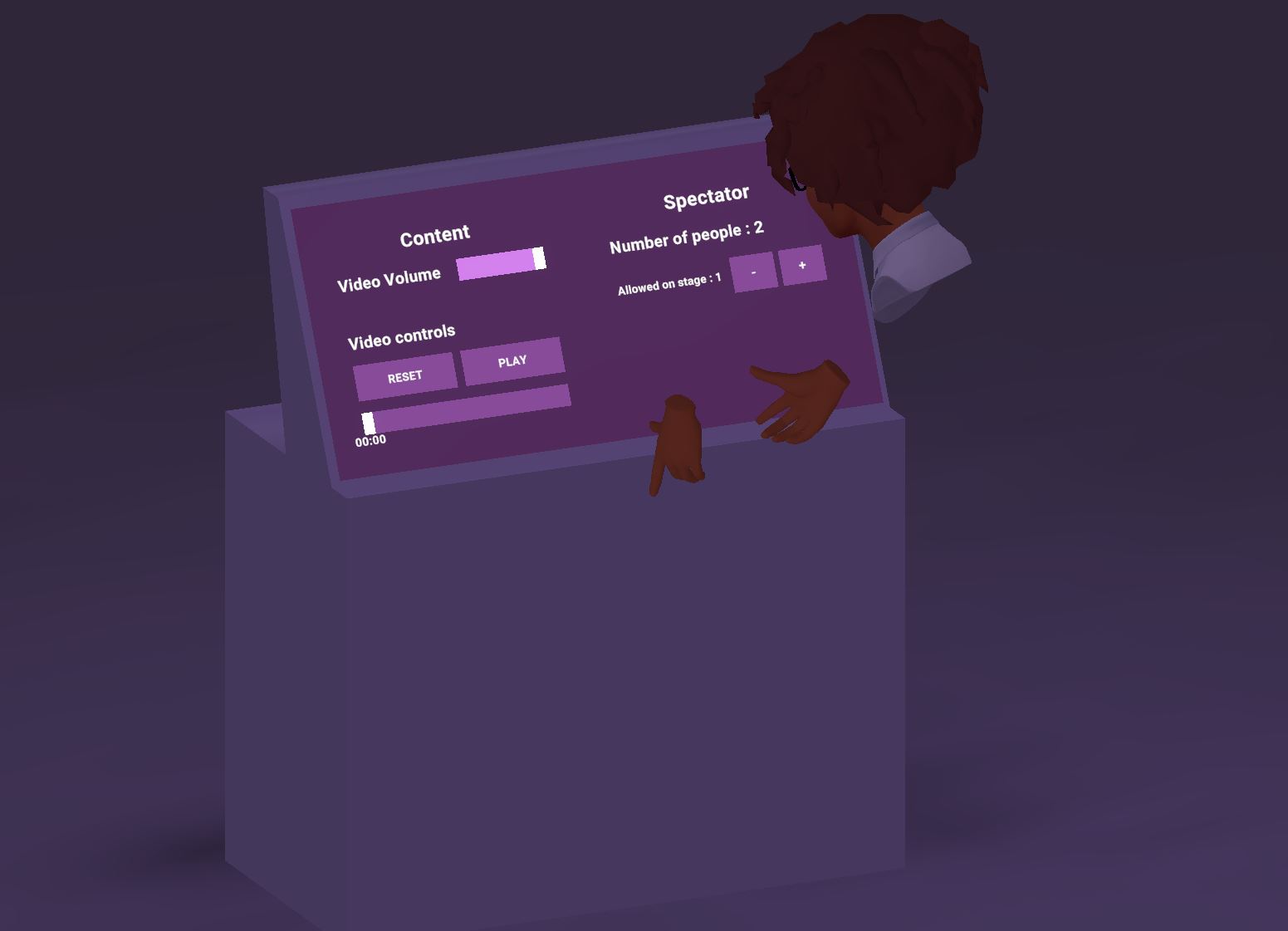

By default, this zone's capacity is limited to 1 user, which prevents anyone else from coming onto the stage while a presenter is already there. The presenter can change this value with the stage console.

To make sure the user on stage is displayed properly for all users, StageZone.ForceUserLOD() also disables the LODGroup of any user entering the stage, guaranteeing that no LOD switch is triggered for them.
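The admission rule above can be modeled in a few lines. This is a minimal, language-neutral sketch in Python rather than the sample's Unity C#; StageZoneModel and its methods are hypothetical names, not the sample's Zone API, and releasing the forced LOD when a user leaves is an assumption.

```python
class StageZoneModel:
    """Hypothetical model of a capacity-limited stage zone (not the sample's Zone class)."""

    def __init__(self, capacity: int = 1) -> None:
        self.capacity = capacity               # default: a single presenter
        self.users: list[str] = []
        self.lod_forced: set[str] = set()      # users whose LOD switching is disabled

    def set_capacity(self, capacity: int) -> None:
        # The presenter changes this value from the stage console.
        self.capacity = max(1, capacity)

    def try_enter(self, user: str) -> bool:
        if user in self.users:
            return True
        if len(self.users) >= self.capacity:
            return False                       # stage full: entry refused
        self.users.append(user)
        self.lod_forced.add(user)              # same idea as StageZone.ForceUserLOD()
        return True

    def leave(self, user: str) -> None:
        if user in self.users:
            self.users.remove(user)
            self.lod_forced.discard(user)      # assumption: LOD forcing is released on exit
```

For example, with the default capacity a second user is refused until the presenter raises the limit: `try_enter("bob")` returns False, then succeeds after `set_capacity(2)`.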

Voice

The audio group associated with the stage zone is the special audio group "0", which by default can be heard by any player.

The ZoneAudioInterestChanger, which handles audio group switching when entering a zone, also determines whether this special global group is used. If Zone.IsGlobalAudioGroup() returns true, ZoneAudioInterestChanger.ManageAudioSources():

- forces the audio source of any user entering this zone to be enabled,

- removes the audio source spatialization, so that this user's voice is audible from every corner of the room.
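The global-group branch described above amounts to a small decision function. The following Python sketch is a hypothetical model, not the body of ManageAudioSources(). Representing "removing spatialization" as a spatial blend of 0.0 is an assumption, chosen because Unity's AudioSource exposes a spatialBlend property where 0 means fully 2D. The sample may implement it differently.

```python
from dataclasses import dataclass

GLOBAL_AUDIO_GROUP = 0  # the special group "0", audible by every player


@dataclass
class AudioSettings:
    enabled: bool
    spatial_blend: float  # 1.0 = fully spatialized (3D), 0.0 = non-spatialized (2D)
    group: int


def audio_settings_for_zone(zone_group: int, current: AudioSettings) -> AudioSettings:
    """Hypothetical model of the audio settings applied when a user enters a zone."""
    if zone_group == GLOBAL_AUDIO_GROUP:
        # Stage: force the source on and drop spatialization ("as through speakers").
        return AudioSettings(enabled=True, spatial_blend=0.0, group=GLOBAL_AUDIO_GROUP)
    # Regular chat bubble: only the interest group changes.
    return AudioSettings(current.enabled, current.spatial_blend, zone_group)
```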

Presenter camera

A presenter camera follows the user on the stage. To do so, the StageZone class registers as an IZoneListener for the stage zone and triggers camera tracking and recording when a user is on the stage.

The StageZone can handle several cameras. It triggers the tracking shot (traveling) that follows the presenter for each one, but enables actual rendering only for the most relevant one. These cameras render fewer frames per second than the main view; this throttling is handled in the StageCamera class, along with the camera movements. The cameras are positioned relative to a camera rig Transform and move along the x axis of this transform.
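The three camera behaviors can be modeled on a single axis:

- sliding along the rig's x axis toward the presenter,
- enabling rendering on one camera only,
- rendering every n-th frame.

This Python sketch is hypothetical. StageCameraModel, select_active and should_render are illustrative names. Treating "most relevant" as "closest to the presenter" is an assumption, as are the travel limits and the speed-capped movement.

```python
from dataclasses import dataclass


@dataclass
class StageCameraModel:
    """Hypothetical stand-in for StageCamera: a camera sliding along its rig's x axis."""
    rig_origin_x: float      # rig Transform position, measured along the rig x axis
    min_x: float             # travel limits in rig-local units (assumption)
    max_x: float
    local_x: float = 0.0
    rendering: bool = False

    def follow(self, presenter_x: float, speed: float, dt: float) -> None:
        # Target: the presenter position projected on the rig x axis, clamped to the rail.
        target = min(max(presenter_x - self.rig_origin_x, self.min_x), self.max_x)
        step = speed * dt
        self.local_x += max(-step, min(step, target - self.local_x))


def select_active(cameras, presenter_x):
    """Enable rendering on one camera only (assumed criterion: closest to the presenter)."""
    best = min(cameras, key=lambda c: abs(c.rig_origin_x + c.local_x - presenter_x))
    for cam in cameras:
        cam.rendering = cam is best
    return best


def should_render(frame_index: int, app_fps: int, camera_fps: int) -> bool:
    """Render every n-th frame so the camera runs at roughly camera_fps."""
    interval = max(1, app_fps // camera_fps)
    return frame_index % interval == 0
```

Capping the movement per frame by `speed * dt` gives the smooth traveling effect instead of snapping the camera onto the presenter.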

Seat

To decrease the amount of data shared by a user, it is possible to refresh their avatar synchronization less often while they are seated.

To do so, StageHardwareRig (a HardwareRig subclass) can receive seating requests, triggered by the SeatDetector class, which detects when the user teleports onto a seat. While a user is seated, their input is updated less often. As a result, StageNetworkRig (a NetworkRig subclass) updates the NetworkTransform position less often, so less data is sent.

To disable this optional optimization, set freezeForRemoteClientsWhenSeated to false on the StageHardwareRig.
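The effect of this optimization on the number of pose updates can be sketched as follows. The Python model below is hypothetical. The class name and the "one update every N ticks" rule are assumptions, and freeze_for_remote_clients_when_seated only mirrors the role of the freezeForRemoteClientsWhenSeated field.

```python
class SeatedRigThrottleModel:
    """Hypothetical model: fewer networked pose refreshes while the user is seated."""

    def __init__(self, seated_interval: int = 10,
                 freeze_for_remote_clients_when_seated: bool = True) -> None:
        self.seated_interval = seated_interval  # assumed: 1 refresh every N ticks when seated
        self.freeze_for_remote_clients_when_seated = freeze_for_remote_clients_when_seated
        self.seated = False

    def on_seat_request(self, seated: bool) -> None:
        # In the sample, SeatDetector triggers the request when the user teleports onto a seat.
        self.seated = seated

    def tick(self, tick_index: int) -> bool:
        """Return True if the networked pose would be refreshed on this tick."""
        throttled = self.seated and self.freeze_for_remote_clients_when_seated
        return not throttled or tick_index % self.seated_interval == 0
```

With an interval of 10, a seated user sends 10 pose updates per 100 ticks instead of 100. Setting the flag to False restores the full rate.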

Attendee stage voice access

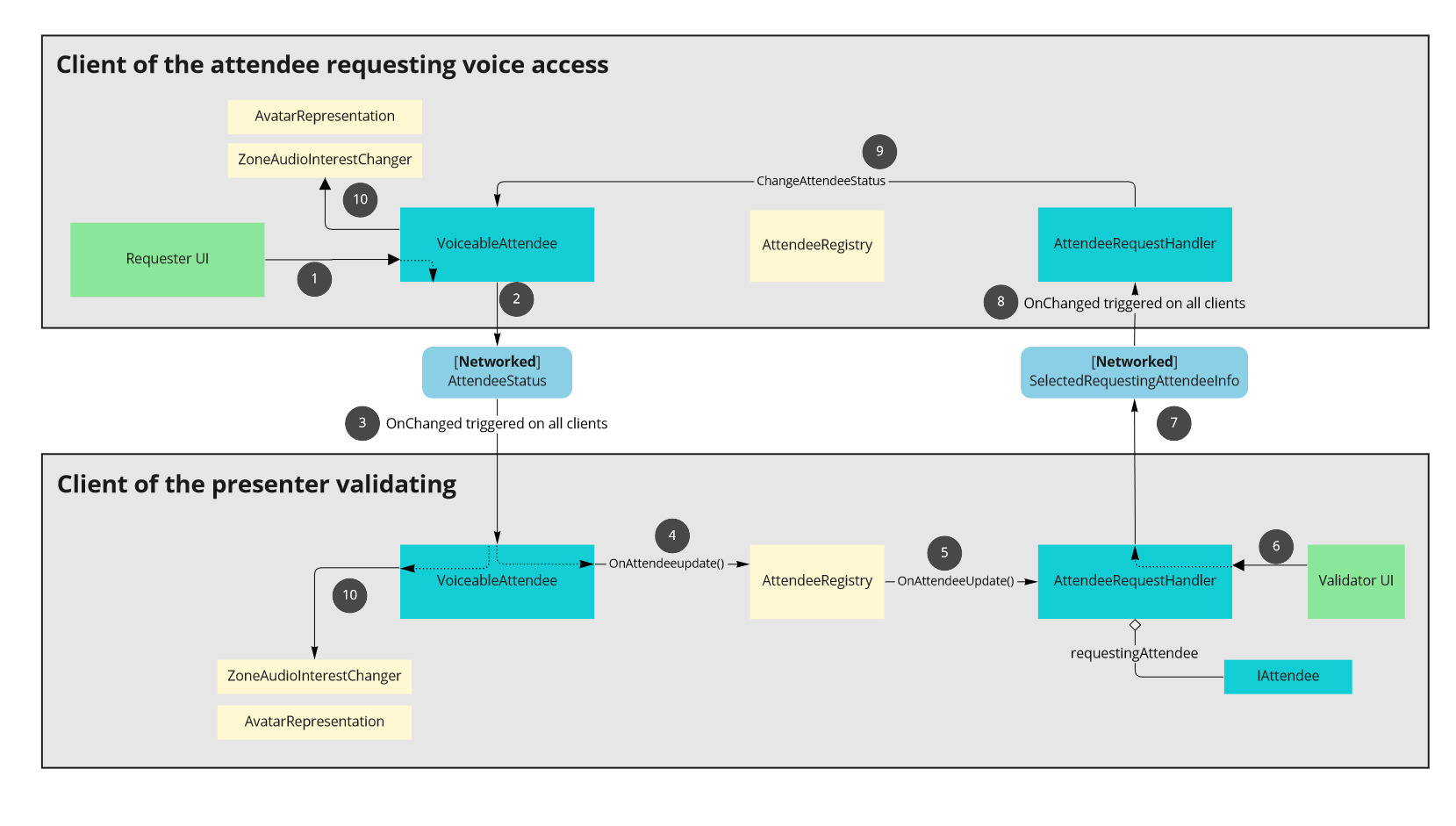

Conference attendees can request access to the stage microphone. The presenter on stage can accept or reject their request.

The networked rig of each user contains a VoiceableAttendee component, which hosts a [Networked] variable, AttendeeStatus. This status describes whether:

- no request has been made yet,

- the spectator is requesting voice access to the stage,

- the request has been accepted,

- the request has been accepted but the spectator is momentarily muted,

- or the request has been rejected.
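The five states listed above map naturally onto an enumeration. In this Python sketch, the member names and the allowed transitions are hypothetical. The source lists the states but not which transitions the sample accepts.

```python
from enum import Enum


class AttendeeStatus(Enum):
    """Hypothetical names for the five states of the attendee status."""
    NO_REQUEST = "no_request"
    REQUESTING = "requesting"
    ACCEPTED = "accepted"              # voiced spectator
    ACCEPTED_MUTED = "accepted_muted"  # accepted, momentarily muted
    REJECTED = "rejected"


S = AttendeeStatus
# Assumed transition table; the sample may allow other transitions.
ALLOWED = {
    S.NO_REQUEST: {S.REQUESTING},
    S.REQUESTING: {S.ACCEPTED, S.REJECTED, S.NO_REQUEST},
    S.ACCEPTED: {S.ACCEPTED_MUTED, S.NO_REQUEST},
    S.ACCEPTED_MUTED: {S.ACCEPTED, S.NO_REQUEST},
    S.REJECTED: {S.REQUESTING, S.NO_REQUEST},
}


def transition(current: AttendeeStatus, new: AttendeeStatus) -> AttendeeStatus:
    if new not in ALLOWED[current]:
        raise ValueError(f"{current.name} -> {new.name} is not allowed")
    return new


def is_audible(status: AttendeeStatus) -> bool:
    # Only an accepted, unmuted spectator is heard by everyone.
    return status is AttendeeStatus.ACCEPTED
```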

When a user requests voice access through their UI ((1) in the diagram above), their status is updated (2), and the networked variable synchronizes it to all users (3). On all clients, this triggers a change callback (4) that notifies the AttendeeRegistry component, which stores all the attendees and broadcasts the change to all its listeners (5).

This way, the AttendeeRequestHandler, associated with the desk on the stage, can store the list of requesting attendees and update its UI accordingly. A [Networked] variable, SelectedRequestingAttendeeInfo, holds information about the attendee selected in the UI, so that the UI is synchronized on every client.
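Steps (4) and (5) follow a classic observer pattern. In this Python sketch, AttendeeRegistryModel and RequestHandlerModel are hypothetical stand-ins for AttendeeRegistry and AttendeeRequestHandler. Statuses are plain strings so the block stands alone.

```python
from typing import Callable, Dict, List

Listener = Callable[[str, str], None]  # (attendee_id, new_status)


class AttendeeRegistryModel:
    """Hypothetical registry relaying attendee status changes to its listeners."""

    def __init__(self) -> None:
        self.statuses: Dict[str, str] = {}
        self.listeners: List[Listener] = []

    def add_listener(self, listener: Listener) -> None:
        self.listeners.append(listener)

    def on_status_changed(self, attendee_id: str, status: str) -> None:
        # Called from each attendee's change callback (4), then broadcast (5).
        self.statuses[attendee_id] = status
        for listener in self.listeners:
            listener(attendee_id, status)


class RequestHandlerModel:
    """Keeps the list of requesting attendees, like the desk's request handler."""

    def __init__(self) -> None:
        self.requesting: List[str] = []

    def __call__(self, attendee_id: str, status: str) -> None:
        if status == "requesting":
            if attendee_id not in self.requesting:
                self.requesting.append(attendee_id)
        elif attendee_id in self.requesting:
            self.requesting.remove(attendee_id)
```

Because every client runs the same callbacks on the same synchronized data, each client's handler builds the same list without any extra messages.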

When the presenter validates a voice request (6), the attendee status stored in SelectedRequestingAttendeeInfo is changed. The presenter cannot change the VoiceableAttendee AttendeeStatus directly: they are probably not the requester, and so have no state authority over the requester's rig networked object. However, since SelectedRequestingAttendeeInfo is a [Networked] variable, changing it locally on the presenter's client (7) triggers an update on all clients (8). During this update, each client checks whether it is the state authority of the VoiceableAttendee networked object and, if so, changes the attendee status (9).
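This "relay through a shared variable, apply only where you have authority" pattern can be simulated with a toy replication layer. The sketch is hypothetical Python, not Fusion's API: SharedSession, Client and SelectedInfo are illustrative names. It assumes that each rig's state authority is the player who owns it, as is usual for a player's own rig in Shared Mode.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class SelectedInfo:
    attendee_id: str
    status: str


@dataclass
class Client:
    player_id: str
    statuses: Dict[str, str] = field(default_factory=dict)  # local replicas

    def on_selected_info_changed(self, info: SelectedInfo, session: "SharedSession") -> None:
        # Step (9): only the state authority of the attendee's rig writes its status.
        if info.attendee_id == self.player_id:
            session.write_status(self, info.attendee_id, info.status)


class SharedSession:
    """Toy replication: a write by the state authority is copied to every client."""

    def __init__(self, clients) -> None:
        self.clients = clients

    def write_status(self, writer: Client, attendee_id: str, status: str) -> None:
        if writer.player_id != attendee_id:
            raise PermissionError("no state authority on this rig")
        for c in self.clients:
            c.statuses[attendee_id] = status

    def set_selected_info(self, info: SelectedInfo) -> None:
        # Steps (7)-(8): the presenter changes the shared variable; all clients are notified.
        for c in self.clients:
            c.on_selected_info_changed(info, self)
```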

Finally, this status change updates the VoiceableAttendee AttendeeStatus on all clients; seeing that the spectator is now a voiced spectator, each client can (10):

- enable their voice for all users through the ZoneAudioInterestChanger (already used for the chat bubbles and for the presenter stage: the stage is just a special Zone). Its OnZoneChanged is triggered for the voiced attendee as if they had entered the stage Zone, hence enabling their voice.

- disable the LODGroup for this user in the AvatarRepresentation, so that they appear in full detail for everybody, like the presenter on stage.

Video synchronization

The video player is currently a simple Unity VideoPlayer, but any video library could be used.

From the stage console, the presenter can start the video, seek to a specific time, or pause it. To synchronize this for all users, the VideoControl NetworkBehaviour contains PlayState, a [Networked] struct holding the play state and position. Because it is a networked variable, when the presenter changes it through the UI, its change callback is triggered on all clients, allowing each of them to apply the change to their own player.
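On each client, the change callback only needs to map the synchronized state onto the local player. In this hypothetical Python sketch, PlayState mirrors the idea of the networked struct, LocalPlayer stands in for a video player such as Unity's VideoPlayer, and the drift tolerance (seek only when the local time is far off) is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlayState:
    """Hypothetical mirror of the networked struct: play state and position."""
    playing: bool
    position: float  # seconds


class LocalPlayer:
    """Stand-in for a local video player."""

    def __init__(self) -> None:
        self.time = 0.0
        self.is_playing = False


def on_play_state_changed(player: LocalPlayer, state: PlayState, tolerance: float = 0.5) -> None:
    # Seek only when the local player drifted more than `tolerance` seconds (assumption),
    # which avoids a visible jump on clients that are already in sync.
    if abs(player.time - state.position) > tolerance:
        player.time = state.position
    player.is_playing = state.playing
```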

Emoticons

In addition to the voice request, spectators can express themselves at any time by sending emoticons.

Since this feature is not critical for this use case, when a user clicks an emoticon UI button, we use an RPC to spawn the associated prefab. As a consequence, a user joining the room later will not see previously spawned emoticons (which vanish after a few seconds anyway).

Also, we consider that there is no need to synchronize the positions of the emoticons in space, so the emoticon prefabs are not networked objects.

The EmotesRequest class, located on the spectator UI, is responsible for asking the EmotesSpawner class to spawn an emoticon at the user's position.

Because sending an RPC requires a networked object, EmotesSpawner is located on the user's network rig.
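The consequences of the RPC-only design are that late joiners miss earlier emoticons and that each copy expires locally. Both can be shown with a toy room. This Python sketch is hypothetical: Room and EmoteClient are illustrative names, and the 3-second lifetime is an assumed value for "a few seconds".

```python
class EmoteClient:
    """Hypothetical client: emoticons are local, non-networked objects."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.emotes: list = []  # entries: (emote, position, expires_at)

    def on_spawn_rpc(self, emote: str, position: tuple, now: float, lifetime: float = 3.0) -> None:
        # Each client spawns its own local copy; nothing is networked afterwards.
        self.emotes.append((emote, position, now + lifetime))

    def update(self, now: float) -> None:
        self.emotes = [e for e in self.emotes if e[2] > now]


class Room:
    def __init__(self) -> None:
        self.clients: list = []

    def join(self, client: EmoteClient) -> None:
        self.clients.append(client)

    def rpc_spawn_emote(self, emote: str, position: tuple, now: float) -> None:
        # Only clients present when the RPC is sent receive it; no state is kept.
        for c in self.clients:
            c.on_spawn_rpc(emote, position, now)
```

This trade-off avoids spawning and despawning a networked object for every emoticon. The cost is a purely cosmetic inconsistency for users who join late.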

Third party components

- Oculus Integration

- Oculus Lipsync

- Oculus Sample Framework hands

- Ready Player Me

- Sounds

Changelog

Fusion Stage 0.0.18

- Fix error message display when a network issue occurs

Fusion Stage 0.0.17

- Update to Fusion SDK 1.1.3 F Build 599