Meta OVR hands synchronization

This addon shows how to synchronize the hand state of Meta's OVR hands (including finger tracking).

It can display hands either:

- from the data received from grabbed controllers

- from finger tracking data.

For finger tracking data, the add-on demonstrates how to heavily compress the hand bone data to reduce bandwidth consumption.

Meta XR SDK

Instead of the OpenXR plug-in used in other samples, the Oculus XR plug-in is used here.

It is the recommended plug-in when using the Meta XR Core SDK, which is required here to enable mixed reality passthrough and shared spatial anchors.

The Meta XR SDK has been added through their scoped registry https://npm.developer.oculus.com/ (see the Meta documentation for more details).

The main installed packages from Meta registries are:

- Meta XR Core SDK: required for mixed reality passthrough, and shared spatial anchors. Also provides finger tracking API and classes.

- Meta XR Platform SDK: to have access to the Oculus user id, used in the shared spatial anchor registration process (not required for this add-on, but necessary if the project uses shared spatial anchors, or Meta avatars, for instance).

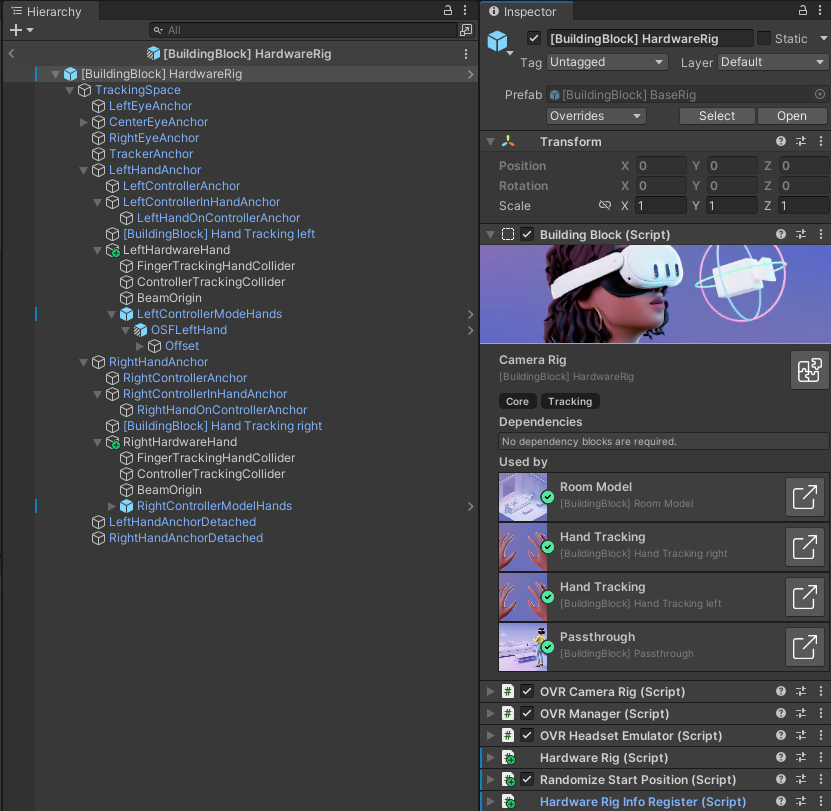

Oculus rig and building blocks

Because the add-on is based on the Oculus XR plug-in instead of the OpenXR plug-in, a specific rig has been created to capture the headset and hand positions, as well as details on the inputs and finger tracking.

This hardware collecting rig has been created through the Meta building blocks:

- the prefab resulting from this step is available in the /Prefabs/Rig/BaseBuildingBlocks/[BuildingBlock] BaseRig prefab.

- the prefab actually used in the add-on, with the synchronization components added to the previous one, is available in the /Prefabs/Rig/[BuildingBlock] HardwareRig prefab.

Then, the same components used in the VRShared sample to collect and synchronize the headset and hand positions are used (see HardwareRig and HardwareHand in the VR Shared page).

Finally, some specific components were added to handle the finger tracking and toggling between finger-tracking and controller-tracking hand representations, as described below.

Hand logic overview

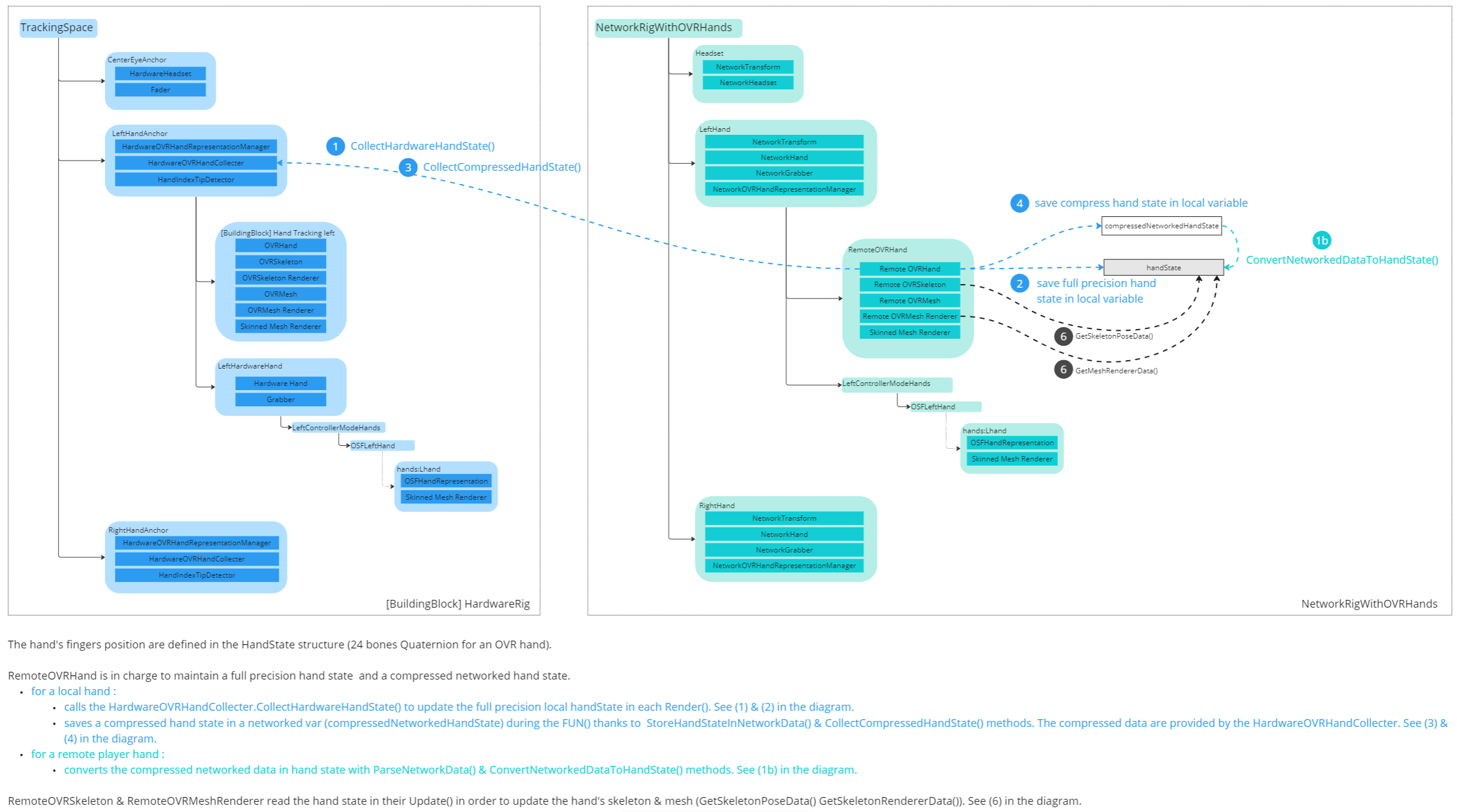

This add-on makes controller-based hand tracking and finger-based hand tracking coexist. The goal is to have a seamless transition from one to the other.

To do so:

- as in other VR samples, when the controller is used, the hand model displayed is the one from the Oculus Sample Framework.

- when finger tracking is used, for the local user, the

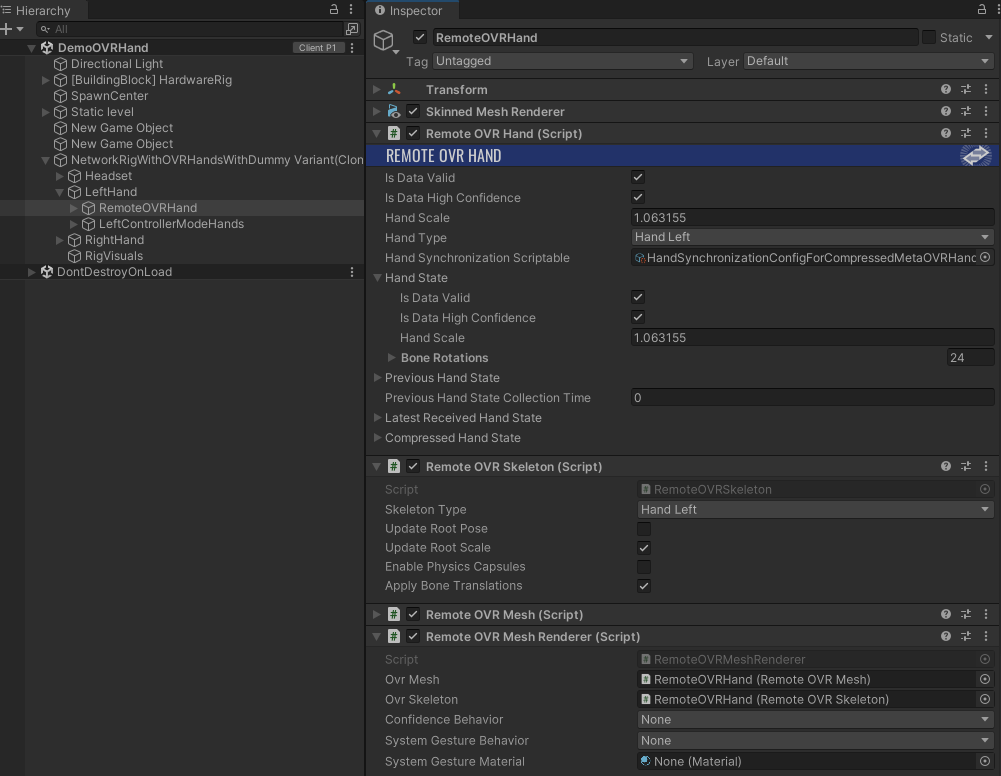

OVRHandcomponent of the Meta XR Core SDK collect the hand and finger bones data, theOVRSkeletonprepare the finger bones game object, and theOVRMeshandOVRMeshRendererprepare the skinned mesh renderer with those bones. All of this is available from the suggested building blocks setup. Alternatively, you can see it explained in details on Meta's Set Up Hand Tracking documentation page - for remote user, a

RemoteOVRHand(described below) recovers from the network the same hand and finger bones position data that theOVRSkeleton,OVRMeshandOVRMeshRendererexpect, to rebuild the hand in the same way - several of these hand states are stored upon reception in a ring buffer. During every

Render, we interpolate at a constant delay in the past between 2 of those states, to display smooth bones rotations, even between 2 ticks.

Note:

The OVRSkeleton, OVRMesh and OVRMeshRenderer classes currently can't be used reliably in the editor for the remote user, due to a specific debug issue, described here.

To bypass it, the project uses copies of them, RemoteOVRSkeleton, RemoteOVRMesh and RemoteOVRMeshRenderer, which simply remove the problematic editor-only line.

If a Meta package update makes these copies incompatible with the new code base, you can safely replace them with their original counterparts.

Local hardware rig hand components

The HardwareOVRHandCollecter component, placed on the hands of the local user's hardware rig, collects the hand state and provides it to the synchronization components.

It relies on Meta's OVRHand component to have access to the hand state (including notably the finger bones rotations).

It also includes helper properties to access the pinching state, or to know whether finger tracking is currently used.

Network rig hand components

The RemoteOVRHand component on the local user's network rig relies on the HardwareOVRHandCollecter to find the local hand state, and then stores it in network variables during FixedUpdateNetwork.

For remote users, this data is parsed to recreate a local hand state, which is then supplied to the RemoteOVRSkeleton, RemoteOVRMesh and RemoteOVRMeshRenderer components that handle the actual bone transforms and the hand mesh state.

The synchronized hand state notably includes IsDataValid, which is true if the user was using finger tracking, false otherwise, and the bones info.
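The write/read split described above can be sketched as follows. This is a language-neutral Python illustration, not the add-on's C# code: `HandState`, `HandCollector` and `NetworkHand` are hypothetical stand-ins, and the replication that the network library would perform is simulated by a plain assignment.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Quaternion = Tuple[float, float, float, float]  # (x, y, z, w)


@dataclass
class HandState:
    # True only while finger tracking is in use, mirroring IsDataValid.
    is_data_valid: bool = False
    bone_rotations: List[Quaternion] = field(default_factory=list)


class HandCollector:
    """Hypothetical stand-in for the hardware rig collector."""

    def __init__(self) -> None:
        self.current = HandState()


class NetworkHand:
    """Hypothetical stand-in for the networked hand component."""

    def __init__(self, collector: Optional[HandCollector]) -> None:
        self.collector = collector    # None on a remote user's hand
        self.networked = HandState()  # stand-in for the network variables
        self.displayed = HandState()  # state handed to the skeleton/mesh

    def fixed_update_network(self) -> None:
        # Local user only: copy the collected state into the network variables.
        if self.collector is not None:
            src = self.collector.current
            self.networked = HandState(src.is_data_valid, list(src.bone_rotations))

    def render(self) -> None:
        # All users: rebuild a local hand state from the network variables.
        src = self.networked
        self.displayed = HandState(src.is_data_valid, list(src.bone_rotations))
```

In this sketch, a remote hand is simply a `NetworkHand` created without a collector: it never writes the network variables and only reads them in `render`.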

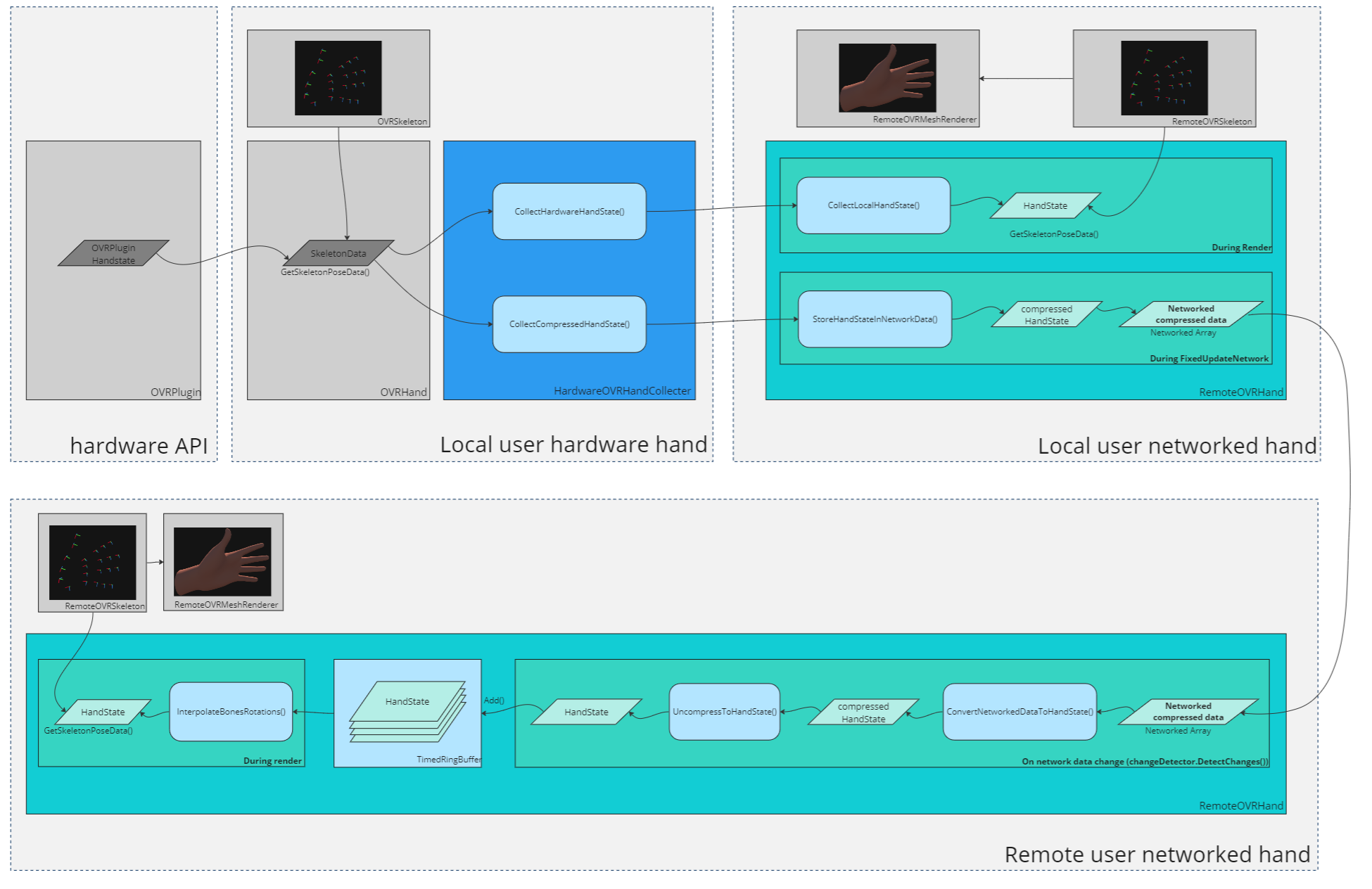

Bandwidth optimization

The bone information is quite expensive to transfer: the hand state includes 24 quaternions to synchronize. A simple approach, transferring all the quaternions, makes no attempt to reduce bandwidth usage but works, and it can be tested with the UncrompressedRemoteOVRHand alternative version of RemoteOVRHand.

The actual RemoteOVRHand implementation uses some properties of the hand bones (specific rotation axis, limited range of motion, ...) to reduce the required bandwidth. The level of precision desired for each bone can be specified in a dedicated HandSynchronizationScriptable scriptable (which has to be provided to both HardwareOVRHandCollecter and RemoteOVRHand).

The add-on includes a default one, HandSynchronizationConfigForCompressedMetaOVRHands, which has been built to match most needs:

- it offers very high compression, using about 20 times fewer bytes (a hand's full set of bone rotations is stored in 18 bytes instead of 386 bytes for a full quaternion transfer). A full quaternion transfer can be tested with the HandSynchronizationConfigForMetaOVRHands scriptable.

- the compression has no visible impact on the remote user's finger representation.
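To illustrate the general idea of exploiting a single rotation axis and a limited range, here is a minimal Python sketch that quantizes an angle into a few bits and packs several such codes into bytes. It is not the add-on's actual encoding: the function names, ranges and bit widths are hypothetical, and the real per-bone precision comes from the HandSynchronizationScriptable configuration.

```python
def quantize_angle(angle_deg, min_deg, max_deg, bits):
    """Map an angle, clamped to [min_deg, max_deg], to an integer code of `bits` bits."""
    levels = (1 << bits) - 1
    clamped = min(max(angle_deg, min_deg), max_deg)
    return round((clamped - min_deg) / (max_deg - min_deg) * levels)


def dequantize_angle(code, min_deg, max_deg, bits):
    """Recover an approximate angle from its integer code."""
    levels = (1 << bits) - 1
    return min_deg + code / levels * (max_deg - min_deg)


def pack_codes(codes, bits_per_code):
    """Pack integer codes into bytes, the first code in the lowest bits."""
    value = 0
    shift = 0
    for code, bits in zip(codes, bits_per_code):
        value |= (code & ((1 << bits) - 1)) << shift
        shift += bits
    return value.to_bytes((shift + 7) // 8, "little")


def unpack_codes(data, bits_per_code):
    """Inverse of pack_codes, given the same bit widths."""
    value = int.from_bytes(data, "little")
    codes = []
    for bits in bits_per_code:
        codes.append(value & ((1 << bits) - 1))
        value >>= bits
    return codes
```

With this scheme, a bone that only bends around one axis within a 90 degree range and is given 6 bits has a worst-case rounding error of about 0.7 degrees, and three bones using 4, 6 and 5 bits fit in 2 bytes. Spending fewer bits on bones whose motion is barely visible is what makes a large reduction possible.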

Interpolation

On the RemoteOVRHands, during each Render where a change of the network data is detected in ParseNetworkData(), the network data value is converted to a compressed hand state, and then to an actual hand state that can be used to animate the hand skeleton.

This hand state is then stored in a timed ring buffer (a ring buffer of hand states, where we also store the insertion times).

Then, during each Render, the hand state that is actually used to animate the bones is computed in InterpolateBonesRotations. It is interpolated between the 2 hand states stored in the ring buffer at a time around a specified delay in the past.

This way, even between tick receptions, the hand bones can rotate seamlessly between received rotation values.
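The timed ring buffer and delayed interpolation described above can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the code of InterpolateBonesRotations: `TimedRingBuffer`, `slerp`, the capacity and the clamping behaviour at the buffer edges are all hypothetical choices.

```python
import math
from collections import deque


def slerp(q0, q1, t):
    """Spherical interpolation between two unit quaternions (x, y, z, w)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:  # flip one quaternion to take the shortest path
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:  # nearly identical rotations: linear blend is enough
        out = tuple(a + t * (b - a) for a, b in zip(q0, q1))
    else:
        theta = math.acos(dot)
        s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
        s1 = math.sin(t * theta) / math.sin(theta)
        out = tuple(s0 * a + s1 * b for a, b in zip(q0, q1))
    norm = math.sqrt(sum(c * c for c in out))
    return tuple(c / norm for c in out)


class TimedRingBuffer:
    """Keeps the most recent hand states together with their insertion times."""

    def __init__(self, capacity=8):
        self.entries = deque(maxlen=capacity)  # oldest entry dropped when full

    def add(self, time, bones):
        self.entries.append((time, bones))

    def sample(self, target_time):
        """Return bone rotations interpolated at target_time, or None if empty."""
        if not self.entries:
            return None
        entries = list(self.entries)
        if target_time <= entries[0][0]:
            return entries[0][1]  # older than anything stored: clamp
        for (t0, b0), (t1, b1) in zip(entries, entries[1:]):
            if t0 <= target_time <= t1:
                alpha = 0.0 if t1 == t0 else (target_time - t0) / (t1 - t0)
                return [slerp(a, b, alpha) for a, b in zip(b0, b1)]
        return entries[-1][1]  # newer than anything stored: clamp
```

Each render would then call something like `buffer.sample(now - delay)`. Choosing a delay slightly larger than the interval between received states makes it likely that two states surround the sampled time, at the cost of displaying the hand that far in the past.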

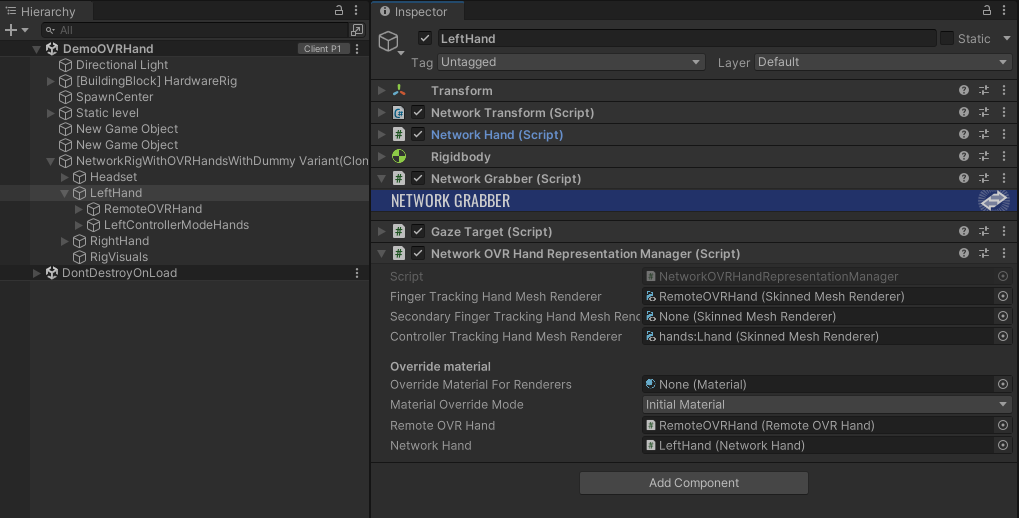

Toggling hand models

The OVRHandRepresentationManager component handles which mesh is displayed for a hand, based on which hand tracking mode is currently used:

- either the Oculus Sample Framework hand mesh when controller-tracking is used,

- or the mesh relying on the bones skeleton animated by an OVRSkeleton component logic when finger-tracking is used.

It also makes sure to apply hand colors based on the avatar skin color (by implementing the IAvatarRepresentationListener, provided by the Avatar add-on).

Two versions of this script exist: one for the local hardware hands (used to animate the hand skeleton, for collider localisation purposes, or if we need offline hands), and one for the network hands.

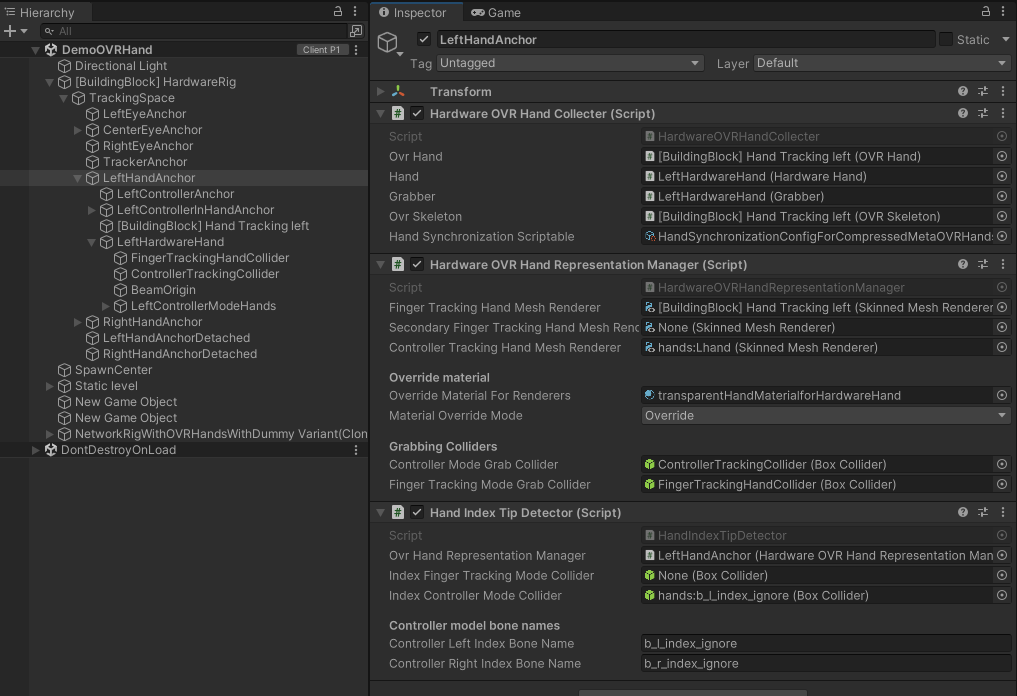

Local hardware rig hands

This version relies on the OVRHands placed on the hardware hands.

In addition to the other features, it also makes sure to switch between the 2 grabbing colliders: one located at the position of the palm when finger tracking is used, and one located at the position of the palm when controller-based tracking is used (the "center" of the hand is not exactly at the same position in those 2 modes).

Note:

In the current setup, it has been chosen to display the networked hands only: the hands in the hardware rig are only there to collect the bone positions and to properly animate the index collider position.

Hence, the material used for the hardware hand is transparent (this is required on Android for the controller hand, because the animation won't move the bones if the renderer is disabled).

The hardware hand mesh is automatically set to transparent thanks to the MaterialOverrideMode of HardwareOVRHandRepresentationManager being set to Override, with a transparent material provided in the overrideMaterialForRenderers field.

Network rig hands

The NetworkOVRHandRepresentationManager component handles which mesh is displayed based on the hand mode: either the Oculus Sample Framework hand mesh when controller tracking is used (it checks this in the RemoteOVRHand data), or the mesh relying on the OVRHand logic.
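The selection rule can be sketched as below, assuming the check is driven by the synchronized IsDataValid flag. This is an illustrative Python sketch, not the component's code: `HandRepresentation`, `select_representation` and `RepresentationSwitcher` are hypothetical names.

```python
from enum import Enum


class HandRepresentation(Enum):
    CONTROLLER_MESH = "controller"       # Oculus Sample Framework hand mesh
    FINGER_TRACKING_MESH = "finger"      # mesh driven by the bones skeleton


def select_representation(is_data_valid: bool) -> HandRepresentation:
    """Finger tracking data is valid only while finger tracking is in use."""
    if is_data_valid:
        return HandRepresentation.FINGER_TRACKING_MESH
    return HandRepresentation.CONTROLLER_MESH


class RepresentationSwitcher:
    """Tracks the active representation and counts actual mode changes."""

    def __init__(self) -> None:
        self.active = None
        self.switch_count = 0

    def update(self, is_data_valid: bool) -> HandRepresentation:
        wanted = select_representation(is_data_valid)
        if wanted is not self.active:
            # Only here would renderers be enabled or disabled.
            self.active = wanted
            self.switch_count += 1
        return self.active
```

Reacting only to changes keeps the per-frame cost negligible, since renderers are touched only when the user actually picks up or puts down a controller.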

Dependencies

- Avatar addon 2.0.2

- Meta XR Core SDK

Download

The latest version of this addon is included in the Industries addon project.

Supported topologies

- shared mode

Changelog

- Version 2.0.0: First release