Stage Screen Sharing

Overview

The Fusion Stage Screen Sharing sample demonstrates one approach to developing a social application for up to 200 players with Fusion.

Each player is represented by an avatar and can talk to other players located in the same chat bubble, thanks to the Photon Voice SDK.

One user can take the stage and start presenting content.

Users can also share their PC screen on the room's large virtual screen, if authorized by the presenter.

Some of the highlights of this sample are:

- First, players customize their avatar on the avatar selection screen.

- Then, they can join the Stage scene. If a player launches the sample on a PC or Mac, they can choose between Desktop mode (keyboard & mouse) and VR mode (Meta Quest headset).

- Players can talk to each other if they are located in the same static chat bubble. In each static chat bubble, a lock button is available to prevent new players from entering.

- One user can take the stage, and this way speak to every user in the room. This user can then increase the number of users allowed to join them on the stage.

- The presenter's voice is no longer spatialized, so everyone can hear them as if through speakers.

- There, it is possible to share content, like a video whose playback is synchronized among all users.

- A stage camera system follows the presenter to display them on a dedicated screen.

- Once seated, a user can ask to speak with the presenter on stage. If the presenter accepts, then everyone will hear the conversation between the presenter and the user until the presenter or the user decides to stop the conversation.

- Also, people in the audience can express their opinion on the presentation by sending emoticons.

- The tick rate is reduced when a player is seated in order to reduce bandwidth consumption and increase the number of players.

Technical Info

- This sample uses the Shared Mode topology,

- Builds are available for PC, Mac & Meta Quest,

- The project has been developed with Unity 2021.3, Fusion 2, Photon Voice 2.53,

- Two avatar solutions are supported (simple homemade avatars & Ready Player Me avatars).

Before you start

To run the sample:

- Create a Fusion AppId in the PhotonEngine Dashboard and paste it into the App Id Fusion field in Real Time Settings (reachable from the Fusion menu).

- Create a Voice AppId in the PhotonEngine Dashboard and paste it into the App Id Voice field in Real Time Settings.

- Then load the AvatarSelection scene and press Play.

Download

| Version | Release Date | Download |
|---|---|---|
| 2.0.5 | Oct 08, 2025 | Fusion Stage Screen Sharing 2.0.5 |

WebGL

The Stage Screen Sharing sample can be built for a WebGL target.

You can test the Stage WebGL build here.

However, due to some Unity limitations regarding WebGL builds, a few points need specific care to work properly and are detailed here.

Note that this WebGL build does not support WebXR (virtual reality in the browser): while it is achievable with some open source libraries, like unity-webxr-export, it is not yet supported by default in Unity, and thus not demonstrated here.

Please test with Google Chrome (you may encounter VP8 codec issues with Firefox).

Handling Input

Desktop

Keyboard

- Move : WASD or ZQSD to walk

- Rotate : QE or AE to rotate

- Menu : Esc to open or close the application menu

Mouse

- Move : left click with your mouse to display a pointer. You will teleport to any accepted target on release

- Rotate : keep the right mouse button pressed and move the mouse to rotate the point of view

- Move & rotate : keep both the left and right button pressed to move forward. You can still move the mouse to rotate

- Grab : put the mouse over the object and grab it using the left mouse button.

Meta Quest

- Teleport : press A, B, X, Y, or any stick to display a pointer. You will teleport to any accepted target on release

- Touch (e.g. for chat bubble lock buttons) : simply put your hand over a button to toggle it

Folder Structure

The main folder /Stage contains all elements specific to this sample.

The folder /IndustriesComponents contains components shared with other Industries samples, such as the Fusion Expo sample.

The /Photon folder contains the Fusion and Photon Voice SDKs.

The /Photon/FusionXRShared folder contains the rig and grabbing logic coming from the VR shared sample, creating a FusionXRShared light SDK that can be shared with other projects.

The /Photon/FusionAddons folder contains the Industries Addons used in this sample.

The /XR folder contains configuration files for virtual reality.

Architecture overview

This sample relies on the same code base as the one described in the VR Shared page, notably for the rig synchronization.

Aside from this base, the sample, like the other Industries samples, contains some extensions to the FusionXRShared or Industries Addons, to handle some reusable features like synchronized rays, locomotion validation, touching, teleportation smoothing or a gazing system.

This sample also relies on the Photon Video SDK, which is an addition to the Photon Voice SDK.

In addition to the normal client launched by users who want to join the room, users who want to share their screen have to launch a dedicated Windows application, the "Recorder client", which controls which screen they want to share and requests the authorization to display it.

The screen capture is based on uWindowCapture, which handles the capture of the desktop image and applies it to a texture.

Then, the Photon Video SDK handles this video stream through the VoiceConnection (for now, we still use the term "Voice" in the sense of "Stream").

Scene logic

We have to use the same Unity scenes for the normal client (attendee) and for the recorder client (to stream a desktop on the stage screen).

But some modifications are required depending on the client mode we want to use.

To do so, in the StageWithScreenSharing scene, the ExtendedRigSelection contains a specific rig configuration named Recorder to configure the application as the recorder client :

- replace the default user prefab with a specific emitter prefab,

- disable the ambient sound,

- enable objects required for the recording (specific rig, UI, emitter),

- register the application in the attendee registry.

Stage

The ChatBubble addon is used to manage the stage.

By default, the stage chat bubble has a capacity limited to 1 user, which prevents anyone else from coming onto the stage while a presenter is already there. The presenter can change this value with the stage console.

To make sure that users on stage are displayed properly for everyone, the LODGroup is also disabled for any user entering the stage, which guarantees that no LOD will trigger for them (see the PublicSpeechHandler & StageCameraManager classes).

Voice

Because the presenter(s) are in the stage chat bubble but the other participants are not, we use the white list feature of the DynamicAudioGroup addon (version 2.0.2) to allow all participants to always listen to the presenter(s).

Also, it removes the audio source spatialization, so that the voice of this user is audible from every corner of the room.

Presenter camera

A presenter camera follows the user on the stage. To do so, the StageCameraManager component implements the IAudioRoomListener interface to maintain a list of users on stage.

It triggers the camera tracking and recording when a user is on the stage.

The StageCameraManager can handle several cameras, and triggers the tracking movement following the presenter for each one, while enabling the actual rendering only for the most relevant one.

Those cameras render fewer frames per second; this is handled in the StageCamera class, along with the camera movements.

Cameras are placed relative to a camera rig Transform, and move along the x axis of this transform.
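As a rough illustration of the last two points, the sketch below keeps a camera on the rig's local x axis while following a target, and limits how often that camera renders. It is an engine-free model built on System.Numerics, not the sample's StageCamera code: `StageCameraMath`, `RenderLimiter`, `minX`, `maxX` and `rendersPerSecond` are hypothetical names chosen for this example.

```csharp
using System;
using System.Numerics;

// Hypothetical helper: not part of the sample's StageCamera class.
public static class StageCameraMath
{
    // Projects the presenter position onto the rig's local x axis and clamps the
    // result between minX and maxX (assumed travel limits along that axis).
    public static Vector3 FollowAlongRigX(Vector3 rigPosition, Quaternion rigRotation,
                                          Vector3 presenterPosition, float minX, float maxX)
    {
        Vector3 rigXAxis = Vector3.Transform(Vector3.UnitX, rigRotation);
        float x = Vector3.Dot(presenterPosition - rigPosition, rigXAxis);
        x = Math.Clamp(x, minX, maxX);
        return rigPosition + rigXAxis * x;
    }
}

// Hypothetical helper: decides when a reduced-frame-rate camera should render.
public sealed class RenderLimiter
{
    private readonly double interval;
    private double nextRenderTime;

    public RenderLimiter(double rendersPerSecond) => interval = 1.0 / rendersPerSecond;

    // Returns true only once the configured interval has elapsed since the last render.
    public bool ShouldRender(double now)
    {
        if (now < nextRenderTime) return false;
        nextRenderTime = now + interval;
        return true;
    }
}
```

In a Unity project, the same idea would typically be driven from an update loop, with the returned position applied to the camera and the limiter gating a manual render call.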

Seat & bandwidth reduction

Because it's not necessary to update a player's position when they're seated, we've added a feature to reduce the refresh rate when a user is seated.

It returns to the normal frequency when they leave their seat. Doing this reduces bandwidth consumption and makes it possible to increase the number of users in the application.

The NetworkTransformRefreshThrottler component, added on the head & hands of the player's networked rig, is in charge of reducing the emission rate of specific objects. To do so, when throttling is activated, it keeps setting the same previous position/rotation on the NetworkTransform for some duration on the state authority.

As a result, the tick data on the proxies remains the same for some duration, preventing Fusion's tick-based interpolation from behaving properly.

To handle this, the throttler (when throttling is activated) uses a time-based interpolation on proxies, relying no longer on the tick states but on the time at which the latest changes were received.

Note that the state authority itself will have its tick-based interpolation data corrupted by this approach. However, the throttler does not handle this, assuming that the state authority already has some sort of extrapolation in place (forcing the position during Render).

It is the case here in the Stage sample, through the NetworkRig class, that applies this extrapolation.
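The two sides of this mechanism can be modeled outside of Fusion. The sketch below is a simplified, engine-free illustration, not the actual NetworkTransformRefreshThrottler: `ThrottledEmitter`, `TimeBasedInterpolator`, `minInterval` and the single float standing in for a position are all assumptions made for the example.

```csharp
using System;

// Hypothetical model of the state authority side: while throttled, the previously
// emitted value is repeated until minInterval seconds have elapsed.
public sealed class ThrottledEmitter
{
    private readonly double minInterval;
    private double lastEmitTime = double.NegativeInfinity;
    private float lastEmitted;

    public bool IsThrottled { get; set; }

    public ThrottledEmitter(double minInterval) => this.minInterval = minInterval;

    // Called every tick: returns the value actually written to the replicated state.
    public float Emit(float current, double now)
    {
        if (!IsThrottled || now - lastEmitTime >= minInterval)
        {
            lastEmitted = current;
            lastEmitTime = now;
        }
        return lastEmitted;
    }
}

// Hypothetical model of the proxy side: interpolation is driven by the time at
// which a *changed* value was received, ignoring repeated tick states.
public sealed class TimeBasedInterpolator
{
    private float start, target;
    private double changeTime, duration;
    private bool hasValue;

    public void OnStateReceived(float value, double now)
    {
        if (!hasValue)
        {
            start = target = value;
            changeTime = now;
            hasValue = true;
            return;
        }
        if (value == target) return;      // repeated value: nothing new to interpolate to
        float displayed = Evaluate(now);  // restart from what is currently displayed
        duration = now - changeTime;      // assumption: the next change arrives after a similar delay
        start = displayed;
        target = value;
        changeTime = now;
    }

    public float Evaluate(double now)
    {
        if (!hasValue || duration <= 0.0) return target;
        double t = Math.Clamp((now - changeTime) / duration, 0.0, 1.0);
        return (float)(start + (target - start) * t);
    }
}
```

Using the previous interval as the interpolation duration is one simple choice among several; a fixed duration matching the throttling period would work just as well in this model.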

Here, the throttling is activated in the StageNetworkRig.

The HardwareRig subclass StageHardwareRig can receive seating requests (triggered by the SeatDetector class, which observes when the user teleports onto a seat). Then, the StageNetworkRig updates the local SeatStatus and activates the throttling if the user is seated.

C#

    void LocalNetworkRigSeatStatusUpdate()
    {
        // Copy the local hardware rig's seat status to the networked SeatStatus when it differs
        if (IsLocalNetworkRig && stageHardwareRig && SeatStatus.Equals(stageHardwareRig.seatStatus) == false)
        {
            SeatStatus = stageHardwareRig.seatStatus;
        }
    }

    public override void FixedUpdateNetwork()
    {
        base.FixedUpdateNetwork();
        LocalNetworkRigSeatStatusUpdate();
        if (Object.HasStateAuthority)
        {
            // Throttle the rig, hands and headset updates while the user is seated
            rigThrottler.IsThrottled = SeatStatus.seated;
            leftHandThrottler.IsThrottled = SeatStatus.seated;
            rightHandThrottler.IsThrottled = SeatStatus.seated;
            headsetThrottler.IsThrottled = SeatStatus.seated;
        }
    }

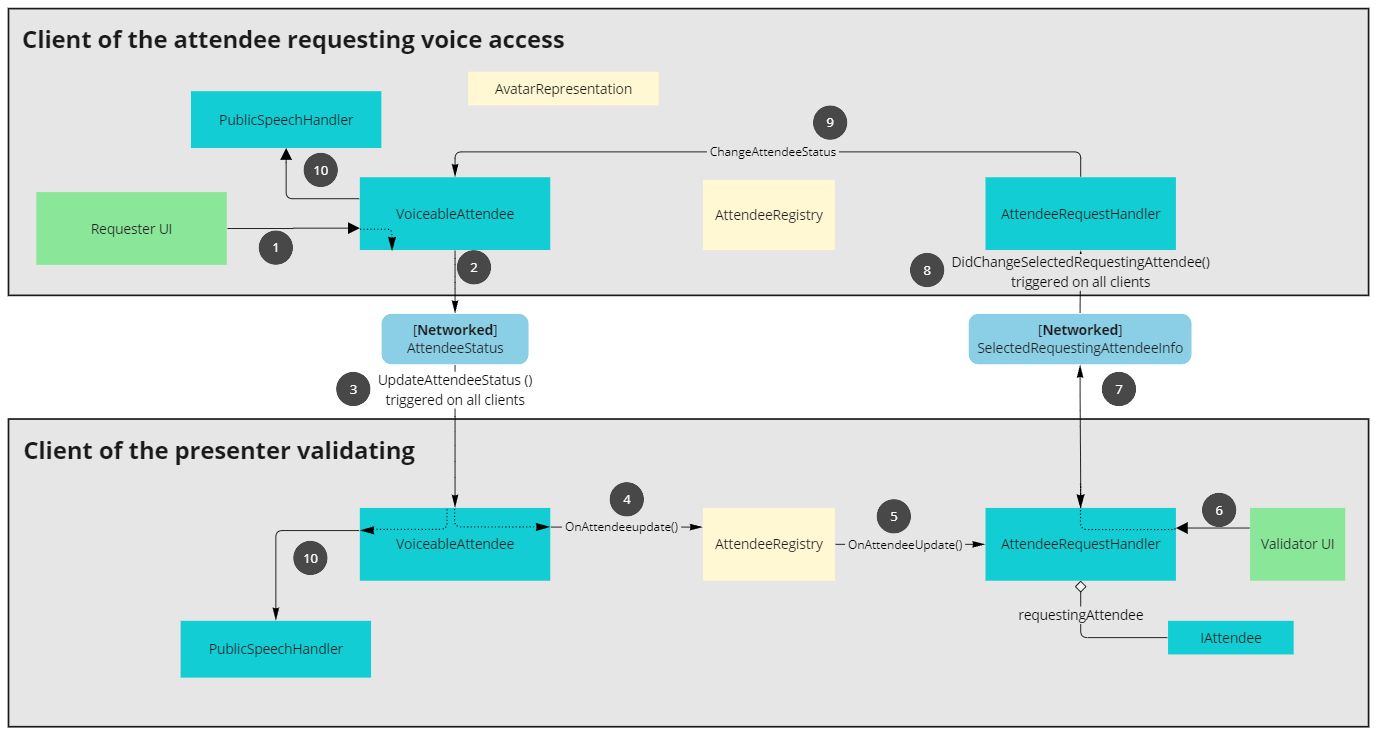

Attendee stage voice access

The conference attendees can request to have access to the stage microphone. The presenter on the stage can allow them, or reject their request.

The networked rig of a user contains a VoiceableAttendee component, which hosts a [Networked] variable AttendeeStatus. This status describes:

- if no request has been made yet,

- if the spectator is requesting voice access to the stage,

- if it has been accepted,

- if it has been accepted but they are momentarily muted,

- or if the request has been rejected,

When the user requests voice access with their UI, (1) in the above diagram, their status is updated (2), and the networked variable syncs it to all users (3).

On all clients, this triggers a callback (4) that notifies an AttendeeRegistry component, which stores all the attendees and broadcasts this change to all its listeners (5).

This way, the AttendeeRequestHandler, associated with the desk on the stage, can store a list of requesting attendees and update its UI accordingly.

A [Networked] var, SelectedRequestingAttendeeInfo, holds the info about the attendee selected in the UI, so that the UI is synchronized on every client.

When the presenter validates a voice request (6), the attendeeStatus info of SelectedRequestingAttendeeInfo is changed.

It is not possible to directly change the AttendeeStatus of the VoiceableAttendee, as the presenter is probably not the requester, and so has no state authority over the rig's networked object.

But since SelectedRequestingAttendeeInfo is a [Networked] var, changing it locally for the presenter (7) triggers an update on all clients (8).

During this update, each client checks if it is the state authority of the VoiceableAttendee networked object, and if it is, changes the attendee status (9).

Finally, changing this status triggers an update of the VoiceableAttendee AttendeeStatus on all clients. Seeing that the spectator is now a voiced spectator, each client can (10):

- add this user to the white list of DynamicAudioGroupMember so that they are always listened to (class PublicSpeechHandler),

- turn off the audio spatial blend to make sure everyone can hear them regardless of distance,

- disable the LODGroup for this user in the AvatarRepresentation, so that they appear in max detail for everybody, like the presenter on stage.
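The indirection in steps (6) to (9) can be sketched without Fusion as follows. This is a simplified model under stated assumptions: the `AttendeeStatus` value names, `AttendeeState`, `SelectedAttendeeInfo`, `VoiceRequestFlow` and the integer client ids are hypothetical stand-ins, and the "only the state authority may write" rule is enforced by hand here rather than by the network library.

```csharp
using System.Collections.Generic;

// Hypothetical value names: the sample's actual enum members may differ.
public enum AttendeeStatus { NoRequest, VoiceRequested, VoiceAccepted, VoiceAcceptedMuted, VoiceRejected }

// Stand-in for a networked object: only its state authority may change its status.
public sealed class AttendeeState
{
    public int StateAuthority { get; }
    public AttendeeStatus Status { get; private set; } = AttendeeStatus.NoRequest;

    public AttendeeState(int stateAuthority) => StateAuthority = stateAuthority;

    public bool TrySetStatus(int writerId, AttendeeStatus status)
    {
        if (writerId != StateAuthority) return false; // writes from other clients are refused
        Status = status;
        return true;
    }
}

// Stand-in for the value replicated from the presenter's desk.
public readonly struct SelectedAttendeeInfo
{
    public int AttendeeId { get; }
    public AttendeeStatus RequestedStatus { get; }

    public SelectedAttendeeInfo(int attendeeId, AttendeeStatus requestedStatus)
    {
        AttendeeId = attendeeId;
        RequestedStatus = requestedStatus;
    }
}

public static class VoiceRequestFlow
{
    // Runs on every client when the replicated selection changes (steps 8 and 9):
    // only the client owning the attendee actually applies the new status.
    public static bool OnSelectionChanged(int localClientId, SelectedAttendeeInfo info,
                                          IReadOnlyDictionary<int, AttendeeState> attendees)
    {
        if (!attendees.TryGetValue(info.AttendeeId, out AttendeeState attendee)) return false;
        return attendee.TrySetStatus(localClientId, info.RequestedStatus);
    }
}
```

In this model, the presenter's client running `OnSelectionChanged` has no effect on the attendee, while the same call on the attendee's own client applies the accepted status.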

Video synchronization

The video player is currently a simple Unity VideoPlayer, but any video library could be used.

The presenter on stage can start the video, go to a specific time, or pause it from the stage's console. To synchronize this for all users, the VideoControl NetworkBehaviour contains a PlayState [Networked] struct, holding the play state and position. Because it is a networked var, when the presenter changes it through the UI, the change callback is also triggered on all clients, allowing them to apply the change to their own player.
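The pattern described here, one replicated value whose change callback drives every local player, can be sketched in plain C#. This is a minimal model and not the sample's VideoControl code: `ReplicatedPlayState` stands in for the networked property and its change callback, `LocalVideoPlayer` stands in for a Unity VideoPlayer, and the `PlayState` fields shown are assumptions.

```csharp
using System;

// Assumed shape of the replicated struct: a play flag and a position in seconds.
public readonly struct PlayState : IEquatable<PlayState>
{
    public bool IsPlaying { get; }
    public double PositionSeconds { get; }

    public PlayState(bool isPlaying, double positionSeconds)
    {
        IsPlaying = isPlaying;
        PositionSeconds = positionSeconds;
    }

    public bool Equals(PlayState other) =>
        IsPlaying == other.IsPlaying && PositionSeconds.Equals(other.PositionSeconds);
    public override bool Equals(object obj) => obj is PlayState other && Equals(other);
    public override int GetHashCode() => HashCode.Combine(IsPlaying, PositionSeconds);
}

// Hypothetical stand-in for a networked property with a change callback.
public sealed class ReplicatedPlayState
{
    private PlayState current;
    public event Action<PlayState> Changed;

    public PlayState Value
    {
        get => current;
        set
        {
            if (current.Equals(value)) return; // no change, no callback
            current = value;
            Changed?.Invoke(value);
        }
    }
}

// Hypothetical stand-in for each client's own video player.
public sealed class LocalVideoPlayer
{
    public bool IsPlaying { get; private set; }
    public double PositionSeconds { get; private set; }

    public void Apply(PlayState state)
    {
        PositionSeconds = state.PositionSeconds;
        IsPlaying = state.IsPlaying;
    }
}
```

Subscribing each `LocalVideoPlayer.Apply` to `Changed` and then assigning `Value` once from the presenter's side updates every player, which mirrors the flow described above.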

Emoticons

In addition to the voice request, spectators can express themselves at any time by sending emoticons.

Since the feature is not critical for this use case, when a user clicks on an emoticon UI button, we use an RPC to spawn the associated prefab. This means that a new user entering the room will not see the previously generated emoticons (emoticons vanish after a few seconds anyway).

Also, we consider that there is no need to synchronize the positions of the emoticons in space, so the emoticon prefabs are not networked objects.

The EmotesRequest class, located on the spectator UI, is responsible for requesting the EmotesSpawner class to spawn an emoticon at the user's position.

Because a networked object is required, EmotesSpawner is located on the user's network rig.

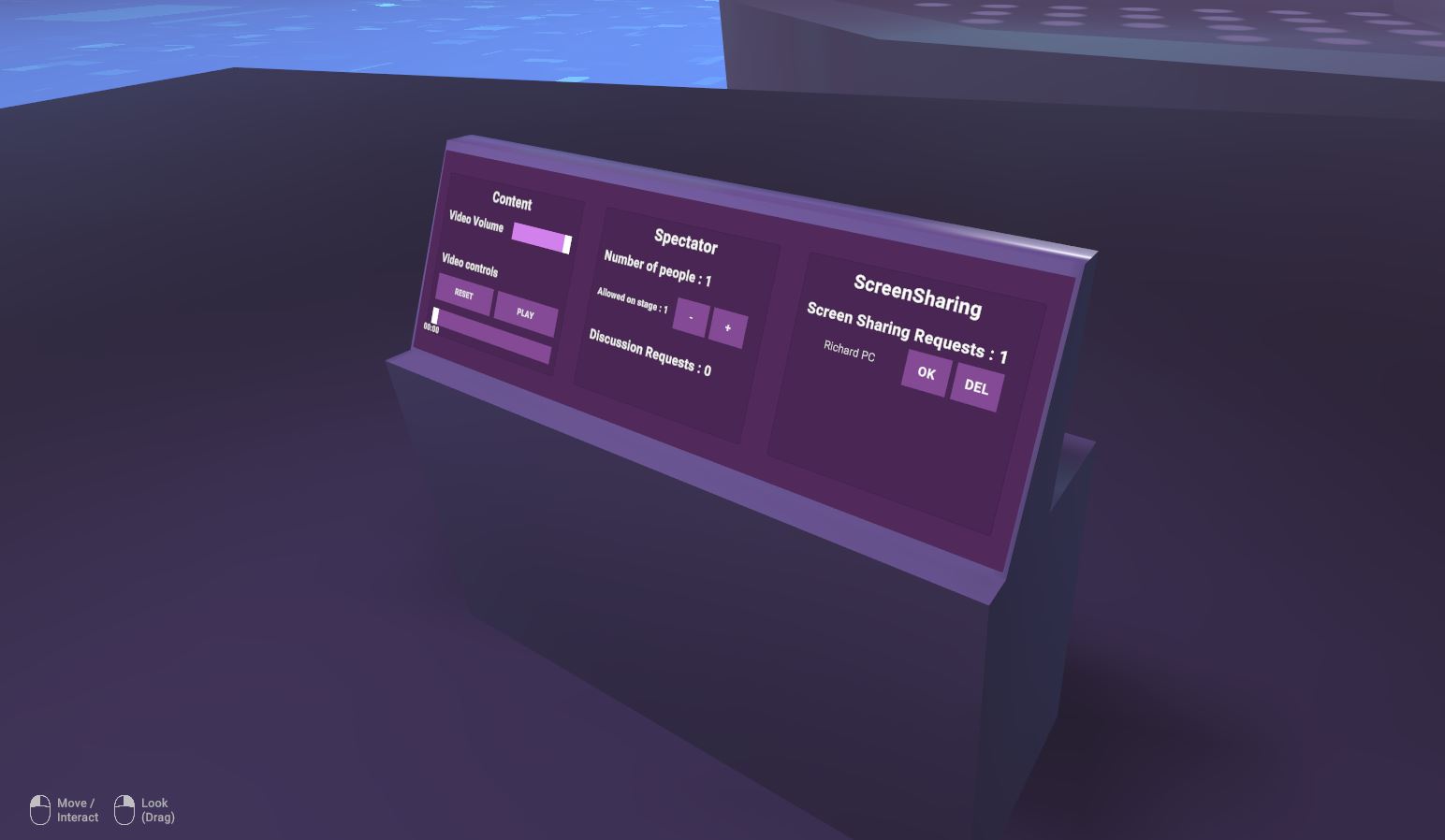

Screen sharing

To request the authorization to stream on the main screen, an authorization system similar to the voice request system is used: as a ScreenSharingAttendee, you can request screen sharing access, and the control screen on the stage offers an EmissionOrchestratorWithAuthorizationManagement to validate those requests.

Screen sharing is captured with uWindowCapture and sent to other clients through the Photon Video SDK.

See Screen Sharing Industries Addons for more details.

Screen Sharing Request Management

The way a user is allowed to share their desktop screen on stage is similar to what was done to allow attendees to talk with the presenter.

The StageNetworkRigRecorder prefab is spawned when the recorder application joins the session.

This prefab contains the ScreenShareAttendee class, which hosts a [Networked] variable ScreenShareStatus.

C#

    [Networked]
    public ScreenShareStatus ScreenShareStatus { get; set; }

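This status describes:

- if a screen sharing request has not yet been made,

- if a request has been canceled on the recorder UI,

- if a screen sharing request has been made and is waiting for an answer,

- if the request has been accepted and screen sharing is in progress,

- if it has been stopped,

- or if the request has been rejected.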

C#

    public enum ScreenShareStatus
    {
        NoScreenShareRequested, // no request yet
        ScreenShareCanceled,    // request canceled on the recorder UI
        ScreenShareRequested,   // request done, waiting for answer
        ScreenShareInProgress,  // screensharing in progress
        ScreenShareStopped,     // screensharing stopped (by the speaker using the desk, or using the recorder UI)
        ScreenShareRejected     // screensharing rejected by the speaker
    }

The EmissionOrchestratorWithAuthorizationManagement, associated with the desk on the stage, can store a list of users requesting screen sharing and update its UI accordingly.

Please, see the IScreenShare interface for more details.

The first screen sharing request is displayed on the control desk.
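The bookkeeping described above (keeping a list of requesters and showing the first one on the desk) could look like the following engine-free sketch. It is not the EmissionOrchestratorWithAuthorizationManagement implementation: `ScreenShareRequestList`, `OnStatusChanged`, `DisplayedRequest` and the integer recorder ids are hypothetical, and the enum is repeated only to keep the example self-contained.

```csharp
using System.Collections.Generic;

public enum ScreenShareStatus
{
    NoScreenShareRequested,
    ScreenShareCanceled,
    ScreenShareRequested,
    ScreenShareInProgress,
    ScreenShareStopped,
    ScreenShareRejected
}

// Hypothetical model of the desk-side list of pending screen sharing requests.
public sealed class ScreenShareRequestList
{
    private readonly List<int> requesters = new List<int>();

    // Assumed hook: called whenever a recorder's ScreenShareStatus changes.
    public void OnStatusChanged(int recorderId, ScreenShareStatus status)
    {
        if (status == ScreenShareStatus.ScreenShareRequested)
        {
            // Keep requests in arrival order, without duplicates
            if (!requesters.Contains(recorderId)) requesters.Add(recorderId);
        }
        else
        {
            // Any other status means the request is no longer pending
            requesters.Remove(recorderId);
        }
    }

    // The request shown on the control desk: the oldest pending one, if any.
    public int? DisplayedRequest => requesters.Count > 0 ? requesters[0] : (int?)null;

    public int PendingCount => requesters.Count;
}
```

With two recorders requesting in turn, the first one is displayed; once it is canceled, accepted or rejected, the next pending request takes its place.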

When the ScreenShareStatus changes (for example when the presenter validates a screen sharing request), it is broadcast over the network so that the client owning the screen sharing can change its status.

Thus, when the screen sharing requester receives the authorization, it starts to stream the selected desktop.

C#

    if (ScreenShareStatus == ScreenShareStatus.ScreenShareInProgress)
    {
        Debug.Log("Starting Screen Sharing !");
        screenSharingEmitter.ConnectScreenSharing();
    }

The EmitterMenuWithAuthorizationManagement class is in charge of displaying the screen sharing buttons and updating the text fields according to the user's interaction with the menu or to the ScreenShareStatus.

Compilation

We use the same Unity scenes for the normal client (attendee) and for the recorder client (to stream a desktop on the stage screen).

So, we have to make some manual modifications depending on the client we want to compile.

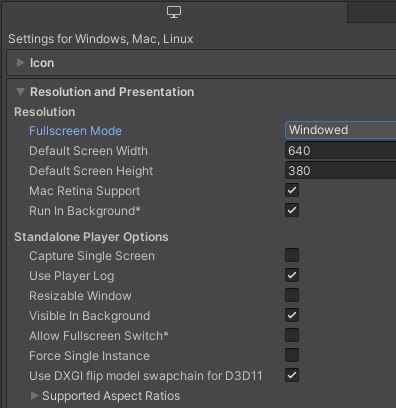

Recorder Client Compilation

To compile the recorder application, follow these 2 steps:

1/ Open the StageAvatarSelection scene : on the LoadMainSceneInRecorderMode game object, check the box Is Recorder Compilation Mode to load the main scene directly without loading the avatar selection scene.

This trick is required because the scene list must be identical for the normal client and for the recorder.

2/ Open the StageWithScreenSharing scene : on the ExtendedRigSelection game object & class, set to Force to use Recorder.

Please note that the ScreenShareAppWindowsSettings class on the recorder rig is in charge of changing the resolution.

You can also change the Unity parameters manually:

Project Settings/Player:

- Change Product name: add 'Recorder' for example

- Change Project Settings/Player/Resolution and Presentation/Resolution:

  - Fullscreen Mode : Windowed

  - Default Screen Width : 640

  - Default Screen Height : 380

  - Resizable : No

  - Allow Fullscreen : No

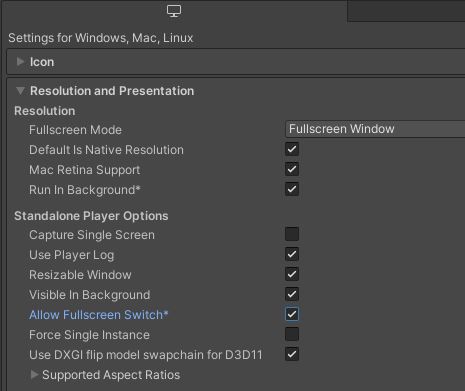

Normal Client Compilation

To compile the normal client application, follow these 3 steps:

1/ Open the StageAvatarSelection scene : on the LoadMainSceneInRecorderMode game object, uncheck the box Is Recorder Compilation Mode.

2/ Open the StageWithScreenSharing scene : on the RigSelection game object, RecorderAppRigSelection class, set the App Kind parameter to Normal.

RecorderAppRigSelection is in charge of configuring the scene according to the normal/recorder selection.

3/ Check some Unity parameters:

Project Settings/Player:

- Change Product name: add 'Client' for example

- Change Project Settings/Player/Resolution and Presentation/Resolution:

  - Fullscreen Mode : Fullscreen Window

  - Resizable : Yes

  - Allow Fullscreen : Yes

Used XR Addons & Industries Addons

To make it easy for everyone to get started with their 3D/XR project prototyping, we provide a comprehensive list of reusable addons.

See Industries Addons for more details.

Here are the addons we've used in this sample.

XRShared

The XRShared addon provides the base components to create an XR experience compatible with Fusion.

It is in charge of the players' rig parts synchronization, and provides simple features such as grabbing and teleport.

See XRShared for more details.

Spaces

We reused the same approach as the one used in the Meeting samples to allow several instances of the same room.

So, users can join the public room or a private meeting room by specifying a room number.

This choice can be made in the avatar selection screen or later with the application menu.

See Space Industries addons for more details.

Connection Manager

We use the ConnectionManager addon to manage the connection launch and spawn the user representation.

See ConnectionManager Addons for more details.

Extended Rig Selection

We use this addon to switch between the three rigs required in this sample :

- VR rig for Meta build,

- Desktop rig for Windows and Mac client,

- Recorder rig to build the Windows recorder application in charge of streaming the desktop on the screen

See Extended Rig Selection Industries Addons for more details.

Avatar

This addon supports avatar functionality, including a set of simple avatars.

See Avatar Industries Addons for more details.

Ready Player Me Avatar

This addon handles the Ready Player Me avatars integration.

See Ready Player Me Avatar Industries Addons for more details.

Social distancing

To ensure comfort and proxemic distance, we use the social distancing addon.

See Social distancing Industries Addons for more details.

Locomotion validation

We use the locomotion validation addon to limit the player's movements (stay in defined scenes limits).

See Locomotion validation Industries Addons for more details.

Dynamic Audio group

We use the dynamic audio group addon to enable users to chat together, while taking into account the distance between users to optimize comfort and bandwidth consumption.

We also use the whitelist feature to allow participants to listen to the presenter while not being in the same audio room.

See Dynamic Audio group Industries Addons for more details.

Audio Room

The AudioRoom addon is used to soundproof chat bubbles.

See Audio Room Industries addons for more details.

Chat Bubble

This addon provides the static chat bubbles included in the Expo scenes.

See Chat Bubble Industries Addons for more details.

Virtual Keyboard

A virtual keyboard is required to customize the player name or room ID.

See Virtual Keyboard Industries Addons for more details.

Touch Hover

This addon is used to increase player interactions with 3D objects or UI elements. Interactions can be performed with virtual hands or a ray beamer.

See Touch Hover Industries Addons for more details.

Feedback

We use the Feedback addon to centralize the sounds used in the application and to manage haptic & audio feedback.

See Feedback Addons for more details.

Screen Sharing

Users can share their computer screen and broadcast it on the stage's large screen if allowed by the presenter.

See Screen Sharing Industries Addons for more details.

Third party components

- Oculus Integration

- Oculus Lipsync

- Oculus Sample Framework hands

- Ready player me

- Sounds

Known Issues

- Quest : if the screen sharing has been initiated before the user logs in, then the display of the screen share may be long or may fail.
