Anchors

This addon detects visual markers such as QR codes or ArUco codes and offers two use cases: colocalization and object repositioning.

It can operate with a single marker type (QR code or ArUco) or both simultaneously.

Marker detection & representation

Supported markers

QR Code with MRUK

QR code detection is based on the Meta MR Utility Kit (MRUK), which now supports trackables of the QR code type.

If QR code detection doesn't work in a compiled application, especially after a headset reboot, try enabling the experimental mode with the following adb command: adb shell setprop debug.oculus.experimentalEnabled 1

ArUco with OpenCV

Using ArUco markers requires the OpenCV for Unity asset, available on the Unity Asset Store.

We do not distribute OpenCV for Unity with the project. You must purchase it yourself and add it to the project. Once installed, it is automatically detected, enabling ArUco marker support.

Marker detection logic

In this addon, marker usage is a three-step process:

- Marker detection: the collection of raw marker information from the hardware. It can rely on image analysis, on information provided directly by the operating system (such as MRUKTrackable detection in Horizon OS), or even on a manual in-app declaration of common points.

- Marker position stabilization: raw marker detection can be more or less stable. To use it with confidence, an additional layer extracts short-term and long-term stabilized positions from the raw positions.

- Marker usage: finally, this marker positioning information can be used for actual features.

AnchorTag

The AnchorTag is the class representing a point detected in space: it can be used to track marker detection results, or to visualize a stabilized version of those detected positions.

When used for detection results, the class is responsible for telling whether the anchor is still detected, through the IsDetected property. The default implementation considers that the anchor is detected if its game object is active, but if a Meta MRUKTrackable is present on the game object, it relies on that component's IsTracked property instead.
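The detection rule above can be sketched as follows. This is a minimal illustration, not the add-on's actual code: ITrackableSource, FixedTrackable and AnchorTagSketch are hypothetical stand-ins (for Meta's MRUKTrackable and for AnchorTag), and IsActive replaces the game object's active state so the sketch compiles outside Unity.

```csharp
using System;

// Hypothetical stand-in for Meta's MRUKTrackable: only the tracking flag matters here.
public interface ITrackableSource
{
    bool IsTracked { get; }
}

// Hypothetical trackable with a settable state, used to exercise the sketch.
public class FixedTrackable : ITrackableSource
{
    public bool IsTracked { get; set; }
}

// Hypothetical stand-in for the detection part of AnchorTag.
public class AnchorTagSketch
{
    // Plays the role of the game object's active state in a real Unity component.
    public bool IsActive { get; set; }

    // Optional trackable found on the same game object, or null if there is none.
    public ITrackableSource Trackable { get; set; }

    // If a trackable is present, trust its tracking state;
    // otherwise fall back to the active state.
    public bool IsDetected => Trackable != null ? Trackable.IsTracked : IsActive;
}
```

With this shape, an active tag without a trackable counts as detected, while an active tag whose trackable has lost tracking does not.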

The class also handles the visual aspect of the anchors.

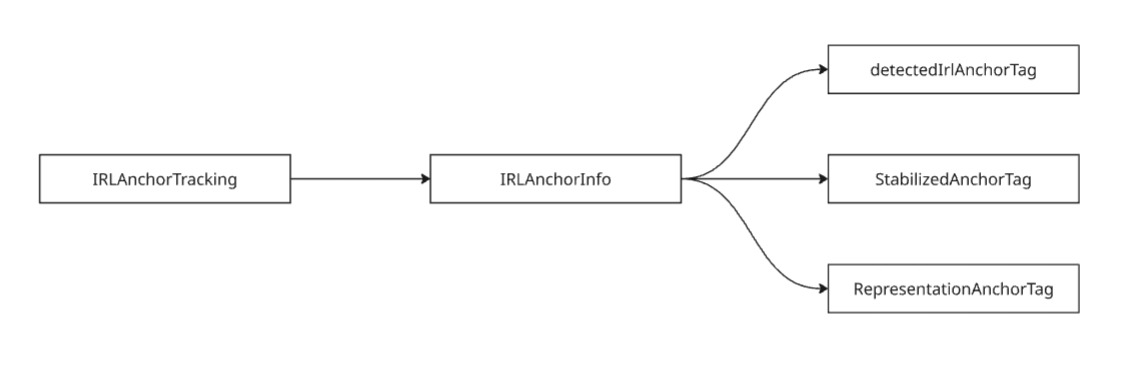

IRLAnchorInfo

The information related to a marker is grouped in the IRLAnchorInfo class.

It notably stores:

- its last detection status: wasDetectedThisFrame, lastValidPosition, lastValidRotation,

- the stabilized pose to use and its stability status: stablePose, isPoseStable,

- the duration of this stability relative to an expected long stability duration (expectedDetectedAnchorsStabilityDuration): hasLongStability, longStabilityProgress.
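As an illustration of how the long stability fields could relate to each other, here is a small sketch. It assumes that longStabilityProgress is the clamped ratio between the time the pose has been stable and expectedDetectedAnchorsStabilityDuration; the source does not give the exact formula, and LongStabilitySketch and its method names are hypothetical.

```csharp
using System;

public static class LongStabilitySketch
{
    // Fraction (0..1) of the expected long stability duration already covered.
    // Assumption: progress grows linearly with the time spent in a stable state.
    public static float Progress(float stableDuration, float expectedDuration)
    {
        if (expectedDuration <= 0f) return 1f; // no waiting time requested
        return Math.Clamp(stableDuration / expectedDuration, 0f, 1f);
    }

    // Long stability is reached once the stable time covers the expected duration.
    public static bool HasLongStability(float stableDuration, float expectedDuration)
        => stableDuration >= expectedDuration;
}
```

For instance, an anchor that has been stable for 1 second with an expected duration of 4 seconds would report a progress of 0.25 and no long stability yet.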

IRLAnchorInfo necessarily stores a reference to the AnchorTag representing the raw detection results (to allow checking its IsDetected property), but it can optionally use other AnchorTag instances to visualize the stabilized results, or a simplified visual representation. For a single marker, the addon manages three types of anchors:

- detectedIrlAnchorTag: this AnchorTag tracks the actual input anchor position. It is updated in real time and is active only when the position has been detected. It is not intended to be displayed except for debugging purposes, in particular when using ArUco markers, because the current position may show large variations depending on the user's head movements.

- stabilizedIrlAnchorTag: this AnchorTag represents the anchor's stabilized position for the current frame (either the average or the latest position, depending on the stabilization logic). This is the position that should be used by features relying on anchors.

- representationIrlAnchorTag: this AnchorTag displays a representation of the anchor that hides some of the variation: it moves to the stabilized position when the anchor has remained stable at the same position for a sufficient amount of time, or moves slowly toward the last detected position otherwise.
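The behavior of the representation anchor can be pictured with the sketch below. It is only an interpretation of the description above: it assumes that "a sufficient amount of time" corresponds to hasLongStability, and that "moves slowly" means a speed-limited move. RepresentationAnchorSketch, NextPosition and followSpeed are hypothetical names, and System.Numerics is used instead of UnityEngine types so the code runs outside Unity.

```csharp
using System;
using System.Numerics;

public static class RepresentationAnchorSketch
{
    // Returns the next position of the representation anchor for this frame.
    public static Vector3 NextPosition(
        Vector3 current, Vector3 stabilized, Vector3 lastDetected,
        bool hasLongStability, float followSpeed, float deltaTime)
    {
        // Long stability reached: show the stabilized position directly.
        if (hasLongStability) return stabilized;

        // Otherwise move toward the last detected position,
        // by at most followSpeed * deltaTime for this frame.
        Vector3 toTarget = lastDetected - current;
        float distance = toTarget.Length();
        float maxStep = followSpeed * deltaTime;
        if (distance == 0f || distance <= maxStep) return lastDetected;
        return current + toTarget / distance * maxStep;
    }
}
```

Limiting the step per frame is one simple way to hide jitter; a smoothing filter would be an equally valid choice.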

IRLAnchorTracking

The tracking and stabilization of the detected visual marker is handled by the IRLAnchorTracking class.

The detected marker analysis can be either automatic (during every late update, we consider that the detection has been done, and we collect which anchors are detected or not), or be called on demand to synchronize on a detection system that might need more than one frame to finish detecting markers.

It provides the IIRLAnchorTrackingListener interface to notify listeners that an anchor's status has changed.

C#

public interface IIRLAnchorTrackingListener
{
    void OnIRLAnchorSpawn(IRLAnchorTracking irlAnchorTracking, string anchorId);
    void OnIRLAnchorDetectedThisFrame(IRLAnchorTracking irlAnchorTracking, IRLAnchorInfo anchorInfo);
    void OnDetectionStarted(IRLAnchorTracking irlAnchorTracking);
    void OnDetectionFinished(IRLAnchorTracking irlAnchorTracking);
}
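A listener could look like the following sketch, which simply records the callbacks it receives. The IRLAnchorTracking and IRLAnchorInfo classes declared here are empty stand-ins so that the example compiles on its own (the add-on provides the real ones), LoggingAnchorListener is a hypothetical name, and how a listener is registered with IRLAnchorTracking is not covered here.

```csharp
using System;
using System.Collections.Generic;

// Stand-ins for the add-on types, reduced to what this sketch needs.
public class IRLAnchorTracking { }
public class IRLAnchorInfo
{
    public bool isPoseStable;
    public bool hasLongStability;
}

public interface IIRLAnchorTrackingListener
{
    void OnIRLAnchorSpawn(IRLAnchorTracking irlAnchorTracking, string anchorId);
    void OnIRLAnchorDetectedThisFrame(IRLAnchorTracking irlAnchorTracking, IRLAnchorInfo anchorInfo);
    void OnDetectionStarted(IRLAnchorTracking irlAnchorTracking);
    void OnDetectionFinished(IRLAnchorTracking irlAnchorTracking);
}

// Hypothetical listener that keeps a trace of every callback.
public class LoggingAnchorListener : IIRLAnchorTrackingListener
{
    public readonly List<string> Log = new List<string>();

    public void OnIRLAnchorSpawn(IRLAnchorTracking irlAnchorTracking, string anchorId)
        => Log.Add("spawn:" + anchorId);

    public void OnIRLAnchorDetectedThisFrame(IRLAnchorTracking irlAnchorTracking, IRLAnchorInfo anchorInfo)
    {
        // Only anchors with long stability are treated as usable in this sketch.
        Log.Add(anchorInfo.hasLongStability ? "detected:long-stable" : "detected:unstable");
    }

    public void OnDetectionStarted(IRLAnchorTracking irlAnchorTracking) => Log.Add("started");

    public void OnDetectionFinished(IRLAnchorTracking irlAnchorTracking) => Log.Add("finished");
}
```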

IRLAnchorTracking computes the anchor's average position using the latest detected poses and estimates the anchor's stability. Several parameters allow you to adjust the sensitivity:

- historyDuration: duration, in seconds, of the history, which determines the number of previous positions taken into account when calculating the average position. To convert the duration to a number of positions, the maximum expected FPS can be provided in maxFps.

- positionStabilityThreshold & rotationStabilityThreshold: allowed deviation before considering that an anchor's position is no longer stable.

- maxDetectedToStabilizedDistanceForLongStability: the maximum distance between the anchor's stable position and its last detected position, beyond which long-term stability is no longer considered valid.

- expectedDetectedAnchorsStabilityDuration: the duration required to consider that a stable anchor (isPoseStable is true) has been stable long enough (so hasLongStability can be set to true).
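To make the role of historyDuration, maxFps and positionStabilityThreshold more concrete, here is a simplified, position-only sketch. It assumes that the history size is historyDuration multiplied by maxFps and that a pose is stable when every sample of the history lies within the threshold of the average; the add-on's actual criteria may differ. PositionStabilizerSketch is a hypothetical class, written with System.Numerics so that it runs outside Unity.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

public class PositionStabilizerSketch
{
    private readonly Queue<Vector3> history = new Queue<Vector3>();
    private readonly float positionStabilityThreshold;

    public int Capacity { get; }
    public Vector3 Average { get; private set; }
    public bool IsPoseStable { get; private set; }

    public PositionStabilizerSketch(float historyDuration, float maxFps, float positionStabilityThreshold)
    {
        // Convert the history duration (seconds) into a number of samples.
        Capacity = Math.Max(1, (int)Math.Ceiling(historyDuration * maxFps));
        this.positionStabilityThreshold = positionStabilityThreshold;
    }

    public void AddDetection(Vector3 position)
    {
        // Keep only the most recent samples.
        history.Enqueue(position);
        while (history.Count > Capacity) history.Dequeue();

        // Average of the positions currently in the history.
        Vector3 sum = Vector3.Zero;
        foreach (Vector3 p in history) sum += p;
        Average = sum / history.Count;

        // Stable when no sample deviates from the average by more than the threshold.
        IsPoseStable = true;
        foreach (Vector3 p in history)
        {
            if (Vector3.Distance(p, Average) > positionStabilityThreshold)
            {
                IsPoseStable = false;
                break;
            }
        }
    }
}
```

A longer history smooths more but reacts more slowly when the marker really moves, which is the trade-off these parameters expose.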

Use cases

Once stable markers are detected in real life and mapped to positions in the virtual scene, this data can be used for various use cases.

Colocalization

In this use case, the visual marker is used to colocalize users who are physically in the same room. In other words, it makes sure that, in a mixed reality application, a real-life user seen through the camera appears at the same position as their avatar in the virtual scene.

Colocalization types

Several cases can occur regarding colocalization scenarios:

- all users are in the same real-life room

- users are split among several rooms in different locations

- several markers are present in one real-life room (to cover the needs of large rooms)

- the position of the markers in the virtual scene is predefined (to place a predefined virtual overlay on the real-life room)

- every real-life room has only one marker, but people can move from one room to another by walking around their location

All those cases are supported by the following implementation.

Note: the add-on also contains a simple AnchorBasedColocalization class that does not require network synchronization. It can be used, for instance, if there is just one common room and if the positions of the detectable anchors are all predefined and hardcoded in the Unity scene. Since this class is less flexible, it is mostly left in the add-on for reference purposes.

Rooms logic

To cover all those cases, the add-on introduces the concept of rooms, defined by their room ids. A room id is a temporary identifier of a real-life room.

Users can be detected in a room, and so can anchors.

In addition, the positions of the users and of the detected anchors need to be shared on the network.

If further information reveals that two room ids describe the same real-life location, the rooms can be merged, with only one room id remaining.

This occurs when:

- a first user U1 detects an anchor A1, creating room R1, and sharing a network anchor NA1 storing its position

- a second user U2 detects another anchor A2, creating room R2, and sharing a network anchor NA2 storing its position

- the second user U2 also detects the anchor A1.

When such a room merge occurs:

- the room id of the users and anchors changes to match the room id of the merged room. Here, only the room R1 will remain, and both anchors and both users will be associated with this room

- the user who initiated the merge is moved. Here, the Unity scene position of U1 won't change, but the position of U2 will, so that the position of the anchor A1 relative to U2 does not change, while A1 now matches the NA1 position shared by U1

- room R2 is destroyed

- if other users were with U2 in room R2, they have to move in the same way as U2
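The move applied to U2 can be expressed as a rigid transform. The sketch below is one possible formulation, not the add-on's code: given the current rig pose of U2, the pose at which U2 detects A1, and the NA1 pose shared by U1, it computes the rig pose that keeps A1 at the same place relative to U2 while making it coincide with NA1. Pose, RoomMergeSketch and RealignRig are hypothetical names, and System.Numerics is used so the code runs outside Unity.

```csharp
using System;
using System.Numerics;

public struct Pose
{
    public Vector3 Position;
    public Quaternion Rotation;

    public Pose(Vector3 position, Quaternion rotation)
    {
        Position = position;
        Rotation = rotation;
    }
}

public static class RoomMergeSketch
{
    // Returns the new rig pose such that the anchor seen at detectedAnchor
    // ends up at networkAnchor, without changing the anchor's pose relative to the rig.
    public static Pose RealignRig(Pose rig, Pose detectedAnchor, Pose networkAnchor)
    {
        // Rotation that brings the detected anchor orientation onto the network anchor orientation.
        Quaternion delta = Quaternion.Normalize(
            networkAnchor.Rotation * Quaternion.Inverse(detectedAnchor.Rotation));

        // Rotate the rig around the detected anchor, then translate it onto the network anchor.
        Vector3 position = networkAnchor.Position
            + Vector3.Transform(rig.Position - detectedAnchor.Position, delta);
        Quaternion rotation = Quaternion.Normalize(delta * rig.Rotation);

        return new Pose(position, rotation);
    }
}
```

The same delta can be reused for everything that has to follow the merge (other members, anchors of the merged room), which is why storing the rig pose before and after the move is enough to replay it.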

Supporting classes

A NetworkIRLRoomMember represents a user in a colocalization scenario. Its networked RoomId property stores the id of the room in which this user is located: at start, while no information is known about anchors, a unique room id is generated for this user, who is alone in this room. This class should be placed on the networked rig spawned for each user.

A NetworkedIRLRoomAnchor shares information about a detected real-life anchor. In addition to an AnchorId networked property storing its anchor id, its networked RoomId property stores the id of the room in which this anchor is located: when an anchor is stable and detected for the first time, a networked anchor is created, and its room id is set to the room id of the user who detected it.

Every NetworkIRLRoomMember stores the last NetworkedIRLRoomAnchor it detected in the local RoomAnchorToFollow property. If the anchor is moved, the member will move to preserve its offset to the anchor.

The IRLRoomManager class tracks all NetworkIRLRoomMember and NetworkedIRLRoomAnchor (either from the local user or remote users), and stores them in their room. It watches room id changes on them to adapt its rooms cache content (the class is not networked and rebuilds locally an overview of all rooms based on the networked members and anchors data and updates).

Anchor detection logic

The IRLRoomManager requires an IRLAnchorTracking in the scene, and subscribes to its events.

When an anchor is detected, and its position has a long stability:

- 1 - if it is the first time that this anchor id is detected (locally or by a remote user):

  - a NetworkedIRLRoomAnchor is spawned, and placed at the detected position

- 2 - if a known network anchor (created locally or by a remote user) has the same anchor id:

  - 2.1 - if this network anchor is in the same room as the local user: we don't do anything

  - 2.2 - if this network anchor is not in the same room as the local user: we consider that a colocalization has to be triggered

    - the user position is changed so that the anchor they see matches the networked anchor position. The rig positions before and after the merge are stored in NetworkIRLRoomMember's networked properties, to ensure that the same move can then be applied by other members in the room

    - we plan a room merge
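The decision tree above can be summarized by the following sketch. It only captures the branching, under the assumption that the known network anchors can be looked up by anchor id; AnchorDetectionAction, AnchorDetectionSketch and Decide are hypothetical names, and the actual spawning, rig move and room merge are left out.

```csharp
using System;
using System.Collections.Generic;

public enum AnchorDetectionAction
{
    SpawnNetworkAnchor,     // case 1: anchor id never seen before
    DoNothing,              // case 2.1: known anchor, same room as the local user
    TriggerColocalization   // case 2.2: known anchor, different room
}

public static class AnchorDetectionSketch
{
    // knownAnchorRooms maps the anchor id of each known network anchor
    // (created locally or remotely) to the room id it currently belongs to.
    public static AnchorDetectionAction Decide(
        string anchorId,
        string localUserRoomId,
        IReadOnlyDictionary<string, string> knownAnchorRooms)
    {
        if (!knownAnchorRooms.TryGetValue(anchorId, out string anchorRoomId))
            return AnchorDetectionAction.SpawnNetworkAnchor;

        if (anchorRoomId == localUserRoomId)
            return AnchorDetectionAction.DoNothing;

        return AnchorDetectionAction.TriggerColocalization;
    }
}
```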

When a room merge occurs:

- all users and anchors in the previous rooms also change their room id to the new merged room

- all anchors are moved by their state authority, applying the same move as the one made by the user who triggered the colocalization

Note: to detect a room merge, we watch for member room id changes. To avoid cascading merges, NetworkIRLRoomMember contains a RoomPresenceCause networked property that makes it possible to determine which user changed their room id because they initiated the room merge: the anchor moves will thus only occur once.

Finally, all NetworkIRLRoomMember try to preserve their position offset to the last NetworkedIRLRoomAnchor they detected (stored in RoomAnchorToFollow). So when the room anchors have been moved during the room merge, the other members will also move to stay in sync with their anchor position.

Special cases and options

Hardcoded networked anchors

It is possible to place NetworkedIRLRoomAnchor objects directly in the scene without actually detecting anchors (or to spawn them from some kind of calibration data).

For such anchors, their IsChangingRoomForbidden has to be set to true: it warns the IRLRoomManager that a room containing it cannot be merged, and anchors and members should stay in it.

This makes it possible to force the user position to a specific one, even for the first user detecting a given anchor.

This is relevant in scenarios where the room has been calibrated and the positions of the anchors relative to each other are well known: for instance, it allows mapping a predefined virtual decoration over a real-life room.

Predefined room id

In the default implementation, when a user sees several anchors, they are all assumed to be in the same room, which triggers room merges.

However, if the colocalization scenario might include moving from one room to another, for instance if the user can roam in a building with several distinct rooms, it is possible to predefine an anchor room id, to prevent it from being merged into another room: in this case, the user will change room, leaving the previous one unchanged (no merge will occur).

Note that only one anchor can be present in a given room in this scenario: if two anchors were predefined in the same room, we would not know how they are placed relative to each other without the room merge mechanism.

To set predefined anchors, there are 2 options:

- in IRLRoomManager, fill the predefinedTagRoomMappings with pairs of anchor id/room id

- in IRLRoomManager, provide a predefinedRoomSuffixSeparator: if an anchor id contains this string, the anchor id string will be split around this separator, and the right side will be considered the room id. For instance, with a "-Room-" predefinedRoomSuffixSeparator, a QR code with the payload Anchor18-Room-R3 will be predefined in the room "R3"

For such anchors, the IsChangingRoomForbidden of their NetworkedIRLRoomAnchor component is automatically set to true, to prevent their room from being merged: anchors and members will stay in it.
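The separator-based option can be illustrated with a short sketch of the string handling. It assumes that the split happens at the first occurrence of the separator and that an anchor id without the separator has no predefined room; PredefinedRoomSketch and RoomIdFromAnchorId are hypothetical names, not the add-on's API.

```csharp
using System;

public static class PredefinedRoomSketch
{
    // Returns the room id encoded in the anchor id (the part to the right of the separator),
    // or null when the anchor id does not contain the separator.
    public static string RoomIdFromAnchorId(string anchorId, string separator)
    {
        if (string.IsNullOrEmpty(separator)) return null;

        int index = anchorId.IndexOf(separator, StringComparison.Ordinal);
        if (index < 0) return null;

        return anchorId.Substring(index + separator.Length);
    }
}
```

With the separator "-Room-", the payload Anchor18-Room-R3 yields the room id "R3", while a payload such as Anchor18 yields no predefined room.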

Object repositioning

In this use case, after a calibration process, when a marker is detected, a virtual object can be spawned and repositioned according to the detected marker's position and the calibration data.

This repositioning is managed by the AnchorBasedObjectSynchronization class.

The calibration data, stored in ModelCalibration, describes how the anchors are expected to be placed on the object, by providing their relative position and rotation.

The AnchorBasedObjectSynchronization class triggers a repositioning when it receives stabilized anchor positions, provided by a sibling IRLAnchorTracking component, if the detected anchor ids match the anchor ids expected in the calibration.

If several expected anchors are detected at the same time, a virtual average anchor is used (one for the detected anchors, and one for the matching calibration anchors), obtained by averaging the data of all the anchors.

Then, with this single reference anchor from the calibration and this single anchor from the detection, the object is moved to make them coincide.
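The averaging and alignment steps can be sketched as follows, under simplifying assumptions: calibration anchor poses are expressed in the object's local space, detected poses in world space, and rotations are averaged with a normalized sum (an approximation that is only reasonable when the rotations are close to each other). ObjectRepositioningSketch, AveragePose and ObjectPose are hypothetical names, and System.Numerics replaces UnityEngine types so the code runs outside Unity.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

public static class ObjectRepositioningSketch
{
    // Builds a virtual average anchor from several anchor poses.
    public static (Vector3 position, Quaternion rotation) AveragePose(
        IReadOnlyList<(Vector3 position, Quaternion rotation)> poses)
    {
        Vector3 positionSum = Vector3.Zero;
        Vector4 rotationSum = Vector4.Zero;
        Quaternion reference = poses[0].rotation;

        foreach (var (position, rotation) in poses)
        {
            positionSum += position;
            // q and -q encode the same rotation: flip the sign to stay close to the reference.
            float sign = Quaternion.Dot(reference, rotation) < 0f ? -1f : 1f;
            rotationSum += sign * new Vector4(rotation.X, rotation.Y, rotation.Z, rotation.W);
        }

        Quaternion average = Quaternion.Normalize(
            new Quaternion(rotationSum.X, rotationSum.Y, rotationSum.Z, rotationSum.W));
        return (positionSum / poses.Count, average);
    }

    // World pose of the object such that the calibration anchor (object-local)
    // coincides with the detected anchor (world).
    public static (Vector3 position, Quaternion rotation) ObjectPose(
        (Vector3 position, Quaternion rotation) calibrationAnchor,
        (Vector3 position, Quaternion rotation) detectedAnchor)
    {
        Quaternion rotation = Quaternion.Normalize(
            detectedAnchor.rotation * Quaternion.Inverse(calibrationAnchor.rotation));
        Vector3 position = detectedAnchor.position
            - Vector3.Transform(calibrationAnchor.position, rotation);
        return (position, rotation);
    }
}
```

Averaging both sides with the same set of anchor ids is what keeps the two virtual anchors comparable: the calibration average must only include the anchors that were actually detected.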

Please note that the repositioning algorithm is currently optimized to detect a static object and to compensate for head movements using a stabilization algorithm.

This is especially important when tracking ArUco markers, where marker positions, reported at a high frequency, can vary significantly.

It is less necessary with QR code detection through the Meta API, because the reported position is already stabilized.

Demo

Demo scenes for the repositioning and colocalization use cases can be found in the Assets\Photon\FusionAddons\Anchors\Demo\Scenes\ folder.

Dependencies

- XRShared addon

- Meta MRUK and XR SDK

- [Optional] OpenCV

- [Optional] Screensharing add-on (it includes the PassthroughCameraSamples Meta classes, which are required to access the camera capture code when using OpenCV)

Download

The latest version of this addon is included in the Industries addon project.

Supported topologies

- shared mode

3rd Party Assets and Attributions

ArUco marker support requires OpenCV, and the actual implementation is based on Takashi Yoshinaga's QuestArUcoMarkerTracking:

- OpenCV for Unity

- QuestArUcoMarkerTracking, MIT License, Copyright (c) 2025 Takashi Yoshinaga

Changelog

- Version 2.0.7: Add option to change the anchor tag display after a long stability detection

- Version 2.0.6:

- Update ArUco and OpenCV support for Meta SDK v83: transition to the latest version of https://github.com/TakashiYoshinaga/QuestArUcoMarkerTracking, which supports Meta SDK v83

- Version 2.0.5:

- Add a safeguard for the QR code reading incompatibility between Meta SDK v78 and MetaOS v83

- Add a safeguard for QR codes with a long payload

- Version 2.0.4: Update scene to use the new WebCamTextureManager Prefab (to avoid guid collision with Meta SDKs V81)

- Version 2.0.3:

- Add visualization option on IRLRoomManager for room associated parts (display just for one member for the remote rooms, all members of remote rooms, all rooms, ...)

- Bug fix when the last member of a room leaves it (anchor removal, room cleanup, ...)

- Version 2.0.2:

- Prefabs refactoring

- Compatibility with Fusion 2.1

- [Beta preview] Possibility to move remote room in colocalization scenario, with NetworkIRLRoomMoveRequester components

- Version 2.0.1:

- Ensure that anchors whose state authority has disconnected receive a new state authority (to continue moving on room merges)

- Bug fix: colocalization could fail in certain scenarios (when a member was following an anchor that it had created, during a room merge triggered by another player) due to an unneeded state authority check

- Version 2.0.0: First release